Introduction

The X chromosome plays a unique role in evolution, being directly exposed to selection pressures due to its hemizygosity in males, leading to unique inheritance patterns. Regions of reduced diversity on the X chromosome could reflect the influence of strong evolutionary forces that are aided by structural features of the genome. Chromatin architecture, particularly the organization into A/B compartments, may provide insights into the functional basis of these patterns.

Here, I analyze the 3D chromatin structure of the X chromosome in rhesus macaque (Macaca mulata) using Hi-C data on the latest reference genome (rheMac10). The analysis extensively combines software tools that emphasize reproducibility, ensuring that the analyses are transparent, portable, and replicable.

Evolution on X

The production of gametes in a sexually reproducing organism is a highly complex process that involves numeruous elements. Spermatogenesis, the process of forming male gametes, involves four stages of differentiation from a germ cell through spermatogonia, pachytene spermatocyte, and round spermatids to spermatozoa (Wang et al. 2019), and is the basis of male reproduction. The specialized cell division of meiosis neatly handles the pairing, recombination, and segregation of homologous chromosomes, thereby ensuring proper genetic distribution. A thorough understanding of the molecular steps of reproduction and how genetic material is inherited is essential in biology, bringing insight to areas such as speciation, population diversity, and infertility.

Sex chromosomes differ from autosomes in several ways, primarily due to their unique inheritance patterns and copy number. The Y chromosome is present only in males, with a single copy per individual. The X chromosome, on the other hand, has a more complex inheritance pattern: males have one copy, while females have two. As a result, X chromosomes spend two-thirds of their time in females and only one-third in males. This skewed ratio influences the dynamics of selection on the X chromosome. Furthermore, in males, the single-copy nature of the X chromosome (hemizygosity) means that any mutations or loss of function are directly exposed, as there is no second copy to compensate. This concept also underpins Haldane’s rule (Haldane 1922), which states that in hybrids of two species, the heterogametic sex (e.g., XY in mammals, ZW in birds) is the first to exhibit reduced fitness, such as sterility, or to disappear entirely. Even a century later, the exact reasons for this phenomenon remain debated, with several hypotheses proposed. These include:

- Y-incompatibility: The Y chromosome must remain compatible with the X chromosome or autosomes.

- Dosage compensation: Hybridization may disrupt crucial dosage compensation mechanisms in heterogametic individuals.

- Dominance: Recessive deleterious alleles may cause sterility when expressed in the heterogametic sex.

- Faster-male evolution: Male reproductive genes may evolve more rapidly than female ones, leading to incompatibilities.

- Faster-X evolution: X-linked loci may diverge more quickly than autosomal loci, contributing to hybrid sterility.

- Meiotic drive: Conflicts between drivers and suppressors on sex chromosomes may result in sterility.

These hypotheses highlight the intricate interplay between sex chromosomes, selection, and hybridization (Cowell 2023). Furthermore, although this phenomenon is observed across various taxa and even kingdoms, the underlying explanations differ, and there is no universal consensus for all species. In many cases, multiple mechanisms from the listed explanations are thought to act in concert (Lindholm et al. 2016). The complexity of selection on the X chromosome remains an area of active research, with numerous studies suggesting strong selection pressures on the X chromosome across primates. This topic is explored in greater detail in the following sections.

Extended Common Haplotypes (ECH) on human X

Incomplete lineage sorting (ILS) occur when all lineages in a population are not completely sorted between two speciation events, resulting in a gene tree incongruent with the species tree (Mailund, Munch, and Schierup 2014). Briefly, it complicates phylogenetic inference as it implies that divergence time does represent speciation time. But, by solving the incongruency with a maximum likelihood approach (see Mailund, Munch, and Schierup 2014), the relation between the ILS proportion, \(p\), time between two speciation events, \(\Delta\tau\), and effective population size, \(N_e\), is given by the formula

\[ N_e = \frac{\Delta\tau}{2} \ln \frac{2p}{3}. \]

Then, by comparing the observed (local) ILS with the expected (e.g. a genomic average), a reduction in \(N_e\) can be used to infer selection. Additionally, as selective sweeps force lineages to coalesce, sweeps will also cause a reduction in ILS. Dutheil et al. (2015) found that the human X chromosome had reduced ILS compared to autosomes on a third of its sequence, fully explaining the low divergence between human and chimpanzees. Reduced ILS co-occur with reduced diversity across the X chromosomes of great apes, including human. Additionally, Neanderthal introgression was depleted in the same regions, and the authors suggest that they are a target of selection, as they rule background selection to be responsible for reduced ILS.

Low-diversity regions on the human X chromosome that also overlap with reduced human-chimp ILS have been hypothesized to arise from selective sweeps between 55,000 (archaic introgression after out-of-Africa event) to 45,000 (Ust’-Ishim man) years ago (Skov et al. 2023). The authors define the time-span by comparing haplotypes of unadmixed African genomes with admixed non-African genomes. Here, they locate megabase-spanning regions on the X chromosome where a large proportion of the non-African population have reduced archaic introgression, hypothesizing that selective sweeps have created these extended common haplotypes (ECHs). The ECHs span 11% of the X chromosome and are shared across all non-African populations. Similar to the low-ILS regions, ECHs exhibit a complete absence of archaic introgression (Neanderthal and Denisovan). Notably, the largest continuous ECH spans 1.8 Mb—more than double the size of the selective sweep associated with the lactase persistence gene, which represents strongest selective sweeps documented in humans (Skov et al. 2023; Bersaglieri et al. 2004). If such strong selection underlies the observed low diversity, it suggests that factors beyond fitness effects alone may be contributing to this pattern. This raises the possibility that the observed patterns of low diversity may result not only from strong selective sweeps but also from several factors in combination. Structural elements, such as physical linkage between the regions within ECHs, may facilitate coordinated selection, maintaining reduced diversity over extended genomic stretches. Additionally, sex-specific pressures, such as those linked to the unique transmission and evolutionary dynamics of the X chromosome, could contribute to this pattern. Further investigation is needed to disentangle the roles of these mechanisms in shaping the genomic landscape of the X chromosome.

Disproportionate minor parent ancestry on baboon X

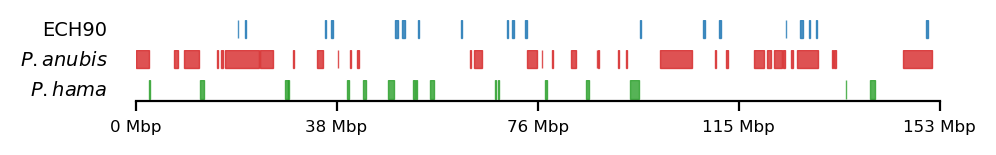

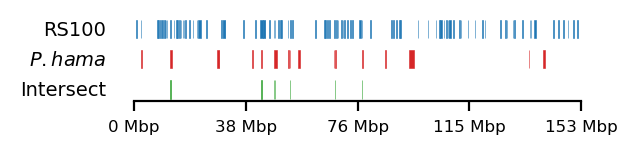

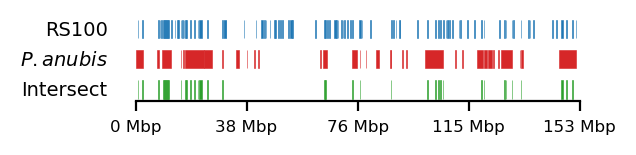

As mentioned above, low ILS and reduced diversity is consistent across all great apes, and thus the regions have been investigated in baboons as well. Sørensen et al. (2023) have mapped the interspecies gene flow between baboon genomes in their evolutionary history, which revealed male-driven admixture patterns in six different baboon species. The authors infer a male-driven admixture from mismatching phylogenies of the mitochondrial DNA and the nuclear DNA. This indicates a role of nuclear swamping, where the nuclear DNA from one species progressively replaces that of another through repeated hybridization and backcrossing, while the mitochondrial DNA, inherited maternally, remains unaffected. This pattern suggests that male-driven gene flow plays a significant role in shaping the genomic landscape of these regions, potentially impacting diversity and co-ancestry across species. Sørensen et al. (2023) compare the ratio of admixture between chromosome 8/X across the populations, which support the same pattern. Recently, in a yet unpublished analysis by Munch (2024), exceptionally large regions (up to 6 Mb, averaging 1 Mb) were identified on X in olive baboon (Papio anubis), where either all individuals had olive ancestry, or 95% of the individuals had hamadryas ancestry, significantly diverging from the background proportions, suggesting that these regions may be under strong selective pressures, are influenced by structural genomic features that limit recombination, or potentially both. Such patterns suggest that certain chromosomal regions are either preserved due to adaptive advantages or subjected to sex-biased gene flow, further reinforcing the impact of male-driven admixture on shaping genomic variation. These findings highlight the complexity of admixture dynamics and their potential to create localized genomic signatures that deviate from overall ancestry proportions.

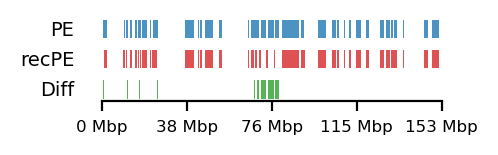

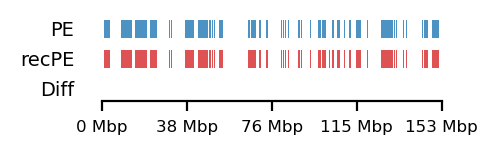

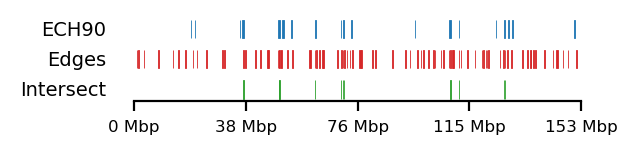

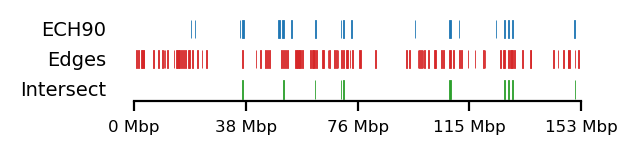

Sørensen et al. (2023) highlights the importance of studying these populations, as they serve as models for understanding genomic separation in archaic hominin lineages while inhabiting comparable geographic ranges. Studying closely related, extant primate species provides a unique opportunity to gain insight into how hominin (human, Neanderthal, Denisovan) phylogenies were formed. By analyzing the intricate effects of population structure, migration, and selection in these species, we can draw parallels and infer events in our own archaic evolutionary history. See Figure 1 for a genomic overview of the abovementioned sets of regions.

Chromatin Architecture

The specific regions under strong selection identified across primate species, including humans and baboons, are all located on the X chromosome and span megabase-scale genomic regions. As mentioned above, alternative mechanisms, such as the chromosomal architecture and its rearrangements, could play critical roles in facilitating, regulating, or buffering these regions from selective forces.

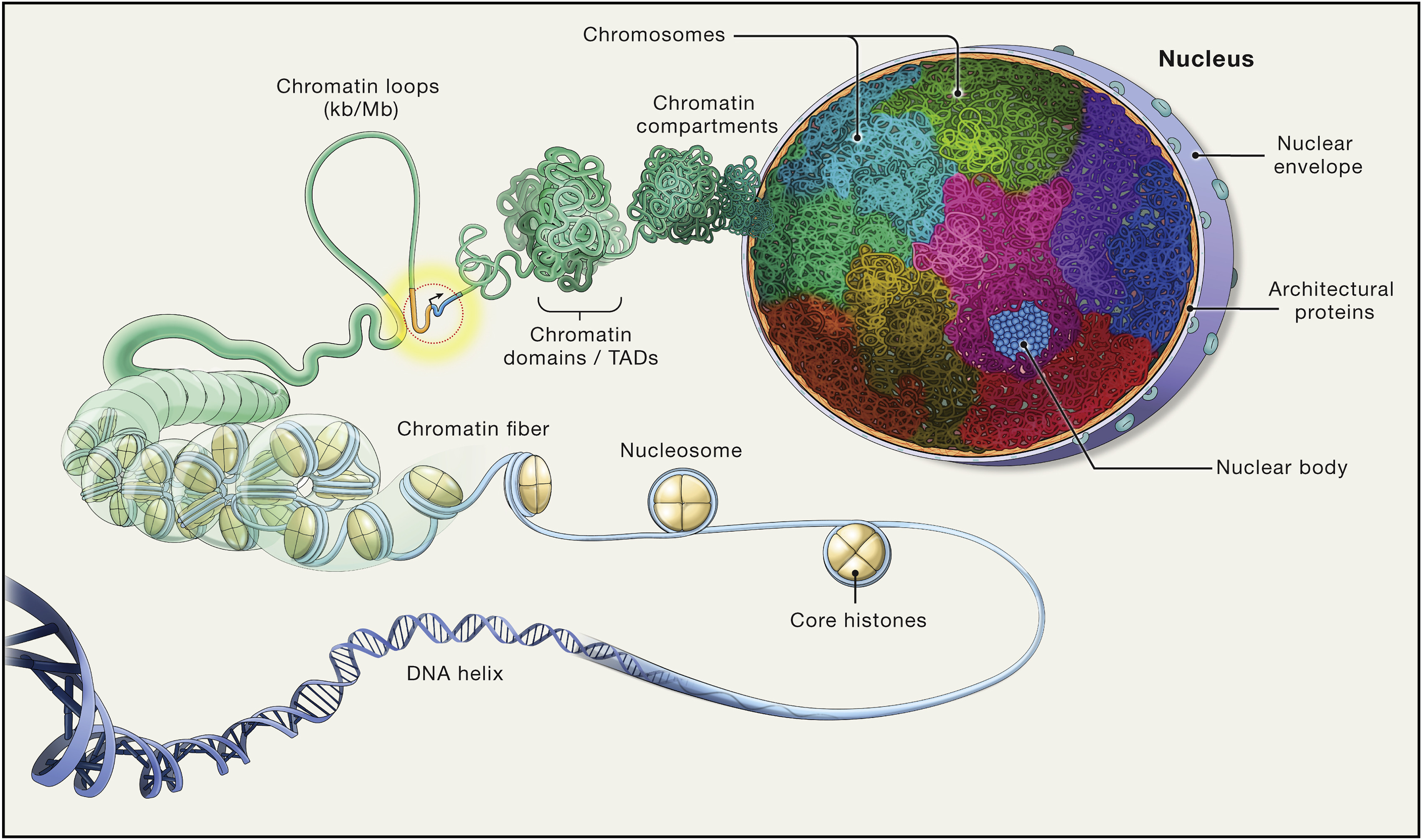

Therefore, understanding the genome organization and variation could gain insights regarding mechanisms of selection as well. Chromatin has long since been implicated in gene regulation and the functional state of the cell (Lieberman-Aiden et al. 2009), as the three-dimensional structure of the chromosome can bring distant regions in close proximity, and disrupting the organization can lead to development abnormalities (Dixon et al. 2015). Chromatin is hierarchically compartmentalized, meaning multiple orders of organizaion can be nested under each other (see Figure 2). At the large end, compartments can span multiple megabases (Lieberman-Aiden et al. 2009), and in the small end as little as 500 base pairs have been showed to aid subgenic structural organization. Even at sub-megabase scales, at the level of topologically associating domains (TADs) or chromatin loops (Ramírez et al. 2018; Zuo et al. 2021), the structure helps segregate regulatory elements and ensure proper function.

Analyzing 3D chromatin data from rhesus macaques, a widely used primate in experimental studies, could answer the question of whether regions under selection are intertwined with chromosomal organizational features. Studying the chromosomal architecture through gametogenesis, particularly spermatogenesis, could provide valuable insights about meiotic recombination and its relationship to genome organization. If they correlate with regions under selection across primates, we have new mystery to explain.

Wang et al. (2019) found that through the stages of spermatogenesis, chromatin compartmentalization undergo massive reprogramming, going from megabase-spanning compartments through smaller, refined compartments and back to the megabase compartments. The reprogramming is conserved between rhesus macaque and mouse, indicating a very important feature of the organization, but the author does not test whether genomic positions of the compartments are conserved between species. Extending their analysis of chromatin compartmentalization through spermatogenesis could make an obvious starting point for investigating the relationship between yet another set of megabase-spanning regions and those of strong selection identified above.

3C: Chromatin Conformation Capture

The first method developed to capture long-range interactions between pairs of loci was 3C, which uses spatially constrained ligation followed by locus-specific PCR (Lieberman-Aiden et al. 2009). Subsequent advancements introduced inverse PCR (4C) and multiplexed ligation-mediated amplification (5C). A limitation common to these methods is their inability to perform genome-wide, unbiased analyses, as they require predefined pairs of target loci.

DNA can be organized into different structural levels. 3C focuses on identifying the higher-order organization within the nucleus, such as when the 30 nm chromatin fiber folds into loops, Topologically Associating Domains (TADs), and chromatin compartments.

Hi-C: High-Throughput 3C

The introduction of the Hi-C (high-throughput 3C) method (Lieberman-Aiden et al. 2009) opened new possibilities for exploring the three-dimensional organization of the genome, as deep-sequencing technologies combined with high-performance computing allow for genome-wide analysis that is unbiased. Lieberman-Aiden et al. (2009) show that when combining spatially constrained ligation with deep parallel sequencing and subsequent analysis, we can infer distinct chromosomal territories as intrachromosomal contacts were significantly less abundant than interchromosomal contacts. The method also confirmed the spatial separation of two chromatin conformations in its active (open) state or inactive (closed) state, as they were significantly correlated with distances measured by fluorescence in-situ hybridisation (FISH). The two comformations were termed A- and B-compartments, respectively, and A defined to positively correlate with gene density. Finally, they showed that loci are physically more proximal when they belong to the same compartment implying that Hi-C reads serves well as a proxy for distance. Later, several smaller-order domains were inferred with the same method, such as topologically associating domains (TADs) and chromatin loops. Here, we narrow our focus on the largest of the structures, compartments, that is known to determine availability to transcription factors, thus making an A compartment active—and the B compartment inactive.

Hi-C Library preparation

A specialized protocol for preparing the DNA library is necessary (Lieberman-Aiden et al. 2009, fig. 1a). Briefly, formaldehyde is used to crosslink spatially adjecent chromatin. Restriction enzyme HindIII is used to digest the crosslinked chromatin, leaving sticky ends, 5-AGCT-3, that are filled and biotinylated with a polymerase (using either biotinylated A, G, C, or T). The strands are ligated in highly dilute conditions, which is favoring the ligation of the two crosslinked strands, forming chimeric, biotinylated strands. Upon ligation, the restriction site is lost as a biotinylated 5-CTAG-3 site (also referred to as the ligation junction) is formed. Lastly, the ligation junctions are isolated with streptavidin beads and sequenced as a paired-end library.

To be able to create stage-resolved Hi-C library of spermatogenetis, several steps have to be performed on the samples before crosslinking. First, the samples have to be treated immediately after harvesting to ensure viable cells. Secondly, the samples have to be purified to accurately represent each stage og spermatogenesis. Specifically, the data for this project (Wang et al. 2019, acc. GSE109344) preluded the library preparation protocol by sedimentation-based cell sorting to separate live spermatogenic cells into different stages of differentiation, namely spermatogonia, pachytene spermatocyte, round spermatid, and spermatozoa. Then, the cells were fixed in their respective state before crosslinking. The authors use their own derived method for library preparation, termed small-scale in-situ Hi-C, allegedly producing a high-quality Hi-C libary from as little as 500 cells (capturing the variance of millions of cells).

Initially, Hi-C library preparation was designed to generate molecules with only a single ligation site in each, but with advancements in sequencing technology (‘short-reads’ can now span several hundreds of base pairs) and the shift to more frequently cutting restriction enzymes for higher resolution results in multiple ligation events per sequenced molecule (Open2C et al. 2024), which is adressed in the section below.

Hi-C Data Analysis

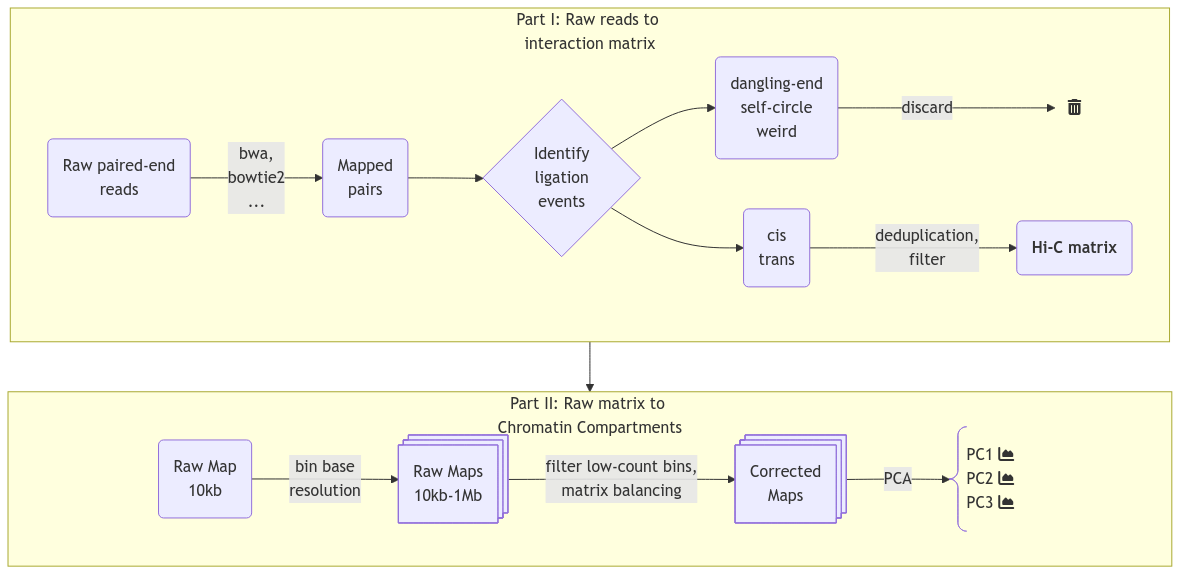

The analysis of the read-pairs of a Hi-C library is divided into several smaller tasks, see Figure 3.

The reads must be aligned to the reference in such a way that the intentional chimeric read-pairs (as per above-mentioned protocol) are rescued, and unintentional read-pairs are discarded. That is, we must make sure they represent ligation junction of adjecent chromatin segments, and not technical artefacts or unintentional (random) fusions of unrelated DNA.

Aligning the Hi-C reads

The main difference between Hi-C libraries and standard paired-end libraries is the high fraction of chimeric reads in Hi-C. As a contact pair is crosslinked and ligated before sequencing, chimeric reads occur as a feature, and standard mapping techniques seeks to filter out this type of reads (Lajoie, Dekker, and Kaplan 2015). Thus, we need specialized tools for rescuing chimeric reads. That said, we have to be cautious distinguishing the intended chimerism for Hi-C and that of technical artefacts. Any software for local alignment can be used for aligning reads from a Hi-C library. However, one should make sure to disable paired-end rescue mode if possible, otherwise each read in a pair (each mate) should be aligned separately (Lajoie, Dekker, and Kaplan 2015). This removes the assumption that the distance between mates fits a known distribution because the genomic sequences originate from a continuous DNA-fragment. For example, the bwa-mem (Li 2013) implementation of this (the -P option) activates the Smith-Waterman algorithm to rescue missing hits, but disables the search of hits that fit a ‘proper’ pair. After alignment, each read is typically assigned to the nearest restriction fragment to enable categorization of pairs into different categories.

Interestingly, this last step is not included by default in pairtools, as Open2C et al. (2024) observe very similar statistical properties on pairs that are either close or distant from the nearest restriction site. Thus, restriction fragment filters are not needed, and instead, a simple filter is applied against short-distance pairs that is automatically calibrated.

Identifying and Storing Valid Hi-C Pairs

One should be cautious when filtering invalid from valid pairs, as they are not easily distinguished. A ligation event will be categorized into one of five categories (see Table 1): dangling-end, self-circle, weird, intrachromosomal (cis), and interchromosomal (trans) (Bicciato and Ferrari 2022, Ch. 1). Either dangling-end or self-circle events are reported if a read-pair maps to the same restriction fragment depending on the orientation, and deemed uninformative (Lajoie, Dekker, and Kaplan 2015). Usually, weird events are demeed uninformative as well, as it is challenging to distinguish a sequencing error from the result of a diploid fragment. PCR duplicates should be discarded as well, having either identical genomic sequence, or sharing exact 5’ alignment positions of the pair (Lajoie, Dekker, and Kaplan 2015; Bicciato and Ferrari 2022, Ch. 1). The probability that such pairs are valid (i.e. there are multiple of the same pairs) is very low. We also have to distinguish between molecules with only a single ligation event (one-way contact) or multiple ligation events (multi-way contacts). For that, a descision should be made on whether to 1) discard molecules with multiple ligations, 2) report one of the ligations (e.g. the 5’-most in both directions), or 3) report all events on a molecule.

| Event name | Explanation |

|---|---|

| Dangling-end | Non-digested collinear fragments. Fraction can be high. |

| Self-circle | Collinear fragment(s) have circularized. Very low fraction could indicate unsuccesful ligation. |

| Weird | Mates have the same orientation on the reference. Is not possible with single copy fragment. Either sequencing errors or diploid fragments1. |

| Cis | Pairs from the same chromosome (intrachromosomal) |

| Trans | Pairs from distinct chromosomes (interchromosomal) |

Quality Control and Interaction Matrices

To determine the quality of the Hi-C library, most tools generate quality control log files at some point during the filtering steps, which can then be aggregated and analyzed (with e.g. MultiQC Ewels et al. (2016)). The ratios between the different ligation events can be informative about the quality of the Hi-C library. Here, both the distribution of discarded reads across categories, as well as the ratios between cis/trans interactions for a certain organism provide information about the library. For example, the biases of different aligners might be captured by comparing the reason why reads are discarded between two different aligners, as well as whether or not there is a preference of cis or trans in an aligner itself. This allows for evaluating the mapping parameters as well as the filters applied downstream. Additionally, \(P(s)\), the contact probability as a function of genomic separation can be inspected as it should decay with increasing distance. The trans/cis-ratio can sometimes be a good indicator of the noise level in the library, and additionally, the level of random ligation events can be quantified by counting the number of trans events occurring to mitochondrial genome. They should not occur naturally, as the mitochondrial genome is separated from the DNA in the nucleus. This method has some pitfalls that should be controlled for; some parts of the mitochondrial genome can be integrated into the host genome, and mitochondrial count may differ between cell-stages.

Typically, a filter against low mapping quality is applied on the data before constructing the interaction matrix (Hi-C matrix), and a conventional threshold is \(mapq < 30\) (Bicciato and Ferrari 2022). However, a considerable amount of reads do not pass that threshold, and thus we risk discarding potential valid information and should make sure to have enough data. Consequently, HicExplorer defaults to a lower threshold (\(mapq < 15\)), and pairtools enforces no filter by default, but recommends setting this manually (starting at \(mapq < 30\)).

A Hi-C interaction matrix simply maps the frequency of interactions between genomic positions in a sample. The maximum resolution of a Hi-C matrix is defined by the restriction enzyme, where the size of the restriction site (probabilistically) determines average space between each cut. With a 4 bp restriction site, the fragments will average \(4^4 = 256 bp\) and similarly \(4^6 = 4096 bp\) for a 6 bp restriction site. This leads to ~12,000,000 and ~800,000 fragments, respectively. Very deep sequencing is required to achieve enough coverage to analyze the interaction matrix at the restriction fragment resolution, but, usually, such high resolution is not required. Therefore, it is common practice to bin the genome into fixed bin sizes, which also enables a more efficient handling of the data if the full resolution is not needed (e.g. when plotting large regions such as a whole chromosome). The conventional format to store a Hi-C matrix, consisting of large multidimensional arrays, is HDF5. Each HDF5 file can store all resolutions and metadata about the sample, resolutions typically ranging from 10kb to 1Mb. Typically, the stored resolutions should be multiples of the chosen base-resolution, as the lower resolutions are constructed by recursive binning of the base resolution. cooler (Abdennur and Mirny 2020) neatly offers efficient storage with sparse, upper-triangle symmetric matrices and naming-conventions of the groups in their .h5-based file format, .cool, and they provide a Python class Cooler as well for efficiently fetching and manipulating the matrices in Python.

Inferring from the matrix (Calling Compartments)

The raw frequency matrices are generally not very informative, as the contact frequencies vary greatly between bins and contain biases in addition to the \(P(s)\) decay, which results in a diagonal-heavy matrix with high amount of noise the further we travel from the diagonal. Therefore, to analyze the three-dimensional structure of the chromatin, a method for correcting (or balancing) the raw Hi-C matrix has to be applied. It is unadvisable to correct low-count bins as it will greatly increase the noise, or to correct very noisy bins, or very high-count bins. Therefore, some bin-level filters are applied before balancing (Lajoie, Dekker, and Kaplan 2015);

- Low-count bins are detected by comparing bin sums to the distribution of bin sums with a percentile cutoff,

- Noisy bins are detected by comparing bin variance to the variance distribution of all bins (and percentile cutoff), and

- Outlier point-interactions are removed (a top-percentile of bin-bin interactions)

Iterative Correction and Eigendecomposition (ICE) (Imakaev et al. 2012) is a widely used method for correcting multiplicative biases in Hi-C data. ICE operates on the assumption that all loci should have roughly equal visibility across the genome, meaning that the total number of interactions per locus should be uniform. It corrects the raw interaction matrix by iteratively scaling rows and columns until they converge to a consistent coverage. By leveraging pairwise and genome-wide interaction data, ICE calculates a set of biases for each locus and normalizes the interaction frequencies accordingly. This process results in a corrected interaction matrix with uniform coverage, smoother transitions, and reduced visibility-related biases. Notably, ICE does not distinguish between different sources of bias, instead calculating a collective bias for each locus. Imakaev et al. (2012) show that known biases are factorizable by comparing their results to predictions of restriction fragment biases, GC content, and mappability from a computationally intensive probabilistic approach. By showing that the product of those known biases explain \(>99.99%\) of the variability in their bias estimation, they argue that both known and unknown biases will be captured with their iterative correction method (also denoted matrix balancing).

Even with a binned, filtered, and balanced matrix, we are still left with the challenge of translating the matrix into biologically relevant inferations. Importantly, we have to remember that the matrix arise from a collection of cells and that the interaction frequency cannot be translated to a fraction of cells. Additionally, the effect from averaging interaction patterns can cause both individual patterns to be burried and the average pattern to show a pattern that does not exist in any of the single cells. Therefore, when pooling matrices one must make sure that the samples are as similar as possible (e.g. the same differentiation stage and so on). We can also not distinguish interactions that either co-occur in the same cell or ones that are mutually exclusive. Lastly, the way interaction patterns are defined poses a challenge; we define the chromatin compartments to be the output of a method, the ‘E’ in ‘ICE’, eigendecomposition, not as a specific pattern that we can explicitly search for. Although experimentally verified to tightly correlate with chromatin states (Lieberman-Aiden et al. 2009), the inferred compartments vary with different methods of calculating the eigenvector, as dicussed in Section 2.3.0.9 and Section 3.2.3. To further complicate the challenge, interaction patterns on different scales co-exist and are difficult to disentangle without simplifying assumptions, such as assuming that small-scale interactions are not visible (or are negligible) at a certain resolution, or restricting the viewframe to eliminate large-scale variance between chromosome arms. It is by definition a speculative exercise to interpret the biological relevance of an observed pattern, but the consensus is to call compartments on interacting regions that arise from the eigendecomposition of a Hi-C matrix without further modifications (Lajoie, Dekker, and Kaplan 2015). Briefly, each genomic bin is assigned a value reflecting its compartment identity, where positive values indicate compartment A and negative values indicate compartment B. The interaction score between two loci is represented as the product of their compartment values, resulting in enriched interactions for loci within the same compartment (positive product) and depleted interactions for loci in different compartments (negative product). This model reproduces the checkerboard pattern characteristic of Hi-C data. Principal component analysis (PCA) identifies the first principal component, which optimally captures the strongest compartment signal. The sign of this component determines whether a locus belongs to compartment A or B. As the eigenvector is only unique up to a sign change, a phasing track is used to orient the eigenvector, aiming for a positive correlation with GC content (in mammals), so that A-compartments represent the active euchromatin, while B-compartments represent the closed heterochromatin.

Compartment Edges and Genomic Intervals

As arbitrarily as a compartment may be defined, we chose to define another genomic interval for analysis. It is well known that CTCF and other structural proteins preferentially binds to Topologically Associating Domains (Bicciato and Ferrari 2022, Ch. 3) (TADs; they were initially defined as sub-Mb chromatin structures (Lajoie, Dekker, and Kaplan 2015), but currently the definition seems to vary based on the method of extraction (Open2C et al. 2022)). Derived from this, we define a transition zone between A/B compartments to look for enrichment of specific regions of interest.

We can test if two sets of genomic intervals correlate (say, compartment edges and ECH regions) by either proximity of the non-intersecting parts of the sets, or by intersection over union (Jaccard index). When the underlying distribution of a statistic (or index) is unknown, a widespread method in statistics for estimating a p-value is by bootstrapping. Here, one of the sets are bootstrapped (the intervals are placed at random positions) a number of times, \(b\), and the fraction of statistics more extreme than the one we observe is reported as the p-value.

Reproducibility Infrastructure

Reproducibility is a cornerstone of the scientific method, ensuring that findings can be independently verified and built upon by others. Without it, the credibility of research findings is undermined, hindering scientific progress by becoming anecdotal. The necessity of reproducibility also aligns with scientific skepticism—the practice of critically evaluating evidence and methods to guard against biases or errors. As research grows increasingly computational, robust reproducibility infrastructure is essential to maintain these principles (Baker 2016), facilitating transparency, validation, and collaboration across diverse scientific disciplines. Thus, apart from the biological questions we seek to investigate and answer in this thesis, a major goal is to create fully (and easily) reproducible results through a self-contained and version-controlled pipeline using git (Torvalds and Hamano 2005), GitHub (GitHub, Inc. n.d.), quarto (Allaire et al. 2024), Anaconda (Anaconda Software Distribution 2016), gwf (GenomeDK 2023), and Jupyter (Kluyver et al. 2016). The result is a fully reproducible analysis, including figures and tables in only 3 lines of code2 (Listing 1) . See Table 2 for a brief overview:

| Tool | Description |

|---|---|

| Jupyter | Interactive coding environment for analysis and development (notebooks are natively rendered with Quarto) |

| Quarto | A Quarto Manuscript project nested inside a Quarto Book for rendering html (website) and PDF (manuscript) from Markdown via Pandoc. Supports direct embedding of output from Jupyter Notebook cells (plots, tables). |

| Conda | For managing software requirements and dependency versions reproducibly. |

| git | Version control and gh-pages branch for automated render of Quarto project |

| GitHub | Action was triggered on push to render the project and host on munch-group.org |

| gwf | Workflow manager to automate the analysis on a HPC cluster, wrapped in Python code. workflow.py currently does everything from .fastq to .cool, but notebooks can be set to run sequentially as part of the workflow as well. |

GWF: workflow management for High-Performance Computing (HPC)

To enable consistently reproducing the analyses, a workflow manager is utilized. Several exist, but the most well-known is likely Snakemake. However, we use the pragmatic (authors’ own words), lightweight workflow manager GWF, which is optimized for the GenomeDK insfrastructure, and has the benefit of in-house support.

Briefly, GWF works on a python script, conventionally workflow.py, that wraps all the jobs (targets in gwf lingo) you will submit to the HPC cluster. Each target is submitted from a template, written as a Python function, which includes inputs and outputs that GWF should look for when building the depency graph, options list of resources that is forwarded to the queueing system (Slurm in our case), and specs, specifying the submission code in Bash as a formatted Python string (meaning we can pass Python variables to the submission code), providing an extremely flexible framework for running large and intensive analyses in a high-performance computing environment.

Project Initialization

gwf

The initialization of the project directory is the basis of reproducibility and transparency, together with workflow.py inhabiting the main directory. Specifically, it includes a subdirectory for (intermediate) files that are produced by the pipeline, steps/. Everything in this directory is reproducible simply by re-running the gwf-workflow. It is thus not tracked by git, as the large files (raw reads, aligned read-pairs, etc.) it contains are already indirectly tracked (workflow.py is tracked). It can be safely deleted if your system administrator tells you to free up disk space, although you would have to run the workflow again to continue the analysis. Several directories are created for files that are not produced by the pipeline, that is, files that the workflow uses, configuration files, figures edited by hand, etc. Ideally, as few files as possible should be outside of steps/, to be as close as possible to an automated analysis.

Jupyter Notebooks

A notebooks/ subdirectory contains Jupyter notebooks that are named chronologically, meaning they operate on data located in either steps/ or generated from a previous notebook. This way, the workflow can also be set up to run the notebooks (in order) to produce the figures, tables, and their captions used in this manuscript.

Quarto

Quarto is an open-source scientific and technical publishing system that uses (pandoc) markdown to create and share production quality output, integrating Jupyter Notebooks with Markdown and LaTeX and enabling embedding content across .ipynb and .qmd. In .qmd, code chunks in several programming languages can be executed and rendered, including Python, R, mermaid (JavaScript-based diagramming). A Quarto project is configured with a YAML configuration file (_quarto.yml) that defines how output is rendered. In this project, we use a nested structure, nesting a Slides project and a Manuscript project inside a Book project. To manage the directory as a Quarto Book project, a quarto configuration file was placed at the base, defining how the Book should be rendered. Additionally, configuration files were placed in slides/ and thesis/, to render them as Quarto Slides and Quarto Manuscript, respectively. This nested structure lets us render different subprojects with different configurations than the main project, for example to generate the manuscript, a single Quarto Markdown file, in both .html and .pdf, and only including embedded outputs from specified cells from notebooks in the parent directory. Although the Quarto framework is extensive, it is still under development and has several drawbacks worth mentioning. First, one can only embed the output of code cells from notebooks, meaning the only way to embed text with a python variable (e.g. you want the manuscript to reflect the actual value of a variable, sample sizes n = [1000, 10000, 100000], and their respective outputs) is by converting a formatted python string into Markdown and send it to the output. Second, embedded figures will be copied as-is in the notebook, and thus cannot be post-processed with size or layout. This makes it impractical to e.g. use the same figures in slides and in the manuscript. Third, when rendering large projects that is tracked by git, some output files (that have to be tracked to publish the website) can exeed GitHub size limits. Especially if rendering in the jats format, producing a MECA Bundle that should be the most flexible way to exchange manuscripts. However, as not applicable to this thesis, the option was simply disabled. Fourth, some functionality relies on external dependencies that cannot be installed on a (linux) remote host (GenomeDK), such as relying on a browser for converting mermaid diagrams into png for the pdf-manuscript.

git and GitHub

To track the project with git and GitHub, the abovementioned structure was initialized as a GitHub repository, including a workflow for GitHub Actions to publish and deploy the website on the gh-pages branch when pushing commits to main. Briefly, it sets up a virtual machine with Quarto and its dependencies, renders the project as specified in the _quarto.yml configuration file(s), and publishes the project on the group website munch-group.org.

Methods

All computations were performed on GenomeDK (GDK), an HPC cluster located on Aarhus Uninversity, and most of the processing of the data was made into a custom GWF workflow, a workflow manager developed at GDK.

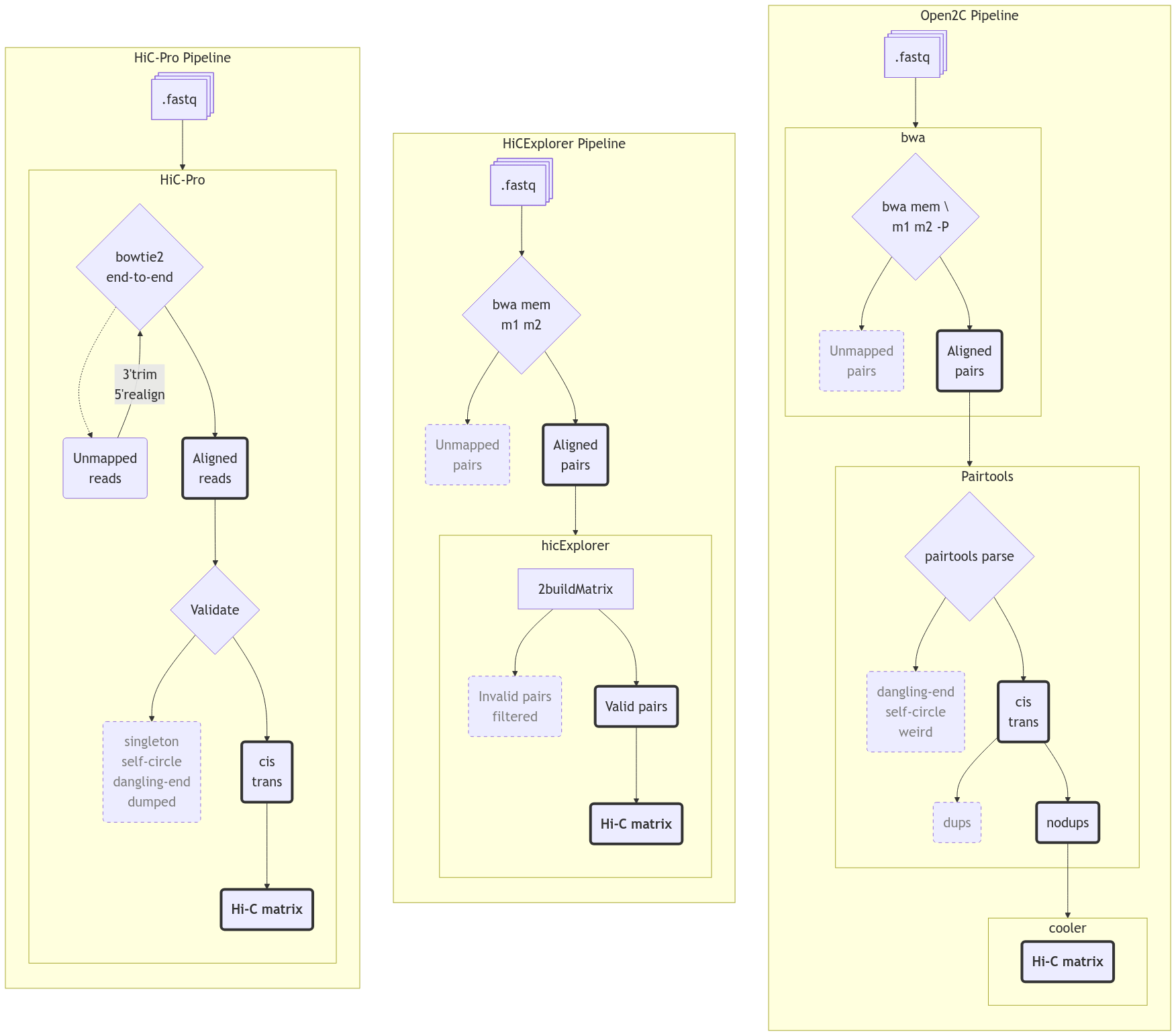

With the analysis tools determined in the above section, I decided it was not feasible to follow the exact approach as Wang et al. (2019) with any of HiCExplorer and Open2C, as they use a third software, HiC-Pro. For mapping the raw reads, Hic-Pro internally uses bowtie2 in end-to-end mode, followed by trimming the 3’-end of the unmapped reads, then remapping the 5’-ends to rescue chimeric fragments. I initially mapped the reads using end-to-end Bowtie2 without the rescue-remapping feature, resulting in a very high fraction of discarded reads. Manually implementing the remapping approach would be impractical. When reanalyzing data, it is important to use state-of-the-art tools. Considering the release timeline (e.g., HiC-Pro v3.1.0 in 2021), both HiCExplorer and Open2C are more recent and likely offer better support for current methodologies. Additionally, the HiC-Pro pipeline stops at a normalized contact map, and is thus not sufficient for downstream analysis. In hindsight, it would have been more sensical to use HiC-Pro to get normalized contact maps, then continue analyzing with cooler/cooltools, and finally compare the results evenly with the results achieved from using Open2C from start to finish. Figure 4 gives an overview of the 3 pipelines metioned in this report.

Fetching raw data

To reproduce the results from Wang et al. (2019), I chose to use their raw data directly from the SRA portal (Sayers et al. 2022). I filtered the data to contain all their paired-end Hi-C reads, and included only macaque samples. The dataset also contains RNAseq data, and the same tissues for both macaque and mouse. The metadata for the dataset was extracted into a runtable SRA-runtable.tsv. To get an overview of the data accessions used in this analysis, we will first summarize the runtable that contains the accession numbers and some metadata for each sample (Table 3). It adds up to ~1Tb of compressed fastq files, holding ~9.5 billion reads, roughly evenly spread on the 5 tissue types.

Fetching and indexing the reference

Wang et al. (2019) use the 2006-version of the macaque reference, rheMac2. Supporting my previous sentiment about not using outdated resources I find it most reasonable to use the latest reference, rheMac10, where Warren et al. (2020) have improved contiguity from rhemac8 by 120 fold, going from N50 contig size of 107 Kbp to 46 Mbp. Reproducing the analysis on the latest assembly of the macaque genome should therefore gain a more accurate read mapping, and consequently a better inference of the chromatin compartments. Therefore, rheMac10 was downloaded to GDK from UCSC web servers. To use bwa for mapping, rheMac10 needs to be indexed with both bwa-index with the --bwtsw option and samtools-faidx, which results in six indexing files for bwa-mem to use. Both bwa-mem and bowtie2 were used in different configurations, and bowtie2 requires its own indexing of the reference, using bowtie2-build with the --large-index option, which creates six index files for bowtie2 to use. The options --bwtsw and --large-index create the special indexing format required for large genomes such as macaque. As we use the position of the centromere to partition chrX into its two arms, and no comment about it was made by Warren et al. (2020), the centromeric region was inferred (visually) from the UCSC browser view of rheMac10, where a large continuous region (chrX:57.5Mb-60.2Mb) had no annotation and showed many repeating regions. The region is roughly the same region as inferred by Wang et al. (2019) by the same method.

HiCExplorer trials

To get aligned reads in a format compatible with HiCExplorer, the read mates have to be mapped individually to the reference genome. This supports the old convention to avoid the common heuristics of local aligners used for regular paired-end sequencing libraries (Lajoie, Dekker, and Kaplan (2015)). HiCExplorer provide examples for both bwa and bowtie2, so I used both with recommended settings. In both cases, the aligner outputs a .bam-file for each mate (sample_R1.bam and sample_R2.bam), and HiCExplorer performs the parsing, deduplication, and filtering of the reads and builds the raw interaction matrix in a single command,

hicBuildMatrix -s sample_R1.bam sample_R2.bam -o matrix.h5 [...]Figure 3 (middle) shows an overview of the pipeline. For parsing, the command needs a --restrictionCutFile, locating the restriction sites from the restriction enzyme used on the reference genome, which is generated with hicFindRestSites that operates on the reference genome and restriction sequence. The default filter, --minMappingQuality 15, was applied as described in Section 1.4.2.3. Notably, HiCExplorer has no options on handling multiple ligations, and the method is undocumented. I assume that Ramírez et al. (2018) have implemented a conservative handling (masking all multiple ligations), as that would have the least consequence.

For the initial exploration of methods with HiCExplorer, I chose five fibroblast samples (see Table 4). The goal was to replicate some of the figures from Wang et al. (2019) using HiCExplorer, especially to reconstruct interaction matrices and E1 graphs from macaque data. We constructed matrices with hicBuildMatrix as described from the separately mapped read-pairs. Along with the matrix .h5 file, a .log file was created as well, documenting the quality control for the sample. Multiple logs were aggregated and visualized with hicQC.

Before correction (or balancing) of the interaction matrix, a pre-correction filter is applied, filtering out low-count bins and very high-count bins. A threshold for Mean Absolute Deviation (MAD) is estimated by hicCorrect diagnostic_plot, followed by iterative correction with hicCorrect correct --correctionMethod ICE. The PCA was performed with hicPCA on the corrected matrices, yielding the first 3 PCs.

The matrix plotting function, hicPlotMatrix, plots matrices directly to .png, and so there is no output sent to the Jupyter display. As I kept the analysis in a Jupyter Notebook (using the buikt-in shell-escape commands to execute bash code), the plot files must be embedded back into the notebook. The command-line options for modifying plots are quite limited, such as adding spacing for a bigWig track with E1 values, including plot titles, or defining the size and resolution of the plot. I made a brief attempt to implement a custom plotting function for the .h5 matrices and bigWig tracks. However, this approach had a major limitation: it could not fetch specific regions from the matrix dynamically and instead required loading entire matrices into memory, including full-length chromosomes.

Open2C pipeline

A GWF workflow was created to handle the first part of the data processing, and each accesion number (read pair, mate pair) from the Hi-C sequencing was processed in parallel, so their execution was independent from each other. See Figure 3 (right) for an overview of the initial steps.

Downloading the reads

The reads were downloaded from NCBI SRA with SRA-toolkit (DevTeam 2024) directly to GDK using the docker image wwydmanski/sra-downloader as gunzipped .fastq files. Although possible to provide a list of accessions to the toolkit, I submitted each accession as a separate target, as SRA-Toolkit acts sequentially, and only starts the next download after all compression tasks were done. It was therefore a low-hanging fruit to parallelize the download for efficiency.

Mapping Hi-C reads

Suspiciously, (“Open2C” n.d.) do not mention any problems with aligning the Hi-C reads, they just provide an example using bwa-mem in paired-end mode and with the -P option set, which activates the Smith-Waterman (Li 2013) algorithm to rescue missing hits, by focusing on assigning only one of the mates to a good mapping and escape mate-rescue. The documentation of bwa state that both bwa-mem and bwa-sw will rescue chimeric reads. Consequently, Open2C does not have a buikt-in way of pairing the reads after mapping, and the reads were thus mapped using Open2C’s recommendations, using their established pipeline for producing a cooler. I chose the latter, where I mapped the fastq files to rheMac10 in paired end mode for a pair (\(m1\), \(m2\)) with bwa mem -SP rheMac10 m1 m2.

Parse and sort the reads

We need to convert the alignments into ligation events, and distinguish between several types of ligation events. The simplest event is when each side only maps to one unique segment in the genome ‘UU’. Other events, where one or both sides map to multiple segments or the reads are long enough (\(>150bp\)) to contain two alignments (multiple ligations) have to be considered as well. Multiple ligations are called walks by Open2C, and are treated according to the --walks-policy when parsing the alignments into valid pairs (or valid Hi-C contacts). Here, mask is the most conservative and masks all complex walks, whereas 5unique and 3unique reports the 5’-most or 3’-most unique alignment on each side, respectively, and all reports all the alignments. The pairs are piped directly into pairtools sort after parsing, as the deduplication step requires a sorted set of pairs. The .pairs-format produced by pairtools is an extension the 4DN Consortium-specified format, storing Hi-C pairs as in Table 5.

| Index | Name | Description |

|---|---|---|

| 1 | read_id | the ID of the read as defined in fastq files |

| 2 | chrom1 | the chromosome of the alignment on side 1 |

| 3 | pos1 | the 1-based genomic position of the outer-most (5’) mapped bp on side 1 |

| 4 | chrom2 | the chromosome of the alignment on side 2 |

| 5 | pos2 | the 1-based genomic position of the outer-most (5’) mapped bp on side 2 |

| 6 | strand1 | the strand of the alignment on side 1 |

| 7 | strand2 | the strand of the alignment on side 2 |

| 8 | pair_type | the type of a Hi-C pair |

| 9 | mapq1 | mapq of the first mate |

| 10 | mapq2 | mapq of the second mate |

I initially used --walks-policy mask, and later followed the recommendations from pairtools, specifically informing that longer reads (\(>150bp\)) might have a significant proportion of reads that contain complex walks. With this in mind and as the average read-length of our data is 300 bp, I decided to re-parse the alignments into a new set of pairs, and equally apply the recommended filter (next section). As both results are saved, we can compare the two approaches.

Filter and deduplicate pairs

Pairtools comes with a de-duplication function, dedup, to detect PCR duplication artefacts. At this point I removed all reads that mapped to an unplaced scaffold. Even though rhemac10 is less fragmented than rhemac8 , rheMac10 still contains more than 2,500 unplaced scaffolds, which are all uninformative when calculating the chromatin compartments as is the goal of this analysis. Therefore, we simply only include the list of conventional chromosomes (1..22, X, Y) when doing the deduplication. Initially, the default values were used to remove duplicates, where pairs with both sides mapped within 3 base pairs from each other are considered duplicates. cooler recommend to store the most comprehensive and unfiltered list of pairs, and then applying a filter it on the fly by piping from pairtools select. The first run that was parsed with mask was not filtered for mapping quality. After reparsing the alignments and applying the same analysis, we compare the two pipelines. A quality control report is generated by pairtools dedup as well, and the reports are merged and visualized with MultiQC (Ewels et al. 2016) for each cell type.

Create interaction matrices (coolers)

The final part of the GWF workflow takes .pairs as input and outputs a .cool file (a cooler). Initially, I read directly from the newly generated deduplicated pairs without additional filtering, but the official recommendation is to filter out everything below \(mapq = 30\) by piping the pairs through pairtools select "(mapq1>=30) and (mapq2>=30)" to cooler cload pairs. I re-parsed the alignments and created new coolers, including only the Hi-C contacts where \(mapq \leq 30\), following the current recommendations from Open2C.

Pooling samples (Merging coolers)

The samples are grouped into replicates with a unique BioSample ID, and I chose to pool all the interaction matrices for each cell type. Even though it is slightly unclear how their replicates are defined, Wang et al. (2019) determine compartments to be highly reproducible between replicates, and they are pooling the replicates as well.

cooler merge was used to merge all samples in each cell-type directory to just one interaction matrix for each cell type. The function merges matrices of the same dimensions by simply adding the interaction frequencies of each genomic position together, resulting in less empty or low-count bins.

Create multi-resolution coolers (zoomify)

A feature of working inside the ecosystem of Open2C is that it natively provides support for storing sparse interaction matrices in multiple resolutions in the same file by adding HDF5-groups to the (multires-)cooler. We can then efficiently store resolutions (i.e., different bin sizes) that is multiples of the smallest bin size. We chose to use 10kb, 50kb, 100kb, and 500kb bins, and the resolutions are made by recursively binning the base resolution. Abdennur and Mirny (2020) call this process zoomifying, and cooler zoomify does the job (it recursively calls cooler coarsen to merge bins).

Matrix balancing (Iterative correction)

Finally, we balance (or correct) the matrices using the cooler CLI. We use cooler balance with the default options which iteratively balances the matrix (Iterative Correction).

We balance the matrices on each resolution, and thus it cannot be done prior to zoomifying. Abdennur and Mirny (2020) state that the balancing weights are resolution-specific and will no longer retain its biological meaning when binned with other weights. Therefore, we apply cooler balance to each resolution separately. cooler balance will create a new column in the bins group of each cooler, weight, which can then be included or not in the downstream analysis. This means we will have access to both the balanced and the unbalanced matrix.

The default mode uses genome-wide data to calculate the weights for each bin. It would maybe be more suitable to calculate the weights for cis contacts only, and that is possible through the --cis-only flag, and that can be added to another column, so that we can compare the difference between the two methods easily. However, when adding the option, the process seemed to stall and had to be terminated manually, and it was not investigated further.

Eigendecomposition

The eigendecomposition of a Hi-C interaction matrix is performed in multiple steps. As value of the eigenvector is only significant up to a sign, it is convention to use GC content as a phasing track to orient the vector. E1 is defined to be positively correlated with GC content, meaning a positive E1 value signifies an active chromatin state, which we denote a A-type compartment (or simply A-compartment). We performed eigendecomposition of two resolutions, 100 kbp and 500 kbp. Wang et al. (2019) briefly describes their method to calculate the eigenvectors as a sliding window approach on the observed/expected matrix in 100 kb resolution summing over 400 kb bins with 100 kb step size, a method I was not able to replicate in the Open2C ecosystem. I decided to mimic this by smoothing the 100 kb E1 values by summing to 500 kb bins in steps of 100 kb, yielding a comparable resolution which I denote ‘pseudo-500 kb’ resolution (ps500kb).

First, we calculate the GC content of each bin of the reference genome, rheMac10, which is binned to the resolution of the Hi-C matrix we are handling. It is done with bioframe.frac_gc (Open2C). To calculate the E1 compartments, we use only within-chromosome contacts (cis), as we are not interested in the genome-wide contacts. cooltools.eigs_cis will decorrelate the contact-frequency by distance before performing the eigendecomposition. eigs_cis needs a viewframe (view) to calculate E1 values, the simplest view being the full chromosome. However, when there is more variance between chromosome arms than within arms, the sign of the first eigenvector will be determined largely by the chromosome arm it sits on, and not by the chromatin compartments. To mitigate this, we apply a chromosome-arm-partitioned view of the chromosome.

Additionally, to mimic the Local PCA from (Wang et al. 2019), I also defined a view of 10 Mb bins. Thoughout the project, I will compare results from each of the three views and resolutions, and they will be referred to as ‘full’, ‘arms’, and ‘10Mb’ views.

Plotting matrices

We use matplotlib (Team 2024) and seaborn (Waskom 2021) to plot in the Open2C framework. Utilizing the Cooler class, we can fetch regions of the matrix without modifying the file. As my analysis is centered around the X chromosome, it is efficiently handled by simply fetching ‘chrX’ from the matrix with cooler.Cooler.matrix().fetch('chrX'). Many methods of the cooler class returns data selectors, which do not retrieve data before it is queried (Abdennur and Mirny 2020). This means we can create many selectors at once without overflowing memory, enabling us to plot multiple interaction matrices side-by-side, e.g. the corrected and un-corrected matrices. This is easily done with the balance parameter of the matrix selector (.matrix()), which determines if it should apply the balancing weights to the coordinates and defaults to True.

The matrix is retrieved and plotted with matplotlib.pyplot.matshow, which automatically produces a heatmap image of the matrix. Here, instead of transforming the interaction matrix, the color scale is log-transformed with matplotlib.colors.LogNorm. Additionally, cooltools comes with more tools to aid visualization: adative coarsegrain and interpolation, which can be chained. adaptive_coarsegrain iteratively coarsens an array to the nearest power of two and refines it back to the original resolution, replacing low-count pixels with NaN-aware averages to ensure no zeros in the output, unless there are very large regions that exceed the max_levels threshold, such as the peri-centromeric region.

I implemented a plotting utility, plot_for_quarto in notebook 07_various_plotting.ipynb that is compatible with the YAML cell-options read by Quarto’s embed shortcode. It will take an arbitrary number of samples and plot a chromosome (or region) with or without its respective E1 value for either of the three viewframes which has been created. The input is a (subsetted) pandas DataFrame, defined from a file search matching a pattern specified to the glob Python module.

Genomic Intervals

Full Regions, Edges, and Limits

From the eigenvectors, the A-compartments were extracted in bedgraph-format (['chrom', 'start', 'end']) and compared with ECH90 regions lifted to rheMac10 from human. We perform visual inspection of the genomic intervals and test whether ECH90 regions are enriched near the edges of the compartments by defining a 200 kilobase transition-zone centered at each sign change of E1 (referred to as compartment edge). We compare genomic intervals (or sets) both visually by plotting the regions, and by a proximity test and bootstrapping the Jaccard index. Additionally, the compartment limits were invetigated (only 1 flanking base pair) in the same manner. Additionally, the limits were extracted from the baboon datasets and lifted to rheMac10 for comparison.

Proximity test

Determines whether the non-overlapping segments of the sets are more proximal than expected by chance. We define the annotation set and the query set, and the distance from each interval on the query to the most proximal interval on the annotation is used to generate an index of proximity by the mean distance to nearest interval in the annotation. Then, bootstrapping (\(b = 100000\)) is performed by randomly placing the query intervals to generate the null distribution, and finally, the fraction of the null as extreme or more extreme as our observed proximity is reported as the p-value.

Jaccard test

Measures the significance of the observed Jaccard index (intersection over union) between two sets. The index is a measure of similarity (\(intersection/union\)) between two sets (between 0 and 1), which is very sensitive to the size difference between the sets, as even when comparing a set of intervals to a small subset of itself will yield a very small Jaccard index. When we use bootstrapping to generate a null distribution (shuffling the intervals of the query), we find the probability that the two sets (with their respective number and size of intervals), are as similar or more than what we observe. The ratio is reported as the p-value. However, this approach is still sensitive to flipping of query/annotation (if the regions are not the same size), as only the query is bootstrapped.

Multiple testing

Considerations were made to avoid multiple testing biases (p-hacking): Performing tests on all combinations of variables (cell type, resolution, viewframe, query) will yield 90 p-values for each test, and we would have to adjust the significance threshold (with \(\alpha = 0.05\), we expect 4 tests passing the threshold by chance). However, I will narrow down the parameter space based on visualizations and reasoning, leaving fewer combinations to test. I do not find it necessary to correct the conventional significance threshold, \(\alpha=0.05\).

Results

HicExplorer Trials

Quality Control

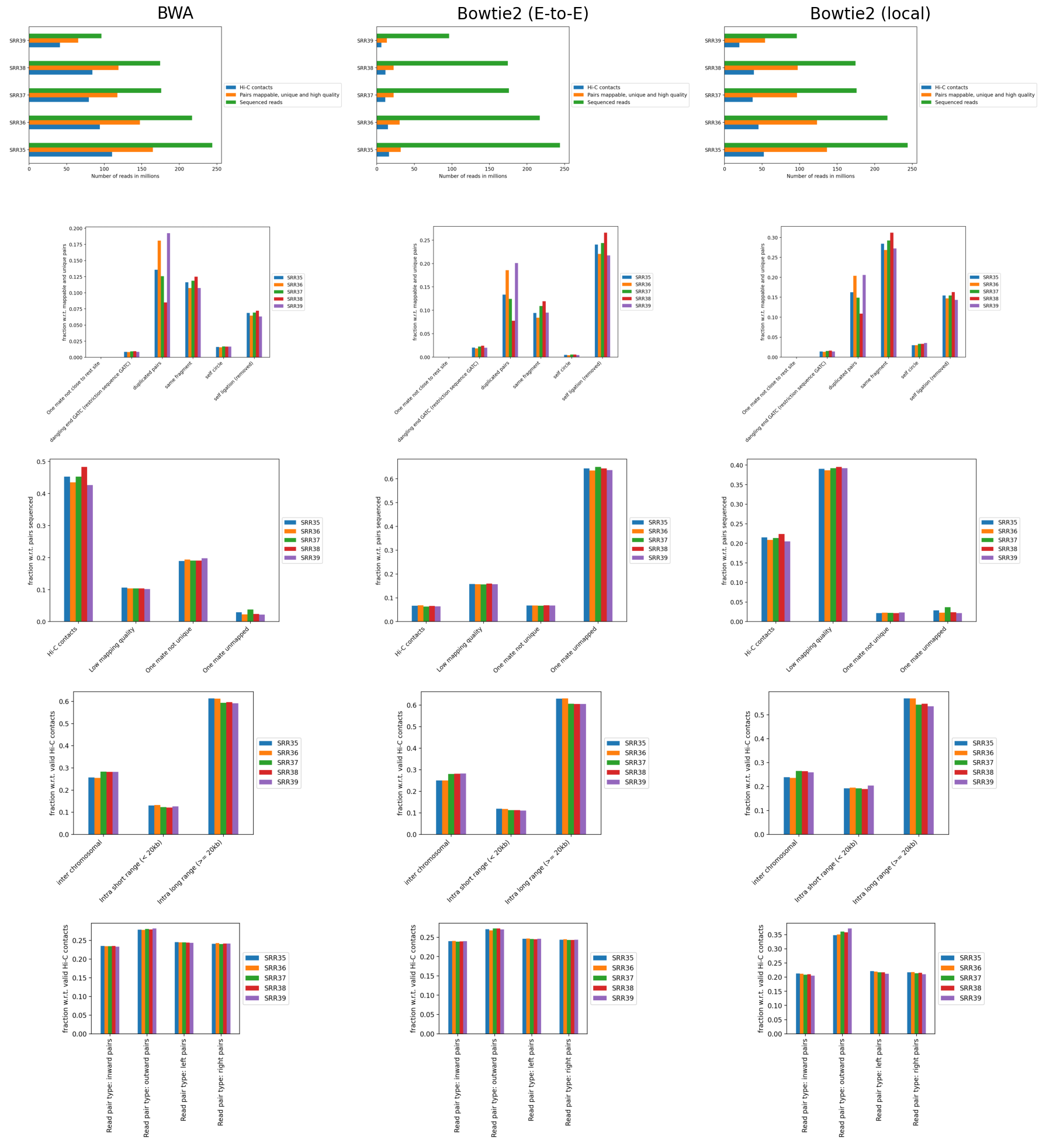

The separately mapped read-mates were parsed into a .h5 interaction matrix by hicBuildMatrix, which include a .log file documenting the buikt-in quality control (hereafter, QC). Log files from the 5 samples were merged with hicQC (Figure 5). I observe equal fractions of the read-orientation of read-pairs (Figure 5, left, row 5), which is expected for a good Hi-C library. Additionally, it determines between 40% to 50% of the total reads to be valid Hi-C contacts (Figure 5, left, row 1), which is usually only 25%-40% (as described in HiCExplorer docs). Figure 5 (left, row 4) shows, however, unusually high fractions of inter-chromosomal contacts (up to 30%) compared to intra-chromosomal contacts (also denoted trans and cis contacts, respectively). It is expected that cis contacts are orders of magnitude more frequent than trans contacts (Bicciato and Ferrari 2022, 2301:236; Lieberman-Aiden et al. 2009), and HiCExplorer states it is usually below 10% for a high-quality library. The high fraction may be mitigated by enforcing a stricter mapq threshold for a valid Hi-C pair, as we also observe higher-than expected valid contacts. However, we continue with the current matrices.

To compare how well these mappings perform, the plotting QC results is an easy way. Therefore, the reads were mapped with bowtie2 in both end-to-end- and local-mode followed by hiCBuildMatrix, and the QC from each method was plotted next to each other (Figure 5). Interestingly, bowtie2 was much more computer-intensive in both modes, perhaps because of the --very-sensitive option. In any case, the QC reveals a major difference in the total number of reads that are determined to be valid Hi-C contacts by hicBuildMatrix. As expected, mapping with end-to-end-bowtie2 makes locating Hi-C contacts more difficult than the other methods (Figure 5, row 1), finding a very low amount of mappable, unique pairs passing the quality threshold. In contrast, mapping with local-bowtie2 performs similarly to bwa in finding mappable, unique, high-quality pairs, but calls only approximately half the number of valid Hi-C contacts (>20%), resulting in a fraction of valid Hi-C pairs that hits the expectation from HicExplorer docs (row3). With bwa, the reads were discarded either due to low mapping quality or non-unique mates, whereas with local-bowtie2, the reads were almost exclusively filtered out due to low mapping quality. This must be a result of how the mappers assign mapping quality, and I believe local-bowtie2 looks suspiciously selective in finding unique but low quality alignments. end-to-end-bowtie almost exclusively filters out read-pairs where one mate is unmapped, which is expected when the majority of reads are unmapped.

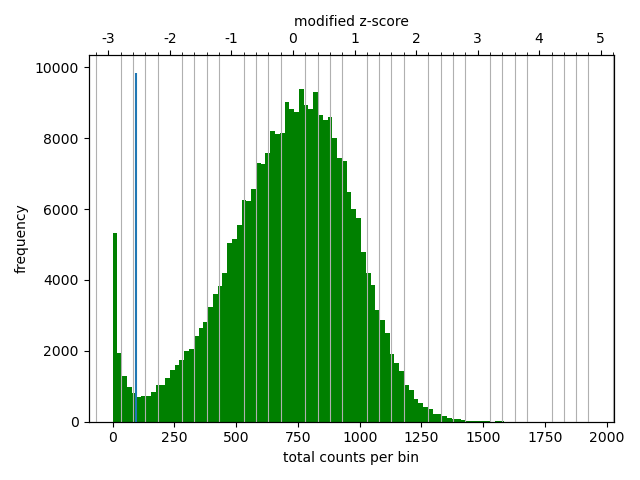

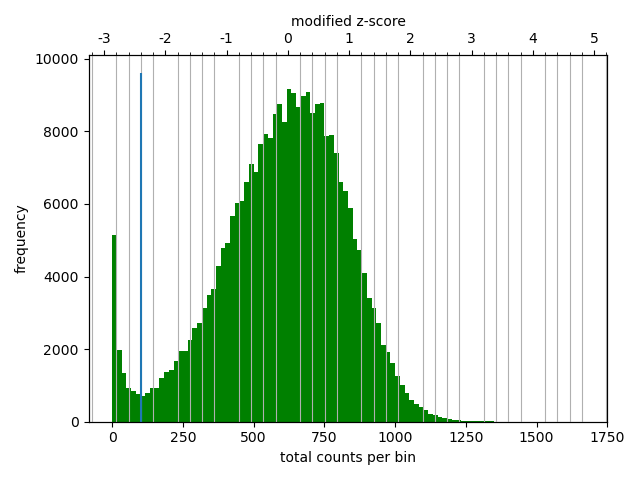

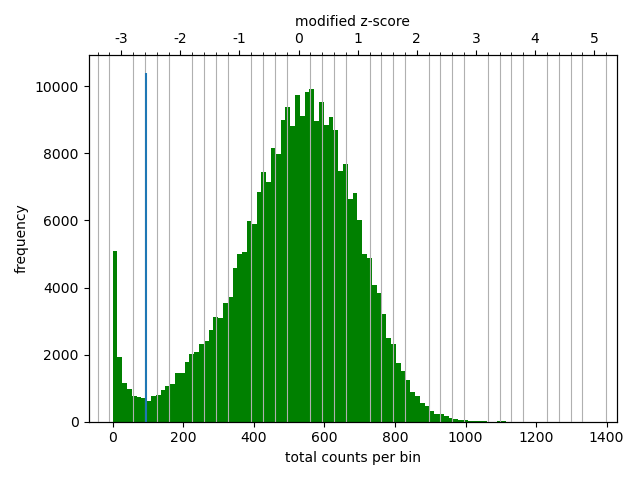

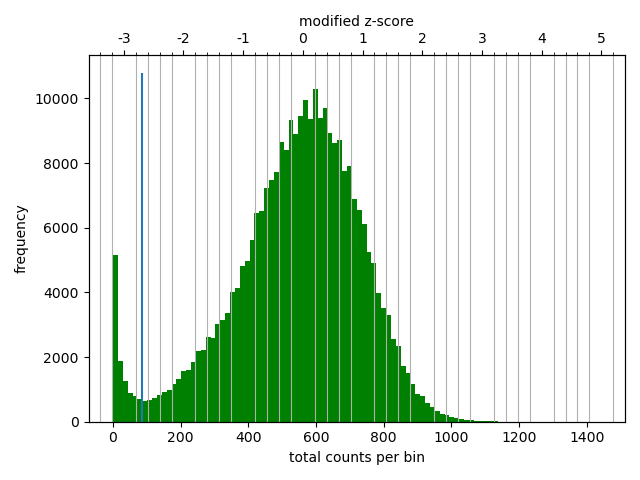

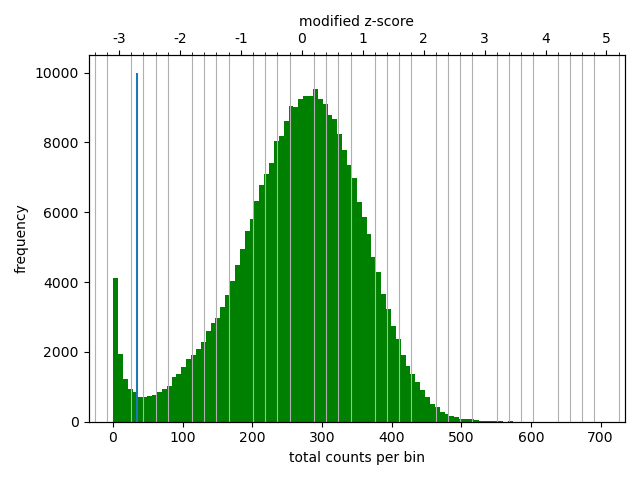

Correction

The correction diagnostic tool yielded a similar mad threshold within the range \([-3,-2]\). Even so, I followed the HicExplorer recommendation to set the lower threshold to at least -2 and the upper threshold to 5 in the pre-normalization filter. I argue that with a high number of valid contacts, it is safer to err on the side of caution and maybe filter out bad data.

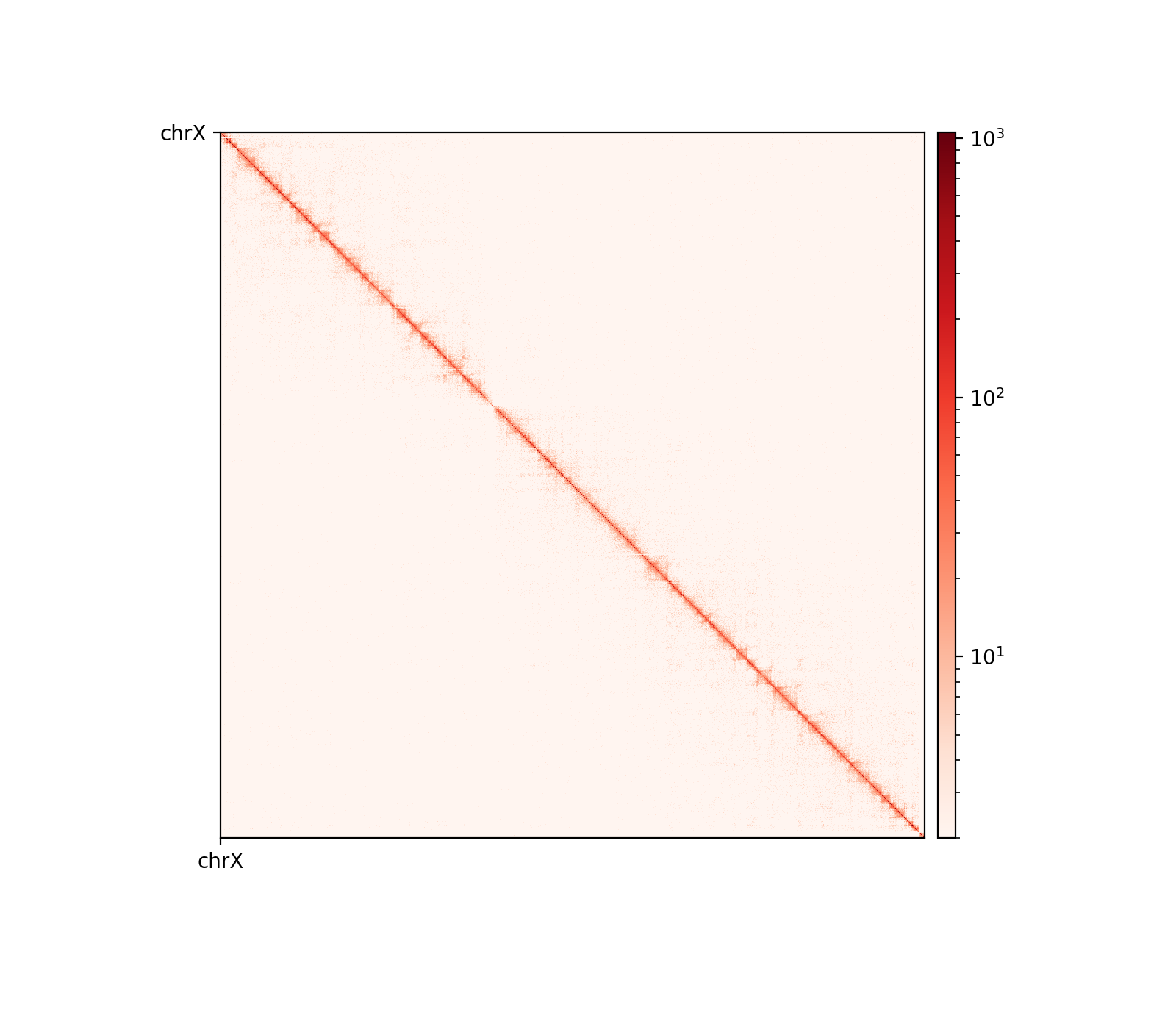

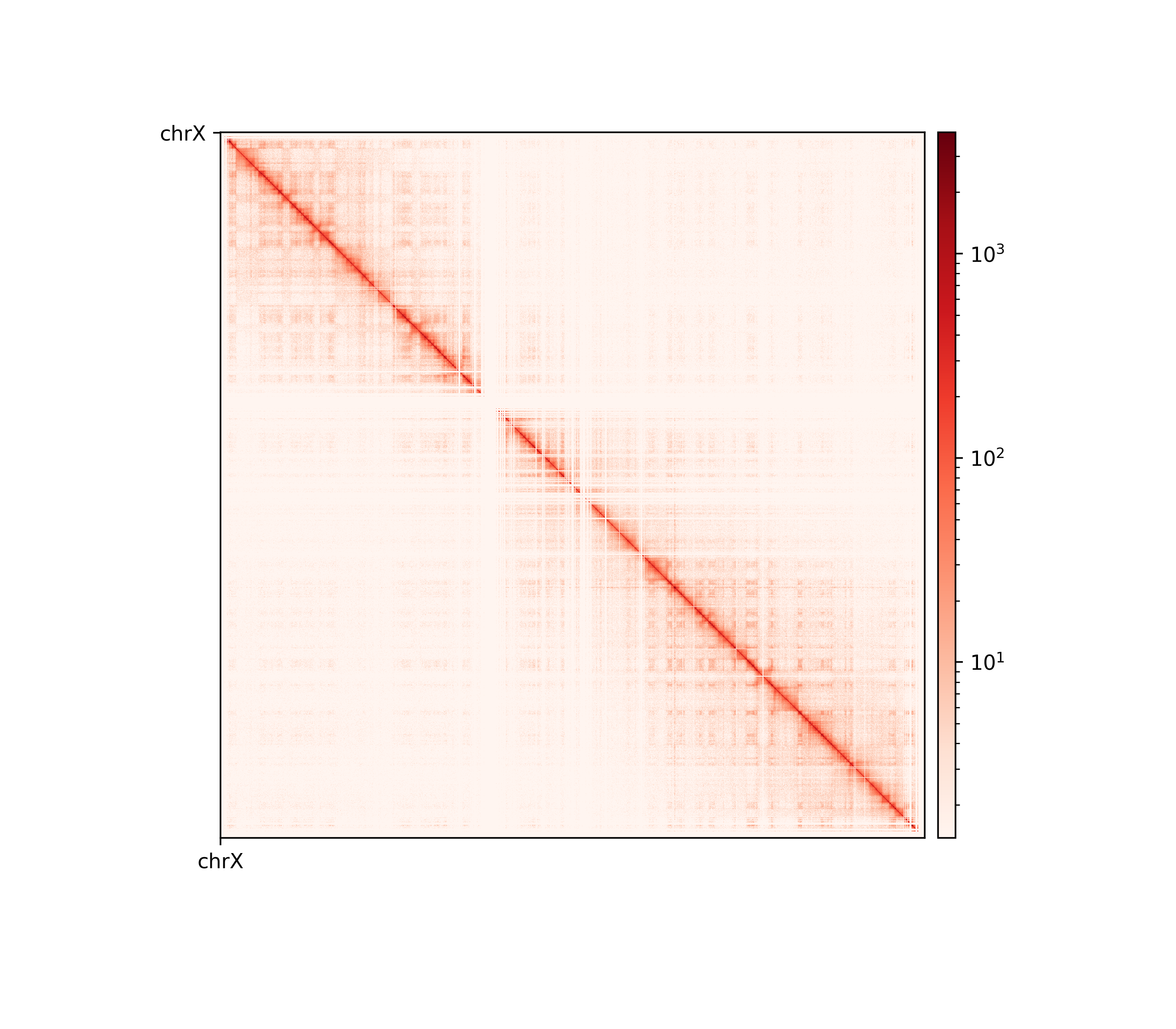

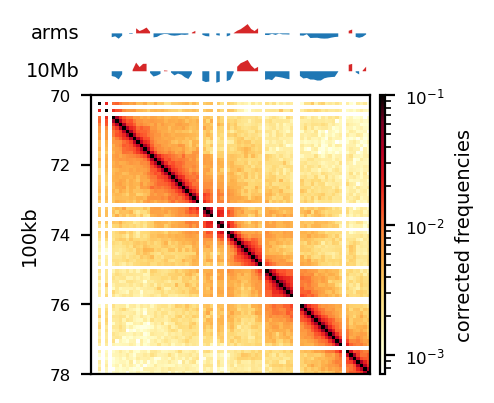

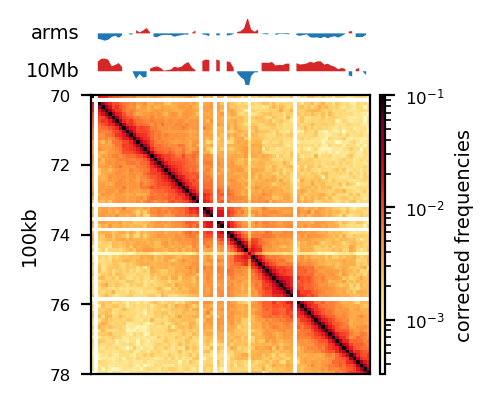

The five samples were pooled with hicSumMatrices, and the non-standard contigs (unplaced scaffolds) were filtered out, and the different resolutions were created (hicMergeMatrixBins). HiCExplorer also comes with a normalization function prior to correcting the matrix, which should be applied if different samples should have comparable bin counts. It has no effect when having only one matrix. Nevertheless, the pooled matrix was normalized and then corrected compared in Figure 7. It is now obvious why we have to correct the matrix. The uncorrected (Figure 7 (a)) has no signal apart from the diagonal. Even though some bins have been filtered out, the expected plaid pattern of a contact matrix is visible along the diagonal after the correction (Figure 7 (b)), leaving evidence for chromatin structure, especially in the first 50 million bases of the chromosome. There is a wide region of empty values at the place of the centromere.

Eigenvectors

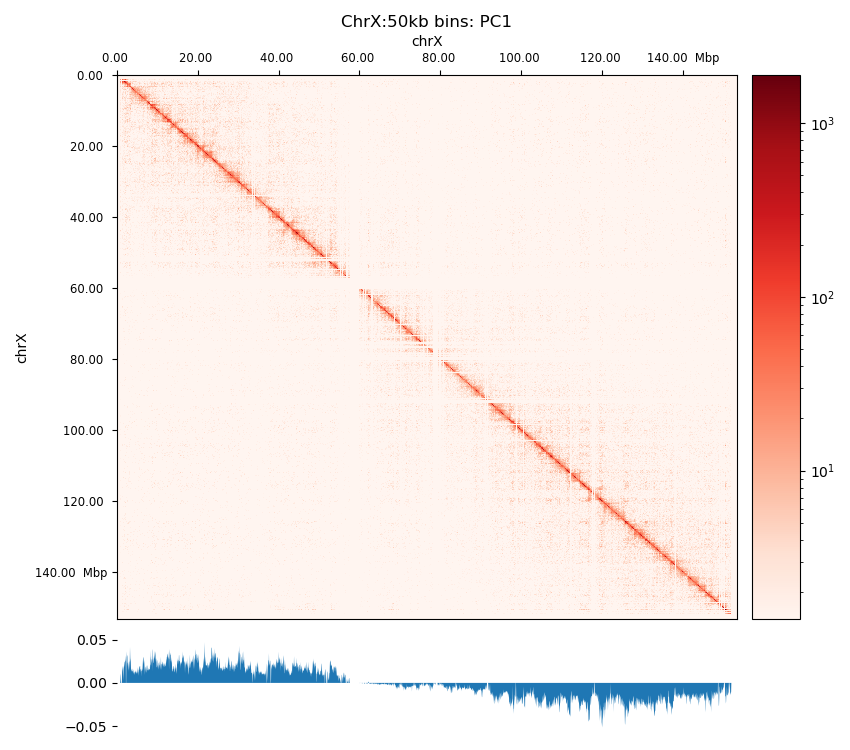

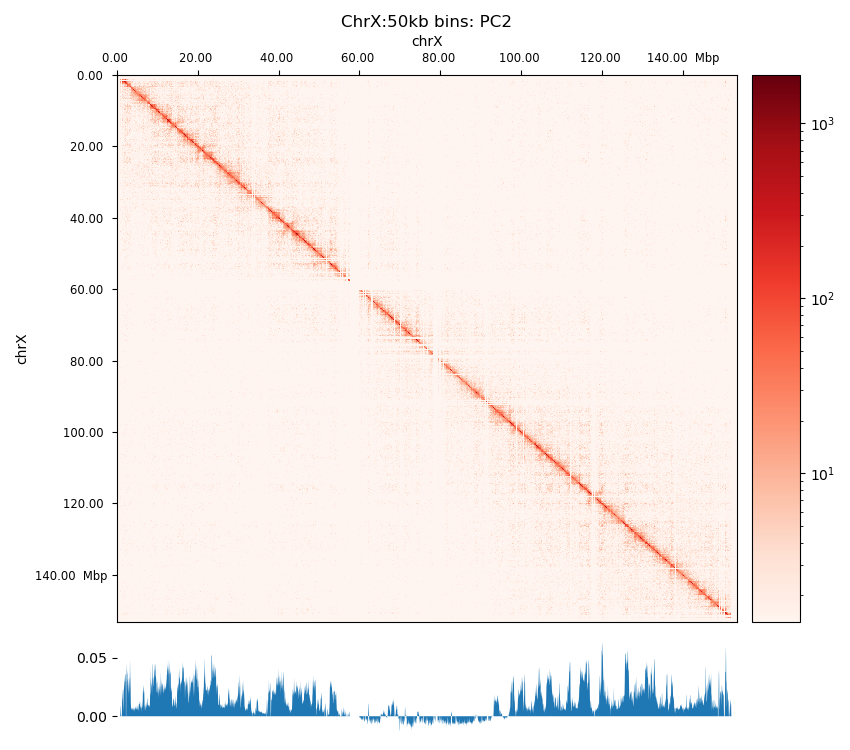

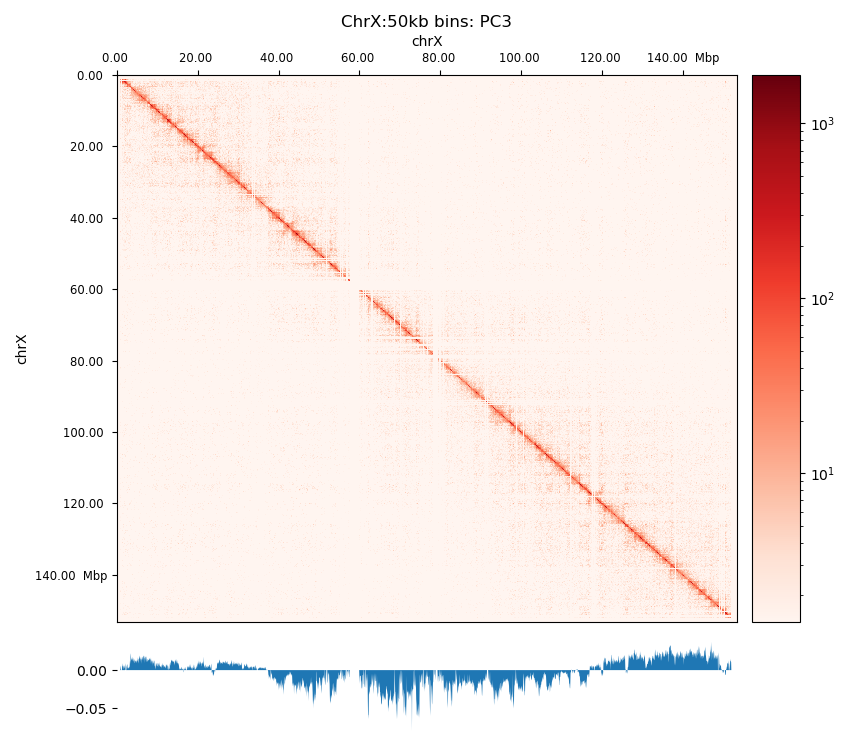

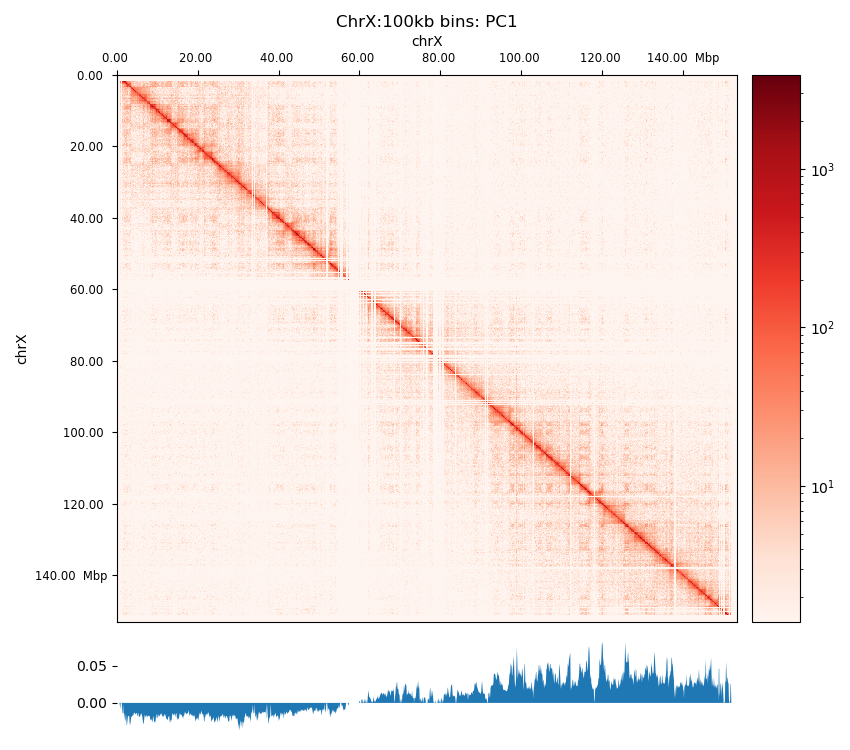

The PCA performed by hicPCA on the pooled samples at both 50kb and 100kb resolution yielded the first 3 principal components. For PC1 on both resolutions (Figure 8 (a), Figure 8 (d)) we observe only a single sign change which occurs at around 60 Mbp, the region of the centromere. It means the PCA has captured more variance between the chromosome arms than within them, making it uninformative about chromatin compartments. Upon visual inspection, it is clear that neither of the PC graphs capture the pattern of the interaction matrix by its change of sign. It seems the PCs capture variance from a bias that varies slowly and predictably along the chromosome. The first PC that is supposed to capture the compartments very suspiciously changes sign at the region of the centromere, a classic problem that could be solved by restricting the values from which the PC is calculated along the chromosome. Unimpressed, I rationalize that the option --extra-track to provide a gene track or histone coverage should not affect this result much. It should be provided as a phasing track to orient the eigenvector to positively correlate with gene density or histone marks, and could possibly muddle the compartments if not included. I followed HiCExplorer pipeline to plot and explore the matrices. At this point, I stoppped using HiCExplorer, as I assessed that a more flexible tool was needed.

Open2c ecosystem

Quality Control

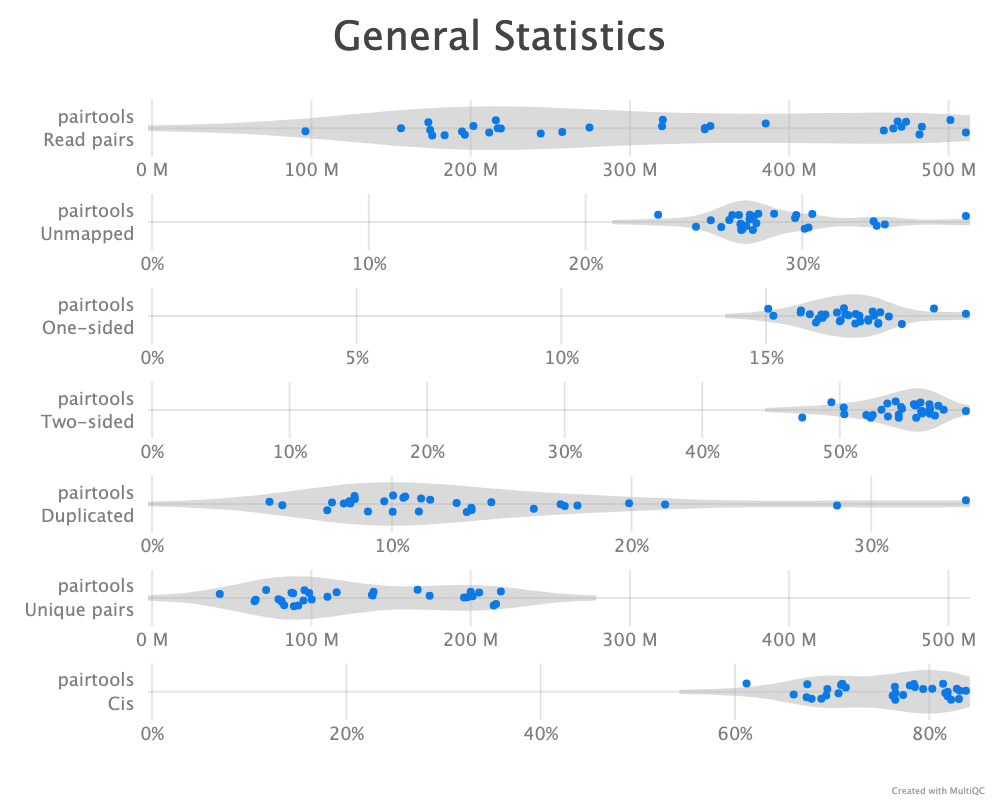

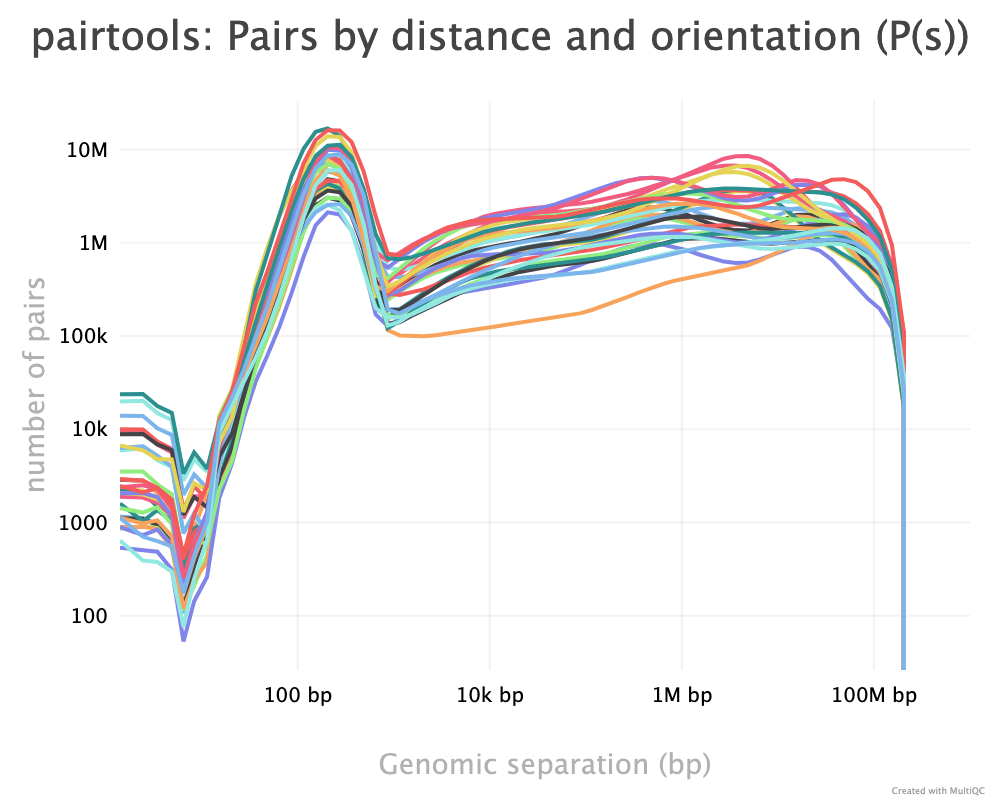

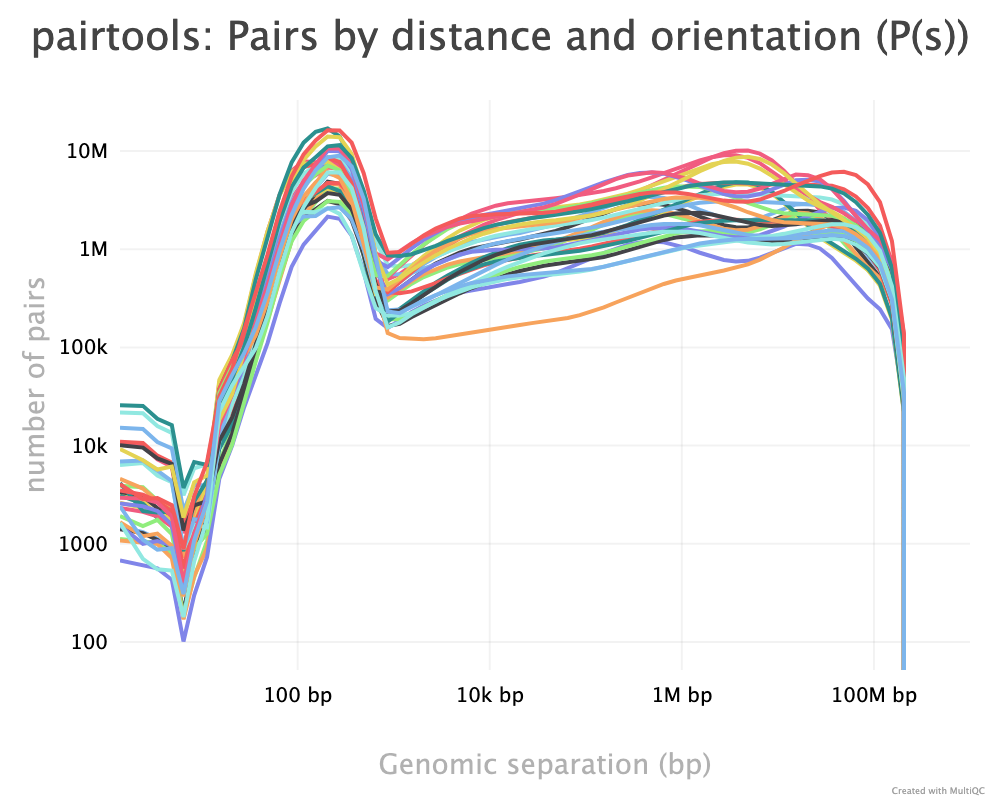

As described, the pairtools module in MultiQC was used to visualize results from pairtools stats for the two parsing runs, see Figure 9. The peak at around 250 bp is a techical artefact and should not be parsed into valid contacts.

--walks-policy mask

Comparing the multiQC report for each of the cell sources show similar distributions of unmapped (both sides unmapped), one-sided (one side mapped), two-sided (both sides mapped), and duplicated (w.r.t. total mapped) reads. The percentage of cis pairs w.r.t. mapped pairs is around 70% for all samples (Figure 9 (a)). The valid pairs also show similar distributions of pair types divided into 10 categories. The \(P(s)\) curve looks similar for all samples as well, peaking around 250 bp separation (Figure 9 (c)). The QC does not show any information about mapping quality of the reads. Note that the \(P(s)\) curve arise from pre-filtered pairs, meaning it provides information about the Hi-C library.

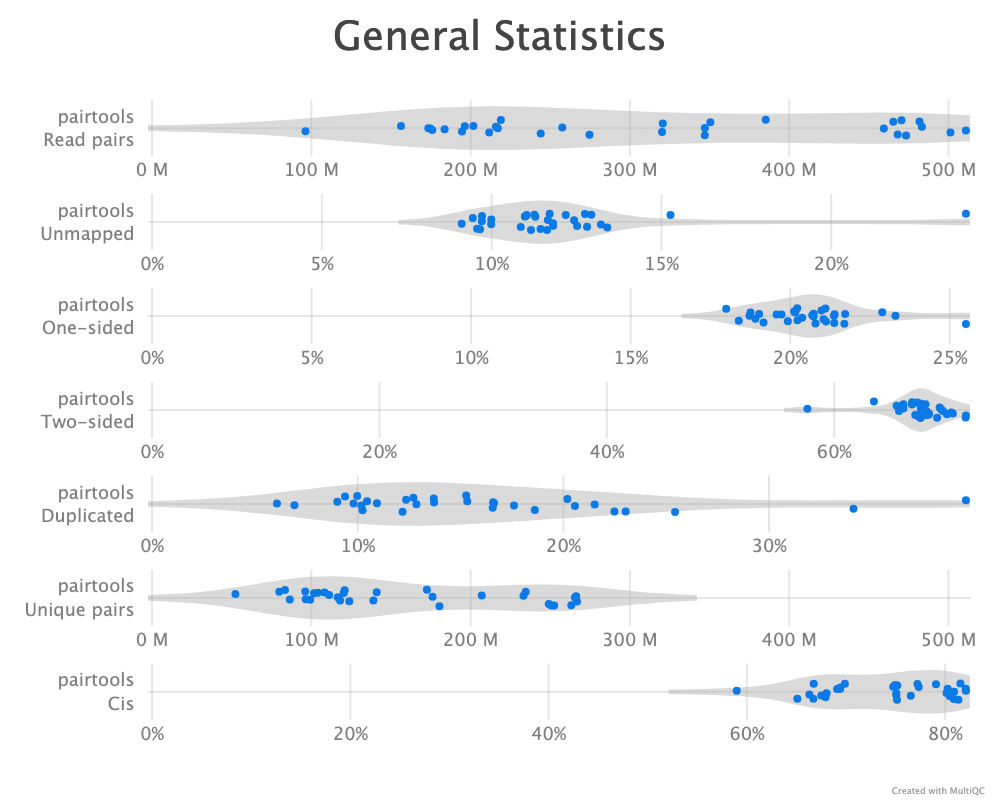

--walks-policy 5unique

Parsing alignments with the recommended walks-policy aproximately halves the percentage of unmapped reads, and one- and two-sided reads as well duplicated reads are slightly increased. Overall number of unique pairs are increased with more than 20% increase. The percentage of cis pairs are only decreased by a percentage point at most (Figure 9 (b)). Changing the walks policy does not alter the \(P(s)\) curve, meaning the parameter does not bias the parsing w.r.t. genomic separation.

pairtools stats run on all samples from the two walks-policies. Left (a+c): mask; right (b+d): 5unique. Generated by MultiQC (Ewels et al. 2016). Note: X-axes are not shared in the ‘Genereal Statistics’ plot.

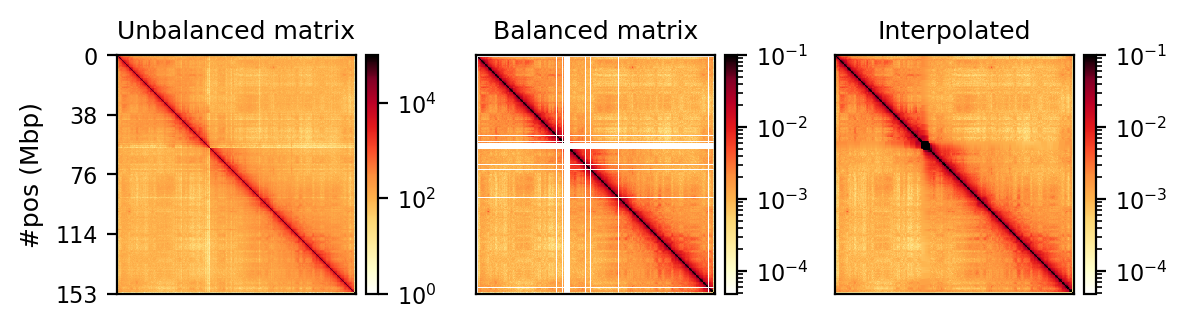

Correction

Matrix balancing did not show major improvement in the plaid pattern, as it already showed the expected pattern. It does, however, filter out bins that are deemed too low-count to be informative, for example peri-centromeric regions. The matrix was expected to be smoother after balancing (for chromosome-wide maps), as regions along a chromosome should only vary slowly in contact frequency with other regions as they are on a continouos molecule. Therefore, sharp contrasts represent a sudden drop in bin count (Figure 10 (a), raw) and should not be interpreted as devoid of interaction, but an indication that the data is not sufficient to interpret. It is then better to simply remove the bins instead of correcting, which will also amplify noise. Even with a high-quality Hi-C library we expect that all bins do not have the same coverage throughout(Lajoie, Dekker, and Kaplan 2015), as restriction enzymes do not bind equally to all regions of the genome, and therefore, some bins will be underrepresented as an artefact of binding/cutting efficieny of the restriction enzyme used.

We can try to mitigate the white lines of empty bins that now appear in the matrices. The coarsegrained and interpolated matrix is useful to make a good-looking interaction matrix, but is not that useful for analysis purposes. It might get easier to visually inspect the matrix, but it is not clear how well the interpolated matrix reflects the structure of the chromatin, and it is not transparent which regions are interpolated and which that are not. I find it purposeful for interpolation on high-resolution (zoomed-in) views (Figure 10 (b)) with small empty regions, but misleading for chromosome-wide maps, where typically the centromere and extremities of the chromosome have filtered-out bins. Interpolation is further discussed below.

The regions that are coarsegrained are small zero- or low-count bins which are averaged, effectively reducing the resolution of those regions until the count is sufficient. They get more frequent the longer genomic distance (the further we travel from the diagonal), and effectively enables us to get some intuition about the interactions. The coarsegrain, however, does not interpolate the NaNs created when filtering out whole bins in the balancing step (horisontal and vertical lines in Figure 10 (a) and Figure 10 (b); middle). This is done in a subsequent step by linearly interpolating the NaNs. Examining the interpolated matrix on full chrX (Figure 10 (a); right) gives the impression that the pericentromeric (at ~60 Mbp) region harbours a very strong compartment, but that is clearly an artefact of the interpolation on the very large empty region of the centromere, where the diagonal is somehow extended in a square. On the thinner lines, the interpolation seem to be more smooth, and barely noticable on the diagonal.

NaN histograms

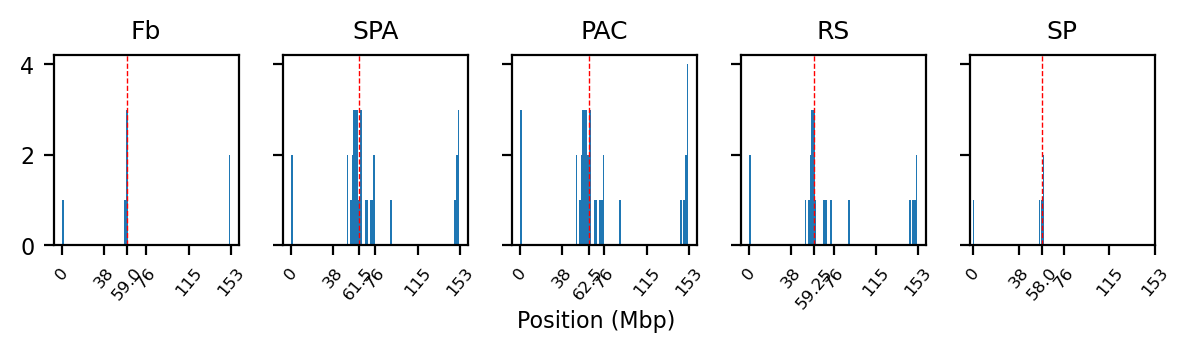

As expected, most of the low quality bins are located on the edges of the chromosome arms, especially the region around the centromere (Warren et al. 2020), as they contain many repetitive sequences. The low-quality bins are filtered out by the balancing algorithm, those bins are NaN in the Hi-C matrix. The median position of the NaN values (Figure 11) ranges between \(58\) and \(63.5\), which is within the estimate of the centromeric region of rhemac10 (the UCSC browser has a continuously unannotated region at chrX:57,500,000-60,200,000). The fact that the medians lie within the centromeric region on all cell sources shows both that the majority of the bad bins are in the (peri)centromeric region and there are approximately equally many on each side.

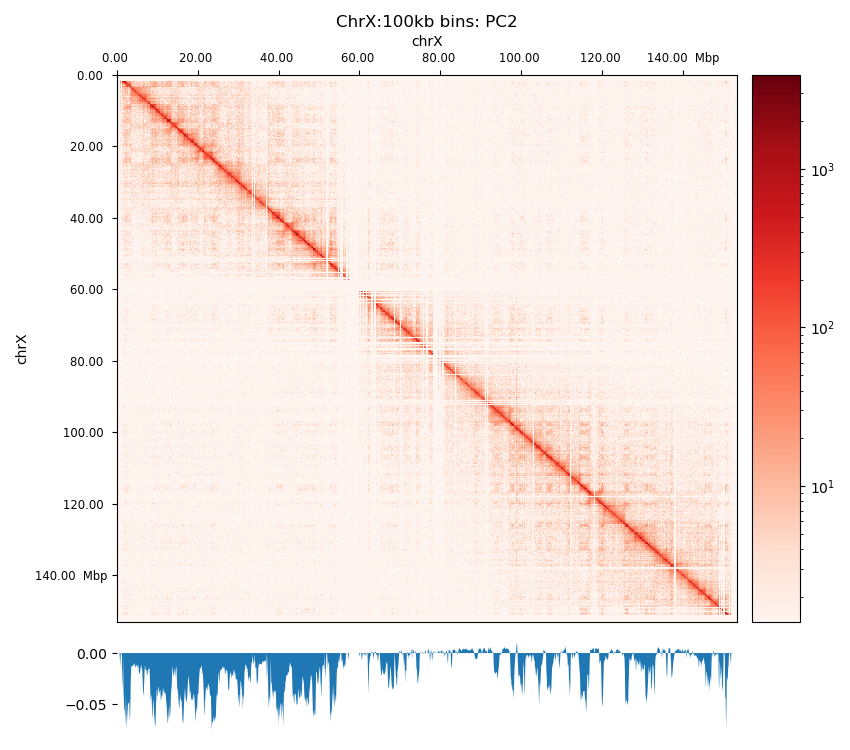

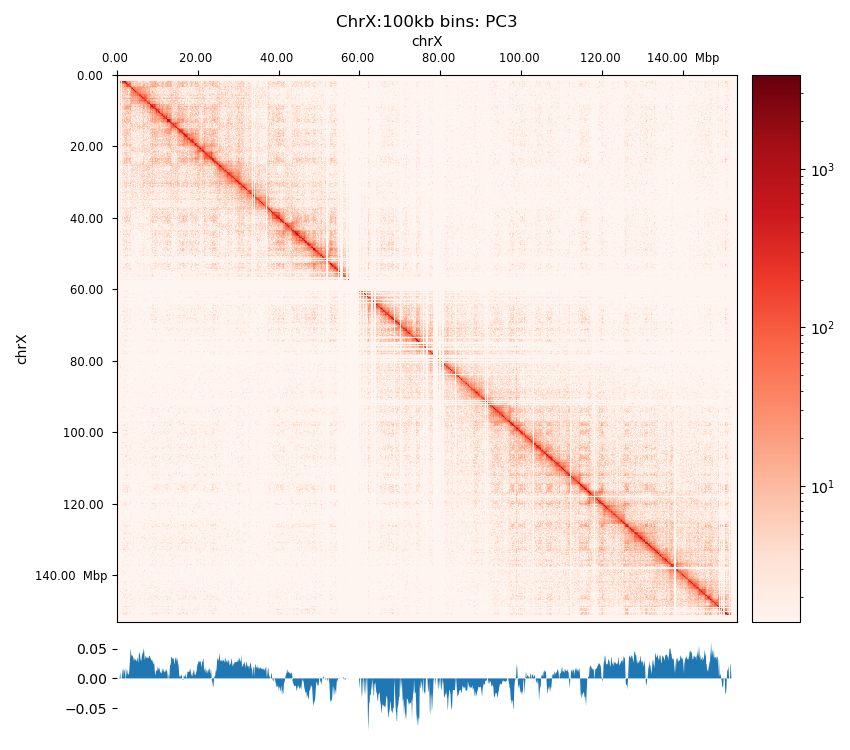

Compartments (Eigenvectors)

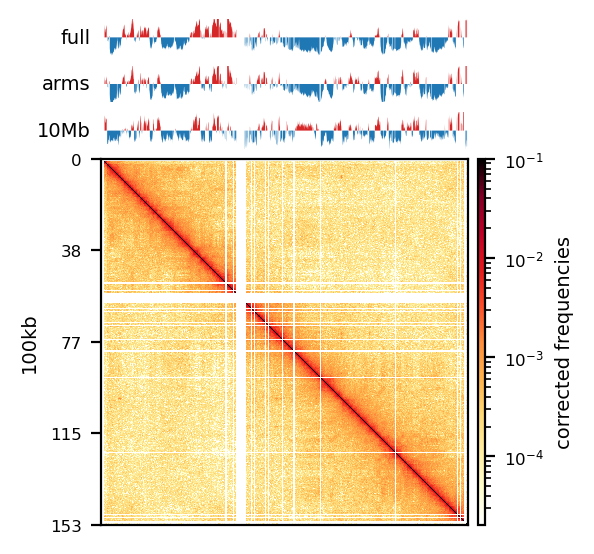

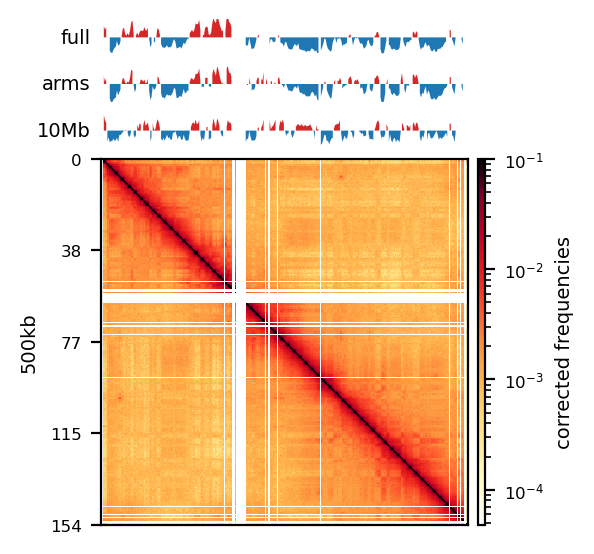

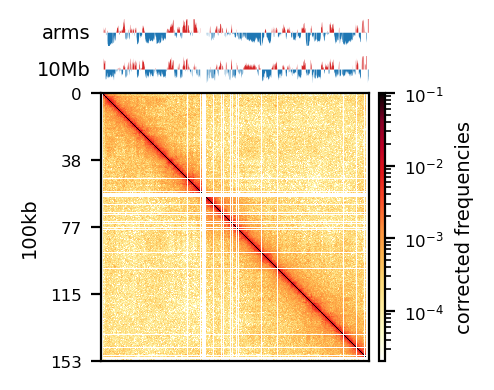

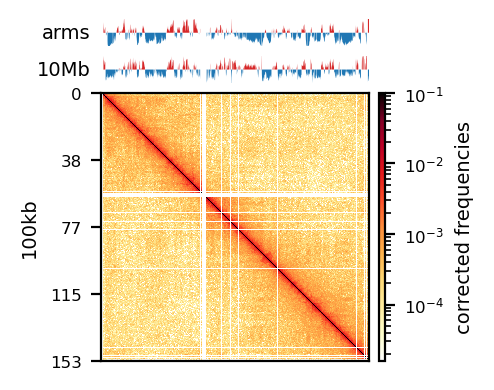

The three viewframes (Full, Arms, 10Mb) used for the calculation of the eigenvectors captured different variability in the data (Figure 12), and as expected, the inferred compartments (colored red on the E1 tracks) are more abundant and smaller with smaller viewframes. To determine how well each of the E1 tracks capture the pattern in the interaction matrix, we can overlay the matrix with the E1 sign-change and visually determine if the squares reflect the E1 sign change (Figure 12).

I decide that without more finescaled knowledge than the position of the centromeres, the arbitrary size of the 10 Mb windowed E1 can not fully be justified. That is, we could arbitrarily calculate any windowed E1 track. Also, Wang et al. (2019) concludes only for pachytene spermatocyte to show local interactions in the 10Mb viewframe (what they refer to as refined A/B-compartments), and all the other stages of spermatogenesis were consistent with the conventional A/B compartments. The reasonable thing to do is therefore to continue the analysis, focusing on the arms-restricted eigendecomposition. Nevertheless, we also keep refined compartments in the analysis.

Additionally, as I created coolers with two different sets of parsing parameters we will compare the resulting matrices and their compartments (Figure 13). As expected, we observe more empty bins in the Hi-C matrix when comparing the initial run (mask) to the recommended parameters (5unique), but otherwise, the interaction pattern is indestinguishable. The effect on the E1 is more noticable, where the absolute magnitude of the E1 values is generally smaller. There is, however, a small region that changes sign (from A to B) on the 10Mb-windowed (‘refined’) E1 track (Figure 13;c+d). This region is surrounded by added empty bins, which could mean that too many low quality pairs in mask were introducing bias and swapped the sign of E1. It is supported by the fact that the sign change only occured in refined E1, and that the sign after filtering weak pairs (\(mapq < 30\)) is consistent with the arms view. It supports my previous postulate that it is better to use a viewframe with explicit molecular meaning than one of an arbitrary window size. That said, the mapq threshold should really be determined taking both coverage and resolution into account. For our purposes, and with the arms view, the mapping- and parsing parameters do not seem to be too sensitive.

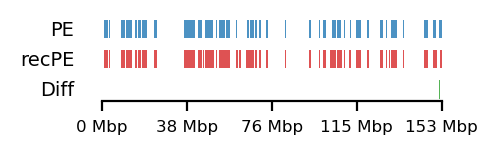

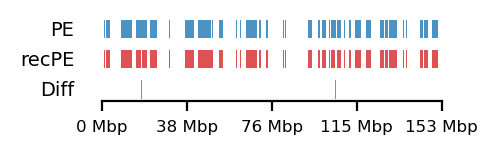

To emphasize the findings, the sets of A-compartments were compared between the two parsing runs, showing almost identical compartment calls. Additionally, the set difference was 8 bins between PE and recPE for round spermatid 100kb and 5 bins for fibroblast for arms viewframe (Figure 14; a+b, respectively). We observe a high number of differences around 76Mb for the refined compartments (10Mb) of round spermatid, which is consistent with the sign-flip of E1 values discussed earlier. Anything else would be surprising, as it is the same data, but visualized in a different way.

The observed difference between the sets can for our data be attributed to chance, but we cannot draw general conclusions about the parameters in general. I argue that the quality and size of the Hi-C library will influence sensitive to parsing parameters. In that case, the most flexible approach is still to follow the recommendations from cooler to report more pairs as valid contacts, and then create coolers with different mapq filters if issues are encountered.

Comparing Genomic Intervals

The comparison of genomic intervals was modified along the way, as we gained more knowledge and caught mistakes in the original implementation (especially of the proxomity test). Here, I only report the results of the final version of the software, but the modifications and implications hereof are discussed in Section 4.

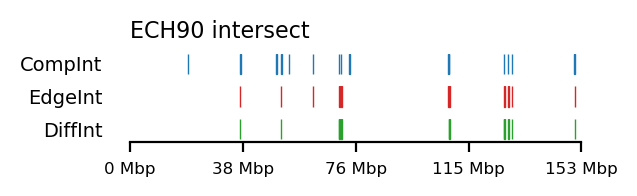

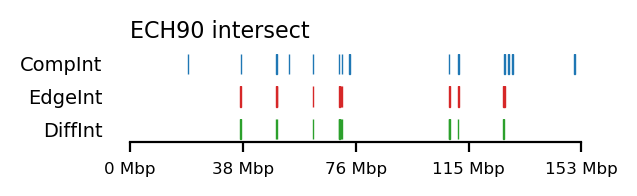

Compartment Edges (transition zones)

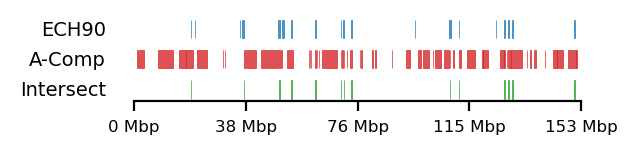

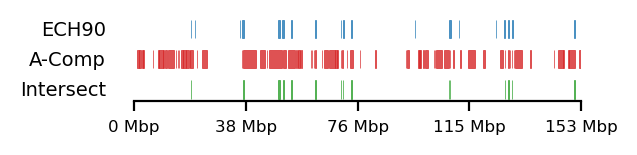

We compare how the ECH90 regions fit when queried on top of the A-compartments and equivalently for the edges, for fibroblasts and round spermatids at 100kb resolution. When queried against the edges instead, the the total set size is reduced to less than 50%. Interestingly, some of the intersections between A-compartments and ECH90 remain, and new ones appear as we move to the outside edge of the compartment (Figure 15). This indicates that most, but not all, of the intersection between ECH90 regions and the A-compartments are within 100kb of the compartment edge, and additional overlap is gained if we define a transition zone on the outside of the edge as well. To visualize this (outside) edge enrichment, we find the set difference of the ECH-intersection to compartments and edges, respectively (Figure 16), thus removing all the ‘inside’ edges. We observe that in almost all of the of the regions of \(ECH \cap Comp\) are accompanied by an edge also intersecting ECH (\(ECH \cap Edge\)), localized where the Diff track aligns (within 100kb) with both \(CompInt\) and \(EdgeInt\).

I apply both the proximity and Jaccard test to see how well the observations could be explained by chance. For completeness, the tests are performed for all cell types, but only use 100kb resolution and arms viewframe. We observe that both fibroblast and round spermatids have significant overlap (Jaccard p-values \(0.012\) and \(0.010\), respectively), meaning the edge zone have more intersection with the ECH regions than expected by chance. None of the samples passed the proximity test, meaning the non-overlapping segments were no more proximal than expected by chance. A significant proximity test (when performed on the edges) might provide information about the potential of expanding or moving the transition window. That is, if the non-overlapping regions are very proximal, a larger (or shifted) window to only capture the 200kb region outside of the edge might be favourable. With the very small amount of observed proximity (high p-values), we might consider shrinking the windows.

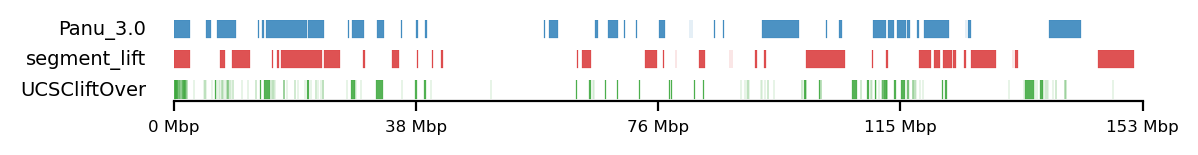

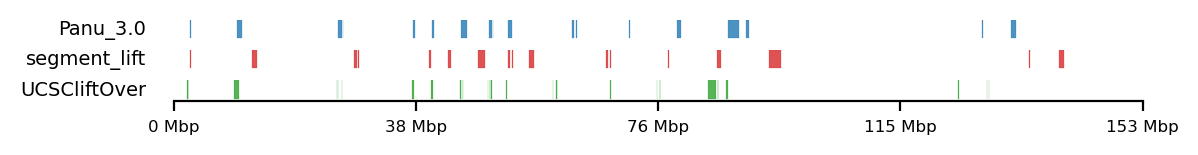

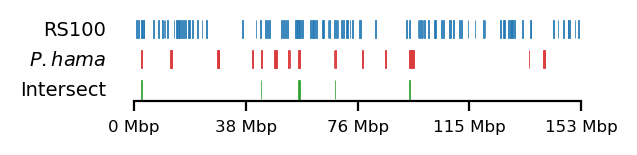

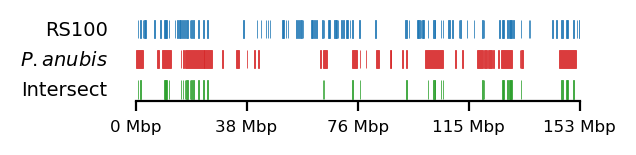

Testing against baboon regions under strong selection

The data for this analysis was provided by Kasper Munch in bed-like format, mapped to panu_3.0 (PapAnu4) assembly. The intervals define genomic regions in a hybrid/migrating population of baboon where strong negative selection acts against minor parent ancestry (Sørensen et al. 2023). The segments had to be lifted to rheMac10 coordinates to be able to correlate the two sets of intervals. The original UCSC-liftOver (Hinrichs 2006) is very strict and does not try to conserve segments in favor of accuracy e.g. inversions or small indels, which results in highly fragmented regions when lifted to another assembly, if the segments are not continouos on the new assembly. For our analysis, it is not the exact genomic position or order of sub-genic regions that are important, but rather, the start and end coordinates of each segment are quantified. To favor preservation of segments, we use segment_liftover (Gao, Huang, and Baudis 2018), resulting in much more similar segments to the original (Figure 17). As no chain file from panu_3.0 to Mmul_10 was available, we used panu_2.0 as intermediate.