import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import matplotlib_inline

import pymc as pm

import arviz as az

import pytensor.tensor as pt

from scipy.stats import norm, t

from pprint import pprint

## Use a custom style for the plots

plt.style.use('smaller.mplstyle')

matplotlib_inline.backend_inline.set_matplotlib_formats('retina')

#%config InlineBackend.figure_formats = ['retina']Comparing GRW models for A/B Clustering

Quantifying differences in GRW models with varying parameters

Here, we compare probabilistic modeling of eigenvectors into A/B compartment calls for Hi-C data.

We compare different models, all using the same underlying structure, but varying smaller aspects of the model.

Goals

To quantify differences in model performance, we use the following metrics:

WAIC: Widely Applicable Information Criterion, a measure of model fit that penalizes complexity.

LOO: Leave-One-Out Cross-Validation, a method for estimating the predictive accuracy of a model.

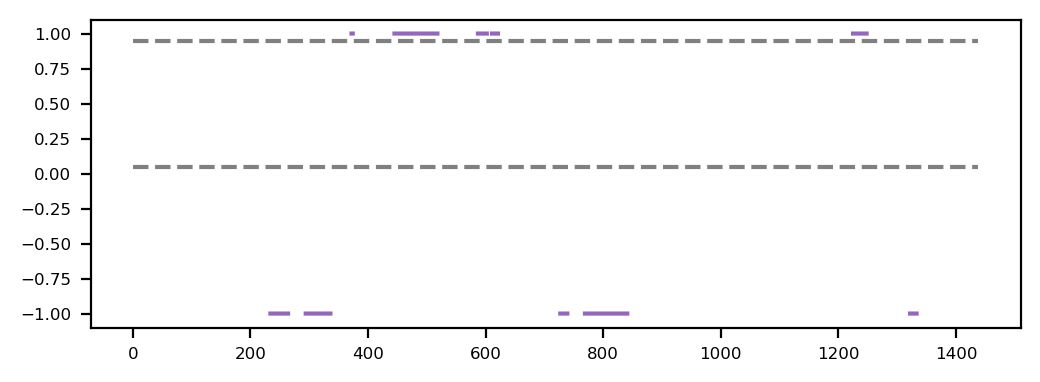

We also try to assign uncertainty to the compartment calls, ultimately defining credible intervals for the compartment transitions.

Imports and setup

Load/show data

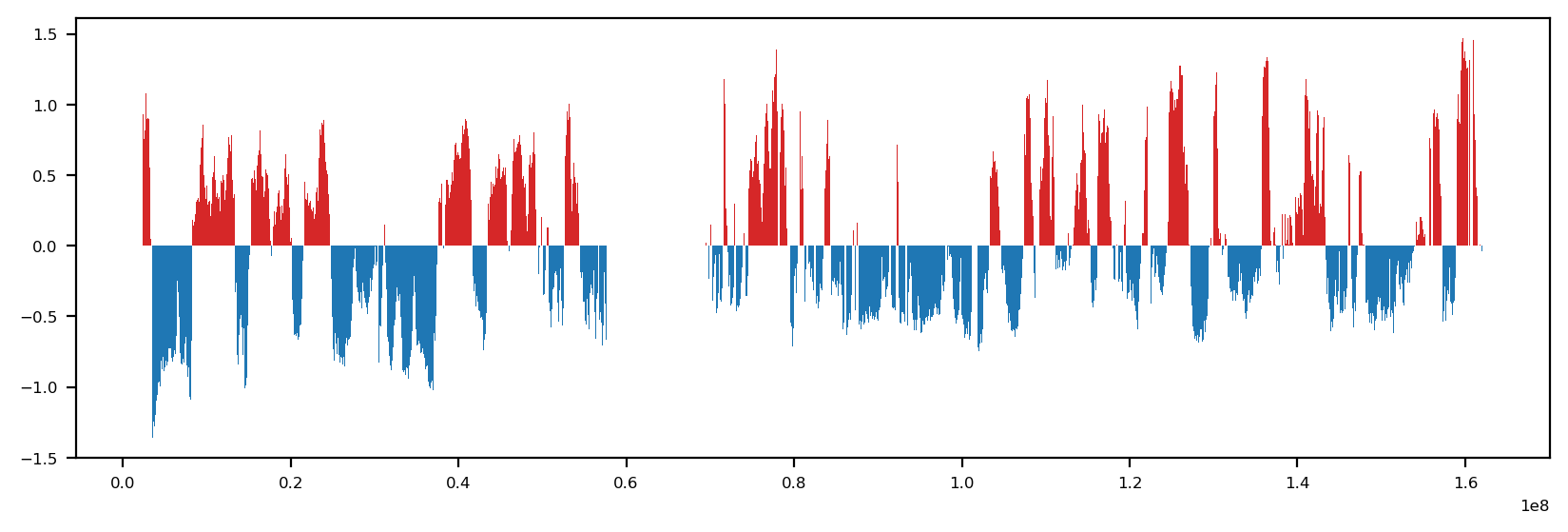

Here, we load the Hi-C contact matrix and the first eigenvector (E1) of the contact matrix. The E1 is used as a predictor for compartment assignment.

The eigendecomposition of the Hi-C matrix was performed with the workflow in the main folder (workflow.py), using the Open2C ecosystem

# Load the data

resolution = 100000

y = pd.Series(pd.read_csv(f"../data/eigs/sperm.eigs.{resolution}.cis.vecs.tsv", sep="\t")['E1'].values.flatten())

x = pd.Series(np.arange(0,y.shape[0])*resolution)

# Make a DataFrame object

df = pd.DataFrame({"start": x, "e1": y})

# Plot the data

fig, ax = plt.subplots(figsize=(10, 3))

ax.fill_between(df.start, df.e1, where=df.e1 > 0, color='tab:red', ec='None', label='E1', step='pre')

ax.fill_between(df.start, df.e1, where=df.e1 < 0, color='tab:blue', ec='None', label='E1', step='pre')

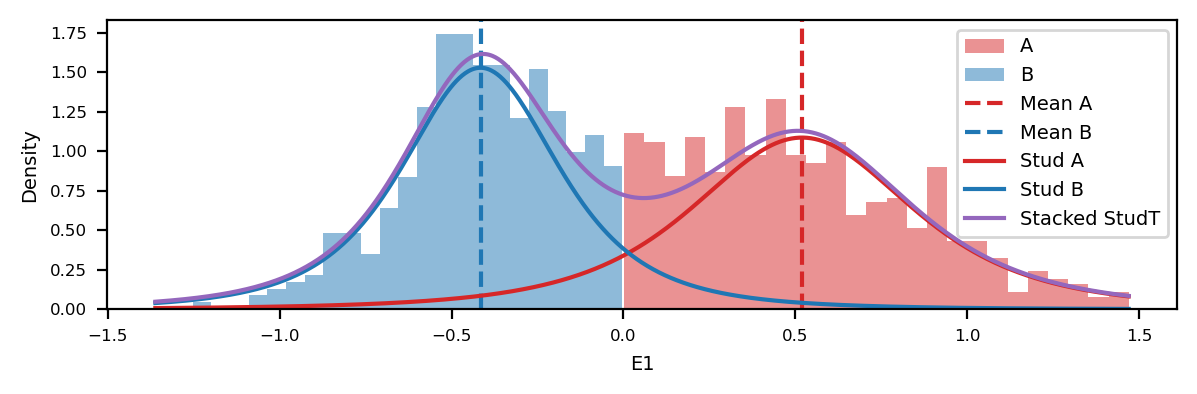

df.dropna(inplace=True)# Histogram of distribution of E1 values (A and B)

x = df["start"].values

y = df["e1"].values

dist_x_values = np.linspace(y.min(), y.max(), 1000)

a_dist = t.pdf(dist_x_values, loc=np.mean(y[y>0]), scale=y[y>0].std(), df=3)

b_dist = t.pdf(dist_x_values, loc=np.mean(y[y<0]), scale=y[y<0].std(), df=3)

f, ax = plt.subplots()

# A compartments

ax.hist(y[y>0], bins=25, color="tab:red", alpha=0.5, label="A", density=True)

# B compartments

ax.hist(y[y<0], bins=25, color="tab:blue", alpha=0.5, label="B", density=True)

# Mean values (vline)

ax.axvline(y[y>0].mean(), color="tab:red", linestyle="--", label="Mean A")

ax.axvline(y[y<0].mean(), color="tab:blue", linestyle="--", label="Mean B")

# Plot the normal distributions

ax.plot(dist_x_values, a_dist, color="tab:red", label="Stud A")

ax.plot(dist_x_values, b_dist, color="tab:blue", label="Stud B")

ax.plot(dist_x_values, a_dist+b_dist, color="tab:purple", label="Stacked StudT")

# Final touches

plt.xlabel("E1")

plt.ylabel("Density")

plt.legend(loc='best')

plt.tight_layout()Here, the observed distributions are compared to StudenT distributions of 3 degrees of freedom and the same mean and standard deviation as the observed data. The Student’s t-distribution is used because it is more robust with its heavier tails than the normal.

Models

Here, we expand on the results from 03_PyMC_GRW_logit.ipynb, and use the same model structure, but with different priors and likelihoods. Overall, the following thoughts are implemented:

- We assume that the eigenvectors can be drawn from a mixture of two distributions, one for each compartment (A and B). It could be any mixture, but the means should be negative for B and positive for A. We use either to model

mu:- Gaussians, as a simple starting point, or

- Student’s t-distributions, as a heavier-tailed alternative.

- The mixtures has to be ordered in PyMC to ensure that the A compartment is always positive and the B compartment is always negative.

- The mixture components have their own (learned) standard deviation,

sigma. - The mixture components use

muandsigma(each of shape 2) in either a,- Normal distribution, or

- Student’s t-distribution, with 10 degrees of freedom.

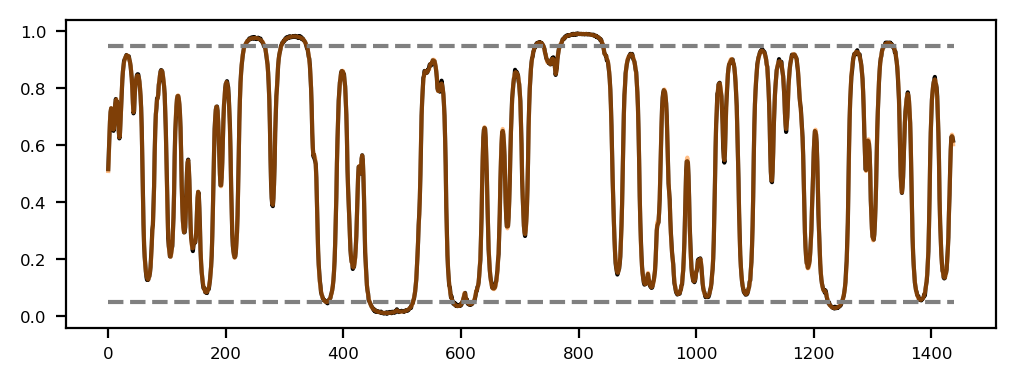

- We model the mixture weight as a non-centered (Gaussian) random walk prior, as a method of probabilistic smoothing of the PCA compartment calls.

epsis the (learned) step size of the random walk, and- either Normal or Student’s t-distributed

grw_sigmais the (learned) standard deviation of the random walk- either HalfNormal or HalfStudentT-distributed

- The logit-space (of the mixture weight) is then modeled deterministically as the cummulative sum of the random walk steps.

- The logit-space is then transformed to the probability space by squishing it through a sigmoid function.

- \(\hat{y}\) (

y_hat) is drawing the observations from the mixture distribution, using the mixture weight and the two components (the learned distributions of E1 values for A and B-compartments).

Model 1: T-dist in logit space, T-dist in mixture components

import pytensor.tensor as pt

with pm.Model(coords={"pos": df.index.values}) as latentT_Tmix_model:

e1 = pm.Data("e1", df.e1.values, dims="pos")

# Ordered parameters for the means of the two components

# Note: The ordered transform ensures that mu_a < mu_b

mu = pm.Normal("mu", mu=[-0.5, 0.5], sigma=0.3,

transform=pm.distributions.transforms.ordered, # IMPORTANT

shape=2,

)

sigma = pm.HalfNormal("sigma", 0.3, shape=2)

# GRW over logit space; non-centered reparameterization

grw_sigma = pm.HalfNormal("grw_sigma", 0.05)

# StudentT distribution for eps (got alot of divergences with nu=3)

eps = pm.StudentT("eps", nu=10, mu=0.0, sigma=1.0, dims="pos")

# Cumulative sum to create a Gaussian Random Walk

logit_w = pm.Deterministic("logit_w", pt.cumsum(eps * grw_sigma), dims="pos")

w = pm.Deterministic("w", pm.math.sigmoid(logit_w), dims="pos")

# Components of the mixture model

components = pm.StudentT.dist(nu=10, mu=mu, sigma=sigma, shape=2)

# Mixture model

# The observed data is modeled as a mixture of the two components

y_hat = pm.Mixture("y_hat", w=pm.math.stack([w,1-w], axis=1), comp_dists=components, observed=e1, dims='pos')Model 1: T-dist in logit space, Normal in mixture components

with pm.Model(coords={"pos": df.index.values}) as latentT_Nmix_model:

e1 = pm.Data("e1", df.e1.values, dims="pos")

# Ordered parameters for the means of the two components

# Note: The ordered transform ensures that mu_a < mu_b

mu = pm.Normal("mu", mu=[-0.5, 0.5], sigma=0.3,

transform=pm.distributions.transforms.ordered, # IMPORTANT

shape=2,

)

sigma = pm.HalfNormal("sigma", 0.3, shape=2)

# GRW over logit space; non-centered reparameterization

grw_sigma = pm.HalfNormal("grw_sigma", 0.05)

# StudentT distribution for eps (got alot of divergences with nu=3)

eps = pm.StudentT("eps", nu=10, mu=0.0, sigma=1.0, dims="pos")

# Cumulative sum to create a Gaussian Random Walk

logit_w = pm.Deterministic("logit_w", pt.cumsum(eps * grw_sigma), dims="pos")

w = pm.Deterministic("w", pm.math.sigmoid(logit_w), dims="pos")

# Components of the mixture model

components = pm.StudentT.dist(nu=10, mu=mu, sigma=sigma, shape=2)

# Mixture model

# The observed data is modeled as a mixture of the two components

y_hat = pm.Mixture("y_hat", w=pm.math.stack([w,1-w], axis=1), comp_dists=components, observed=e1, dims='pos')Parameter sweep configuration

Here is an attempt to sweep through parameters in a reproducible and deterministic way. The idea is to use a grid search over the parameters, and then run the models in parallel, and finally collect the results and analyze them.

First, let’s create a function that create a grid of parameters to sweep over using itertools.

Create parameter grid

import itertools as it

def create_model_grid():

"""

Create a grid of models for the different configurations as defined below.

Name the model according to the configuration for easier identification:

- comp_dist: Distribution of the components (Normal or StudentT)

- eps_dist: Distribution of the GRW steps (Normal or StudentT)

- grw_sigma_dist: Distribution of the GRW noise (HalfNormal or HalfStudentT)

name = f"{comp_dist}_{eps_dist}_{grw_sigma_dist}"

- If any distribution is StudentT, append the nu parameter to the name.

"""

def config_namer(comp_dist, comp_kwargs, eps_dist, eps_kwargs, grw_sigma_dist, grw_sigma_kwargs):

abbr = {

'Normal': 'N',

'StudentT': 'T',

'HalfNormal': 'HN',

'HalfStudentT': 'HT'

}

name = f"{abbr[comp_dist]}_{abbr[eps_dist]}_{abbr[grw_sigma_dist]}"

if comp_dist == 'StudentT':

name += f"_{comp_kwargs['nu']}"

if eps_dist == 'StudentT':

name += f"_{eps_kwargs['nu']}"

if grw_sigma_dist == 'HalfStudentT':

name += f"_{grw_sigma_kwargs['nu']}"

return name

comp_kwargs_list = [

('Normal', {}),

('StudentT', {'nu': 10}),

('StudentT', {'nu': 5})

]

eps_kwargs_list = [

('Normal', {'mu': 0.0, 'sigma': 1.0}),

('StudentT', {'nu': 10, 'mu': 0.0, 'sigma': 1.0}),

('StudentT', {'nu': 5, 'mu': 0.0, 'sigma': 0.5})

]

grw_sigma_kwargs_list = [

('HalfNormal', {'sigma': 0.05}),

('HalfStudentT', {'nu': 10, 'sigma': 0.05}),

('HalfStudentT', {'nu': 5, 'sigma': 0.01})

]

# Prior configurations for E1 mixture

mu_mu = [-0.5, 0.5]

mu_sigma = 0.3

sigma = 0.3

grid = it.product(comp_kwargs_list, eps_kwargs_list, grw_sigma_kwargs_list)

configs = []

for (comp_dist, comp_kwargs), (eps_dist, eps_kwargs), (grw_sigma_dist, grw_sigma_kwargs) in grid:

name = config_namer(

comp_dist, comp_kwargs,

eps_dist, eps_kwargs,

grw_sigma_dist, grw_sigma_kwargs

)

config = {

'name': name,

'comp_dist': comp_dist,

'comp_kwargs': comp_kwargs,

'eps_dist': eps_dist,

'eps_kwargs': eps_kwargs,

'grw_sigma_dist': grw_sigma_dist,

'grw_sigma_kwargs': grw_sigma_kwargs,

'mu_mu': mu_mu,

'mu_sigma': mu_sigma,

'sigma': sigma

}

configs.append(config)

return configs

from pprint import pprint

import json

# Instantiate the model grid

configs = create_model_grid()

pprint(configs)

# Save the model grid to a JSON file

with open("../results/model_grid.json", "w") as f:

json.dump(configs, f, indent=4)[{'comp_dist': 'Normal',

'comp_kwargs': {},

'eps_dist': 'Normal',

'eps_kwargs': {'mu': 0.0, 'sigma': 1.0},

'grw_sigma_dist': 'HalfNormal',

'grw_sigma_kwargs': {'sigma': 0.05},

'mu_mu': [-0.5, 0.5],

'mu_sigma': 0.3,

'name': 'N_N_HN',

'sigma': 0.3},

{'comp_dist': 'Normal',

'comp_kwargs': {},

'eps_dist': 'Normal',

'eps_kwargs': {'mu': 0.0, 'sigma': 1.0},

'grw_sigma_dist': 'HalfStudentT',

'grw_sigma_kwargs': {'nu': 10, 'sigma': 0.05},

'mu_mu': [-0.5, 0.5],

'mu_sigma': 0.3,

'name': 'N_N_HT_10',

'sigma': 0.3},

{'comp_dist': 'Normal',

'comp_kwargs': {},

'eps_dist': 'Normal',

'eps_kwargs': {'mu': 0.0, 'sigma': 1.0},

'grw_sigma_dist': 'HalfStudentT',

'grw_sigma_kwargs': {'nu': 5, 'sigma': 0.01},

'mu_mu': [-0.5, 0.5],

'mu_sigma': 0.3,

'name': 'N_N_HT_5',

'sigma': 0.3},

{'comp_dist': 'Normal',

'comp_kwargs': {},

'eps_dist': 'StudentT',

'eps_kwargs': {'mu': 0.0, 'nu': 10, 'sigma': 1.0},

'grw_sigma_dist': 'HalfNormal',

'grw_sigma_kwargs': {'sigma': 0.05},

'mu_mu': [-0.5, 0.5],

'mu_sigma': 0.3,

'name': 'N_T_HN_10',

'sigma': 0.3},

{'comp_dist': 'Normal',

'comp_kwargs': {},

'eps_dist': 'StudentT',

'eps_kwargs': {'mu': 0.0, 'nu': 10, 'sigma': 1.0},

'grw_sigma_dist': 'HalfStudentT',

'grw_sigma_kwargs': {'nu': 10, 'sigma': 0.05},

'mu_mu': [-0.5, 0.5],

'mu_sigma': 0.3,

'name': 'N_T_HT_10_10',

'sigma': 0.3},

{'comp_dist': 'Normal',

'comp_kwargs': {},

'eps_dist': 'StudentT',

'eps_kwargs': {'mu': 0.0, 'nu': 10, 'sigma': 1.0},

'grw_sigma_dist': 'HalfStudentT',

'grw_sigma_kwargs': {'nu': 5, 'sigma': 0.01},

'mu_mu': [-0.5, 0.5],

'mu_sigma': 0.3,

'name': 'N_T_HT_10_5',

'sigma': 0.3},

{'comp_dist': 'Normal',

'comp_kwargs': {},

'eps_dist': 'StudentT',

'eps_kwargs': {'mu': 0.0, 'nu': 5, 'sigma': 0.5},

'grw_sigma_dist': 'HalfNormal',

'grw_sigma_kwargs': {'sigma': 0.05},

'mu_mu': [-0.5, 0.5],

'mu_sigma': 0.3,

'name': 'N_T_HN_5',

'sigma': 0.3},

{'comp_dist': 'Normal',

'comp_kwargs': {},

'eps_dist': 'StudentT',

'eps_kwargs': {'mu': 0.0, 'nu': 5, 'sigma': 0.5},

'grw_sigma_dist': 'HalfStudentT',

'grw_sigma_kwargs': {'nu': 10, 'sigma': 0.05},

'mu_mu': [-0.5, 0.5],

'mu_sigma': 0.3,

'name': 'N_T_HT_5_10',

'sigma': 0.3},

{'comp_dist': 'Normal',

'comp_kwargs': {},

'eps_dist': 'StudentT',

'eps_kwargs': {'mu': 0.0, 'nu': 5, 'sigma': 0.5},

'grw_sigma_dist': 'HalfStudentT',

'grw_sigma_kwargs': {'nu': 5, 'sigma': 0.01},

'mu_mu': [-0.5, 0.5],

'mu_sigma': 0.3,

'name': 'N_T_HT_5_5',

'sigma': 0.3},

{'comp_dist': 'StudentT',

'comp_kwargs': {'nu': 10},

'eps_dist': 'Normal',

'eps_kwargs': {'mu': 0.0, 'sigma': 1.0},

'grw_sigma_dist': 'HalfNormal',

'grw_sigma_kwargs': {'sigma': 0.05},

'mu_mu': [-0.5, 0.5],

'mu_sigma': 0.3,

'name': 'T_N_HN_10',

'sigma': 0.3},

{'comp_dist': 'StudentT',

'comp_kwargs': {'nu': 10},

'eps_dist': 'Normal',

'eps_kwargs': {'mu': 0.0, 'sigma': 1.0},

'grw_sigma_dist': 'HalfStudentT',

'grw_sigma_kwargs': {'nu': 10, 'sigma': 0.05},

'mu_mu': [-0.5, 0.5],

'mu_sigma': 0.3,

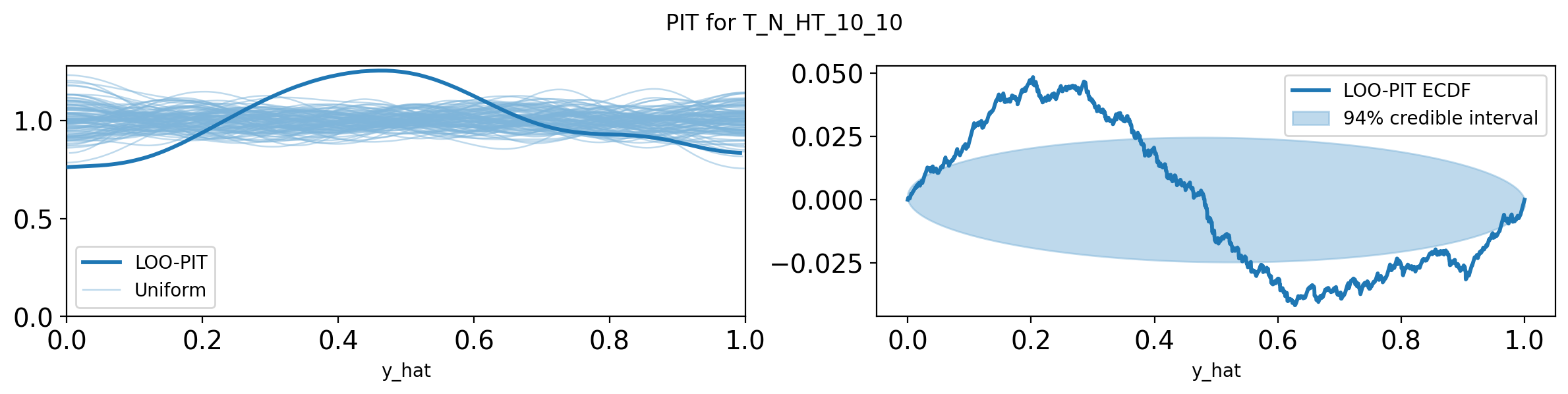

'name': 'T_N_HT_10_10',

'sigma': 0.3},

{'comp_dist': 'StudentT',

'comp_kwargs': {'nu': 10},

'eps_dist': 'Normal',

'eps_kwargs': {'mu': 0.0, 'sigma': 1.0},

'grw_sigma_dist': 'HalfStudentT',

'grw_sigma_kwargs': {'nu': 5, 'sigma': 0.01},

'mu_mu': [-0.5, 0.5],

'mu_sigma': 0.3,

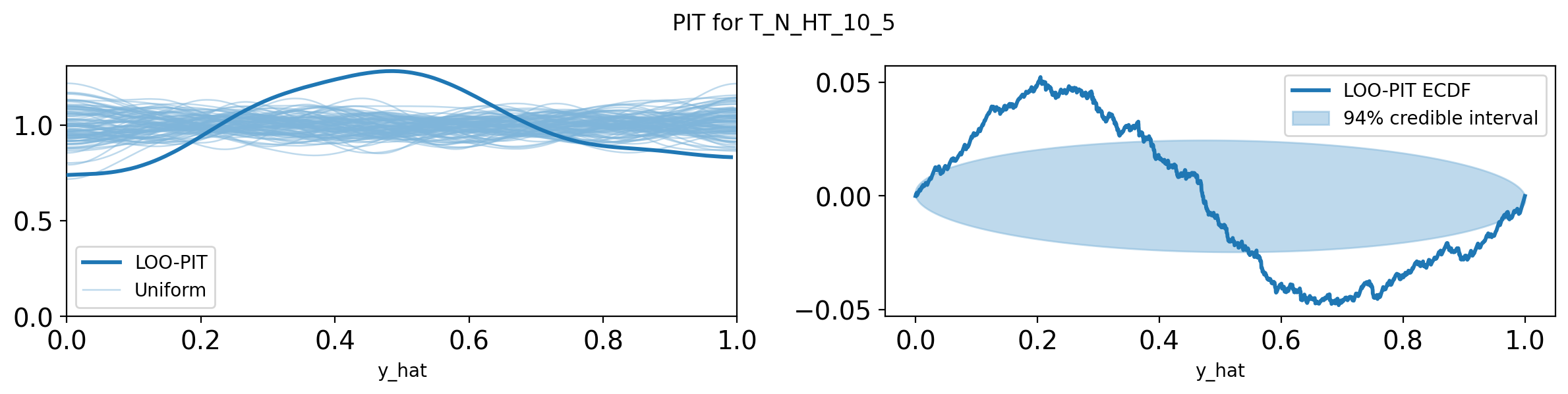

'name': 'T_N_HT_10_5',

'sigma': 0.3},

{'comp_dist': 'StudentT',

'comp_kwargs': {'nu': 10},

'eps_dist': 'StudentT',

'eps_kwargs': {'mu': 0.0, 'nu': 10, 'sigma': 1.0},

'grw_sigma_dist': 'HalfNormal',

'grw_sigma_kwargs': {'sigma': 0.05},

'mu_mu': [-0.5, 0.5],

'mu_sigma': 0.3,

'name': 'T_T_HN_10_10',

'sigma': 0.3},

{'comp_dist': 'StudentT',

'comp_kwargs': {'nu': 10},

'eps_dist': 'StudentT',

'eps_kwargs': {'mu': 0.0, 'nu': 10, 'sigma': 1.0},

'grw_sigma_dist': 'HalfStudentT',

'grw_sigma_kwargs': {'nu': 10, 'sigma': 0.05},

'mu_mu': [-0.5, 0.5],

'mu_sigma': 0.3,

'name': 'T_T_HT_10_10_10',

'sigma': 0.3},

{'comp_dist': 'StudentT',

'comp_kwargs': {'nu': 10},

'eps_dist': 'StudentT',

'eps_kwargs': {'mu': 0.0, 'nu': 10, 'sigma': 1.0},

'grw_sigma_dist': 'HalfStudentT',

'grw_sigma_kwargs': {'nu': 5, 'sigma': 0.01},

'mu_mu': [-0.5, 0.5],

'mu_sigma': 0.3,

'name': 'T_T_HT_10_10_5',

'sigma': 0.3},

{'comp_dist': 'StudentT',

'comp_kwargs': {'nu': 10},

'eps_dist': 'StudentT',

'eps_kwargs': {'mu': 0.0, 'nu': 5, 'sigma': 0.5},

'grw_sigma_dist': 'HalfNormal',

'grw_sigma_kwargs': {'sigma': 0.05},

'mu_mu': [-0.5, 0.5],

'mu_sigma': 0.3,

'name': 'T_T_HN_10_5',

'sigma': 0.3},

{'comp_dist': 'StudentT',

'comp_kwargs': {'nu': 10},

'eps_dist': 'StudentT',

'eps_kwargs': {'mu': 0.0, 'nu': 5, 'sigma': 0.5},

'grw_sigma_dist': 'HalfStudentT',

'grw_sigma_kwargs': {'nu': 10, 'sigma': 0.05},

'mu_mu': [-0.5, 0.5],

'mu_sigma': 0.3,

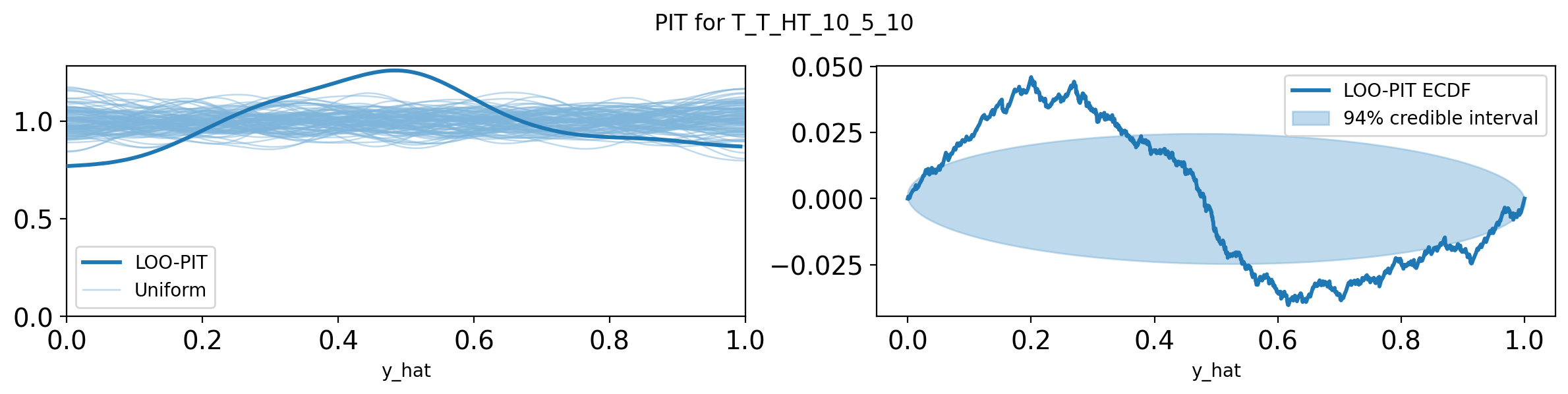

'name': 'T_T_HT_10_5_10',

'sigma': 0.3},

{'comp_dist': 'StudentT',

'comp_kwargs': {'nu': 10},

'eps_dist': 'StudentT',

'eps_kwargs': {'mu': 0.0, 'nu': 5, 'sigma': 0.5},

'grw_sigma_dist': 'HalfStudentT',

'grw_sigma_kwargs': {'nu': 5, 'sigma': 0.01},

'mu_mu': [-0.5, 0.5],

'mu_sigma': 0.3,

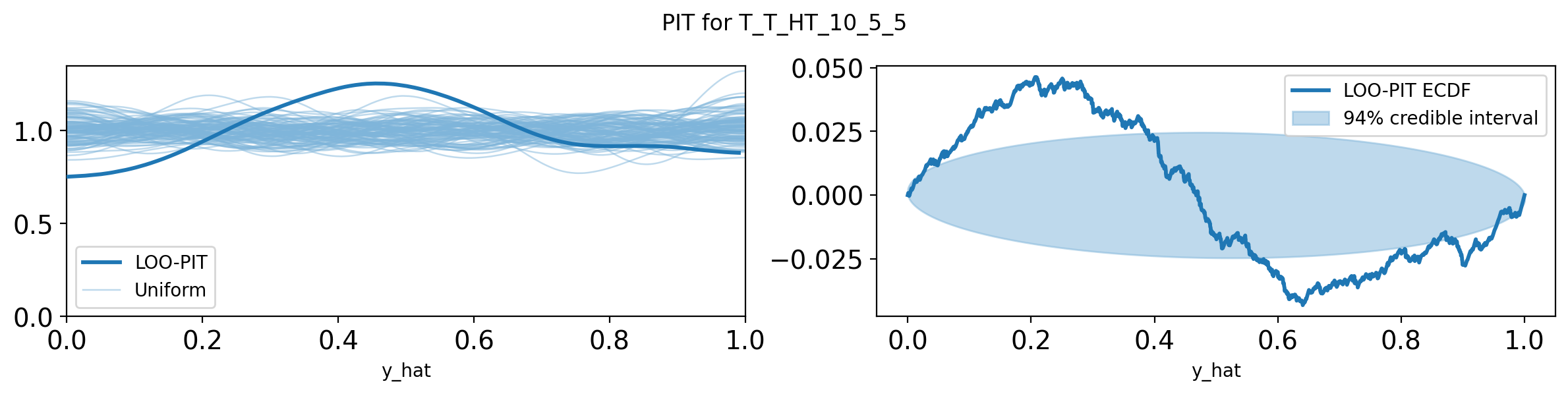

'name': 'T_T_HT_10_5_5',

'sigma': 0.3},

{'comp_dist': 'StudentT',

'comp_kwargs': {'nu': 5},

'eps_dist': 'Normal',

'eps_kwargs': {'mu': 0.0, 'sigma': 1.0},

'grw_sigma_dist': 'HalfNormal',

'grw_sigma_kwargs': {'sigma': 0.05},

'mu_mu': [-0.5, 0.5],

'mu_sigma': 0.3,

'name': 'T_N_HN_5',

'sigma': 0.3},

{'comp_dist': 'StudentT',

'comp_kwargs': {'nu': 5},

'eps_dist': 'Normal',

'eps_kwargs': {'mu': 0.0, 'sigma': 1.0},

'grw_sigma_dist': 'HalfStudentT',

'grw_sigma_kwargs': {'nu': 10, 'sigma': 0.05},

'mu_mu': [-0.5, 0.5],

'mu_sigma': 0.3,

'name': 'T_N_HT_5_10',

'sigma': 0.3},

{'comp_dist': 'StudentT',

'comp_kwargs': {'nu': 5},

'eps_dist': 'Normal',

'eps_kwargs': {'mu': 0.0, 'sigma': 1.0},

'grw_sigma_dist': 'HalfStudentT',

'grw_sigma_kwargs': {'nu': 5, 'sigma': 0.01},

'mu_mu': [-0.5, 0.5],

'mu_sigma': 0.3,

'name': 'T_N_HT_5_5',

'sigma': 0.3},

{'comp_dist': 'StudentT',

'comp_kwargs': {'nu': 5},

'eps_dist': 'StudentT',

'eps_kwargs': {'mu': 0.0, 'nu': 10, 'sigma': 1.0},

'grw_sigma_dist': 'HalfNormal',

'grw_sigma_kwargs': {'sigma': 0.05},

'mu_mu': [-0.5, 0.5],

'mu_sigma': 0.3,

'name': 'T_T_HN_5_10',

'sigma': 0.3},

{'comp_dist': 'StudentT',

'comp_kwargs': {'nu': 5},

'eps_dist': 'StudentT',

'eps_kwargs': {'mu': 0.0, 'nu': 10, 'sigma': 1.0},

'grw_sigma_dist': 'HalfStudentT',

'grw_sigma_kwargs': {'nu': 10, 'sigma': 0.05},

'mu_mu': [-0.5, 0.5],

'mu_sigma': 0.3,

'name': 'T_T_HT_5_10_10',

'sigma': 0.3},

{'comp_dist': 'StudentT',

'comp_kwargs': {'nu': 5},

'eps_dist': 'StudentT',

'eps_kwargs': {'mu': 0.0, 'nu': 10, 'sigma': 1.0},

'grw_sigma_dist': 'HalfStudentT',

'grw_sigma_kwargs': {'nu': 5, 'sigma': 0.01},

'mu_mu': [-0.5, 0.5],

'mu_sigma': 0.3,

'name': 'T_T_HT_5_10_5',

'sigma': 0.3},

{'comp_dist': 'StudentT',

'comp_kwargs': {'nu': 5},

'eps_dist': 'StudentT',

'eps_kwargs': {'mu': 0.0, 'nu': 5, 'sigma': 0.5},

'grw_sigma_dist': 'HalfNormal',

'grw_sigma_kwargs': {'sigma': 0.05},

'mu_mu': [-0.5, 0.5],

'mu_sigma': 0.3,

'name': 'T_T_HN_5_5',

'sigma': 0.3},

{'comp_dist': 'StudentT',

'comp_kwargs': {'nu': 5},

'eps_dist': 'StudentT',

'eps_kwargs': {'mu': 0.0, 'nu': 5, 'sigma': 0.5},

'grw_sigma_dist': 'HalfStudentT',

'grw_sigma_kwargs': {'nu': 10, 'sigma': 0.05},

'mu_mu': [-0.5, 0.5],

'mu_sigma': 0.3,

'name': 'T_T_HT_5_5_10',

'sigma': 0.3},

{'comp_dist': 'StudentT',

'comp_kwargs': {'nu': 5},

'eps_dist': 'StudentT',

'eps_kwargs': {'mu': 0.0, 'nu': 5, 'sigma': 0.5},

'grw_sigma_dist': 'HalfStudentT',

'grw_sigma_kwargs': {'nu': 5, 'sigma': 0.01},

'mu_mu': [-0.5, 0.5],

'mu_sigma': 0.3,

'name': 'T_T_HT_5_5_5',

'sigma': 0.3}]Create model builder function

Then, we want to create a function (model_builder) that takes the parameters and returns a PyMC model. This function will be used to build the models in parallel.

NOTE: accidentally, I switched the order of mixture weights, so the posterior mean of

wnow determines the probability of being in a B compartment (coming from the distribution with negative mean)

def build_model_from_config(df, cfg):

"""

Build a PyMC model based on the provided configuration dictionary.

"""

with pm.Model(coords={"pos": df.index.values}) as model:

e1 = pm.Data("e1", df.e1.values, dims="pos")

mu = pm.Normal("mu", mu=cfg['mu_mu'], sigma=cfg['mu_sigma'],

transform=pm.distributions.transforms.ordered, shape=2)

sigma = pm.HalfNormal("sigma", sigma=cfg['sigma'], shape=2)

# components: Normal or StudentT

if cfg['comp_dist'] == "Normal":

components = pm.Normal.dist(mu=mu, sigma=sigma, shape=2)

else:

components = pm.StudentT.dist(mu=mu, sigma=sigma, **cfg['comp_kwargs'], shape=2)

# grw_sigma: HalfNormal or HalfStudentT

if cfg['grw_sigma_dist'] == "HalfNormal":

grw_sigma = pm.HalfNormal("grw_sigma", **cfg['grw_sigma_kwargs'])

else:

grw_sigma = pm.HalfStudentT("grw_sigma", **cfg['grw_sigma_kwargs'])

# eps: Normal or StudentT

if cfg['eps_dist'] == "Normal":

eps = pm.Normal("eps", dims="pos", **cfg['eps_kwargs'])

else:

eps = pm.StudentT("eps", dims="pos", **cfg['eps_kwargs'])

logit_w = pm.Deterministic("logit_w", pt.cumsum(eps * grw_sigma), dims="pos")

w = pm.Deterministic("w", pm.math.sigmoid(logit_w), dims="pos")

y_hat = pm.Mixture("y_hat", w=pt.stack([w, 1-w], axis=1), comp_dists=components,

observed=e1, dims='pos')

return modelSampling loop

Now, we create the sampling loop that should run the models in sequence.

We will name the models by joining the list [number, comp_dist, eps_dist, grw_sigma_dist][eps_nu, comp_nu].

All the traces (InferenceData) will be saved in a dictionary, model_traces.

Sample the models

from datetime import datetime

def log(msg, path=".04_PyMC_GRW_compare.log", overwrite=False):

print(f"LOG: {msg}")

if overwrite:

with open(path, "w") as f:

f.write(msg + "\n")

else:

with open(path, "a") as f:

f.write(msg + "\n")

# Reset the log file

log("Resetting log... \n", overwrite=True)

model_traces = {}

for i, cfg in enumerate(configs):

name = cfg['name']

if name in model_traces:

continue

else:

model_traces[name] = {'model': None, 'trace': None, 'scores': None}

log(f"Sampling {name}...")

try:

start = datetime.now()

log(f"Started at {start.strftime('%H:%M:%S')}")

model_traces[name]['model'] = build_model_from_config(df, cfg)

model = model_traces[name]['model']

trace = pm.sample(draws=1000, tune=1000, chains=7, cores=7, progressbar=False, model=model)

model_traces[name]['trace'] = trace

log(f"--> Took {(datetime.now()-start).total_seconds()} seconds!\n")

except Exception as e:

log(f"Model {name} failed: {e}\n")LOG: Resetting log...

LOG: Sampling N_N_HN...

LOG: Started at 22:36:58Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (7 chains in 7 jobs)

NUTS: [mu, sigma, grw_sigma, eps]

Sampling 7 chains for 1_000 tune and 1_000 draw iterations (7_000 + 7_000 draws total) took 302 seconds.LOG: --> Took 310.351568 seconds!

LOG: Sampling N_N_HT_10...

LOG: Started at 22:42:09Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (7 chains in 7 jobs)

NUTS: [mu, sigma, grw_sigma, eps]

Sampling 7 chains for 1_000 tune and 1_000 draw iterations (7_000 + 7_000 draws total) took 107 seconds.

There were 6980 divergences after tuning. Increase `target_accept` or reparameterize.

The rhat statistic is larger than 1.01 for some parameters. This indicates problems during sampling. See https://arxiv.org/abs/1903.08008 for details

The effective sample size per chain is smaller than 100 for some parameters. A higher number is needed for reliable rhat and ess computation. See https://arxiv.org/abs/1903.08008 for detailsLOG: --> Took 118.167902 seconds!

LOG: Sampling N_N_HT_5...

LOG: Started at 22:44:07Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (7 chains in 7 jobs)

NUTS: [mu, sigma, grw_sigma, eps]

Sampling 7 chains for 1_000 tune and 1_000 draw iterations (7_000 + 7_000 draws total) took 134 seconds.

There were 6851 divergences after tuning. Increase `target_accept` or reparameterize.

Chain 6 reached the maximum tree depth. Increase `max_treedepth`, increase `target_accept` or reparameterize.

The rhat statistic is larger than 1.01 for some parameters. This indicates problems during sampling. See https://arxiv.org/abs/1903.08008 for details

The effective sample size per chain is smaller than 100 for some parameters. A higher number is needed for reliable rhat and ess computation. See https://arxiv.org/abs/1903.08008 for detailsLOG: --> Took 143.521094 seconds!

LOG: Sampling N_T_HN_10...

LOG: Started at 22:46:30Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (7 chains in 7 jobs)

NUTS: [mu, sigma, grw_sigma, eps]

Sampling 7 chains for 1_000 tune and 1_000 draw iterations (7_000 + 7_000 draws total) took 320 seconds.LOG: --> Took 326.008741 seconds!

LOG: Sampling N_T_HT_10_10...

LOG: Started at 22:51:56Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (7 chains in 7 jobs)

NUTS: [mu, sigma, grw_sigma, eps]

Sampling 7 chains for 1_000 tune and 1_000 draw iterations (7_000 + 7_000 draws total) took 157 seconds.

There were 6843 divergences after tuning. Increase `target_accept` or reparameterize.

Chain 6 reached the maximum tree depth. Increase `max_treedepth`, increase `target_accept` or reparameterize.

The rhat statistic is larger than 1.01 for some parameters. This indicates problems during sampling. See https://arxiv.org/abs/1903.08008 for details

The effective sample size per chain is smaller than 100 for some parameters. A higher number is needed for reliable rhat and ess computation. See https://arxiv.org/abs/1903.08008 for detailsLOG: --> Took 166.726683 seconds!

LOG: Sampling N_T_HT_10_5...

LOG: Started at 22:54:43Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (7 chains in 7 jobs)

NUTS: [mu, sigma, grw_sigma, eps]

Sampling 7 chains for 1_000 tune and 1_000 draw iterations (7_000 + 7_000 draws total) took 76 seconds.

There were 6997 divergences after tuning. Increase `target_accept` or reparameterize.

The rhat statistic is larger than 1.01 for some parameters. This indicates problems during sampling. See https://arxiv.org/abs/1903.08008 for details

The effective sample size per chain is smaller than 100 for some parameters. A higher number is needed for reliable rhat and ess computation. See https://arxiv.org/abs/1903.08008 for detailsLOG: --> Took 85.394018 seconds!

LOG: Sampling N_T_HN_5...

LOG: Started at 22:56:08Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (7 chains in 7 jobs)

NUTS: [mu, sigma, grw_sigma, eps]

Sampling 7 chains for 1_000 tune and 1_000 draw iterations (7_000 + 7_000 draws total) took 229 seconds.

The rhat statistic is larger than 1.01 for some parameters. This indicates problems during sampling. See https://arxiv.org/abs/1903.08008 for details

The effective sample size per chain is smaller than 100 for some parameters. A higher number is needed for reliable rhat and ess computation. See https://arxiv.org/abs/1903.08008 for detailsLOG: --> Took 240.948306 seconds!

LOG: Sampling N_T_HT_5_10...

LOG: Started at 23:00:09Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (7 chains in 7 jobs)

NUTS: [mu, sigma, grw_sigma, eps]

Sampling 7 chains for 1_000 tune and 1_000 draw iterations (7_000 + 7_000 draws total) took 164 seconds.

There were 6873 divergences after tuning. Increase `target_accept` or reparameterize.

Chain 4 reached the maximum tree depth. Increase `max_treedepth`, increase `target_accept` or reparameterize.

The rhat statistic is larger than 1.01 for some parameters. This indicates problems during sampling. See https://arxiv.org/abs/1903.08008 for details

The effective sample size per chain is smaller than 100 for some parameters. A higher number is needed for reliable rhat and ess computation. See https://arxiv.org/abs/1903.08008 for detailsLOG: --> Took 174.36611 seconds!

LOG: Sampling N_T_HT_5_5...

LOG: Started at 23:03:04Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (7 chains in 7 jobs)

NUTS: [mu, sigma, grw_sigma, eps]

Sampling 7 chains for 1_000 tune and 1_000 draw iterations (7_000 + 7_000 draws total) took 91 seconds.

There were 6999 divergences after tuning. Increase `target_accept` or reparameterize.

The rhat statistic is larger than 1.01 for some parameters. This indicates problems during sampling. See https://arxiv.org/abs/1903.08008 for details

The effective sample size per chain is smaller than 100 for some parameters. A higher number is needed for reliable rhat and ess computation. See https://arxiv.org/abs/1903.08008 for detailsLOG: --> Took 101.124336 seconds!

LOG: Sampling T_N_HN_10...

LOG: Started at 23:04:45Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (7 chains in 7 jobs)

NUTS: [mu, sigma, grw_sigma, eps]

Sampling 7 chains for 1_000 tune and 1_000 draw iterations (7_000 + 7_000 draws total) took 359 seconds.LOG: --> Took 365.130255 seconds!

LOG: Sampling T_N_HT_10_10...

LOG: Started at 23:10:50Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (7 chains in 7 jobs)

NUTS: [mu, sigma, grw_sigma, eps]

Sampling 7 chains for 1_000 tune and 1_000 draw iterations (7_000 + 7_000 draws total) took 122 seconds.

There were 6989 divergences after tuning. Increase `target_accept` or reparameterize.

The rhat statistic is larger than 1.01 for some parameters. This indicates problems during sampling. See https://arxiv.org/abs/1903.08008 for details

The effective sample size per chain is smaller than 100 for some parameters. A higher number is needed for reliable rhat and ess computation. See https://arxiv.org/abs/1903.08008 for detailsLOG: --> Took 132.235343 seconds!

LOG: Sampling T_N_HT_10_5...

LOG: Started at 23:13:02Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (7 chains in 7 jobs)

NUTS: [mu, sigma, grw_sigma, eps]

Sampling 7 chains for 1_000 tune and 1_000 draw iterations (7_000 + 7_000 draws total) took 171 seconds.

There were 6805 divergences after tuning. Increase `target_accept` or reparameterize.

Chain 0 reached the maximum tree depth. Increase `max_treedepth`, increase `target_accept` or reparameterize.

The rhat statistic is larger than 1.01 for some parameters. This indicates problems during sampling. See https://arxiv.org/abs/1903.08008 for details

The effective sample size per chain is smaller than 100 for some parameters. A higher number is needed for reliable rhat and ess computation. See https://arxiv.org/abs/1903.08008 for detailsLOG: --> Took 181.227185 seconds!

LOG: Sampling T_T_HN_10_10...

LOG: Started at 23:16:03Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (7 chains in 7 jobs)

NUTS: [mu, sigma, grw_sigma, eps]

Sampling 7 chains for 1_000 tune and 1_000 draw iterations (7_000 + 7_000 draws total) took 366 seconds.LOG: --> Took 372.410547 seconds!

LOG: Sampling T_T_HT_10_10_10...

LOG: Started at 23:22:16Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (7 chains in 7 jobs)

NUTS: [mu, sigma, grw_sigma, eps]

Sampling 7 chains for 1_000 tune and 1_000 draw iterations (7_000 + 7_000 draws total) took 103 seconds.

There were 6999 divergences after tuning. Increase `target_accept` or reparameterize.

The rhat statistic is larger than 1.01 for some parameters. This indicates problems during sampling. See https://arxiv.org/abs/1903.08008 for details

The effective sample size per chain is smaller than 100 for some parameters. A higher number is needed for reliable rhat and ess computation. See https://arxiv.org/abs/1903.08008 for detailsLOG: --> Took 113.559086 seconds!

LOG: Sampling T_T_HT_10_10_5...

LOG: Started at 23:24:09Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (7 chains in 7 jobs)

NUTS: [mu, sigma, grw_sigma, eps]

Sampling 7 chains for 1_000 tune and 1_000 draw iterations (7_000 + 7_000 draws total) took 164 seconds.

There were 6969 divergences after tuning. Increase `target_accept` or reparameterize.

The rhat statistic is larger than 1.01 for some parameters. This indicates problems during sampling. See https://arxiv.org/abs/1903.08008 for details

The effective sample size per chain is smaller than 100 for some parameters. A higher number is needed for reliable rhat and ess computation. See https://arxiv.org/abs/1903.08008 for detailsLOG: --> Took 173.920995 seconds!

LOG: Sampling T_T_HN_10_5...

LOG: Started at 23:27:03Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (7 chains in 7 jobs)

NUTS: [mu, sigma, grw_sigma, eps]

Sampling 7 chains for 1_000 tune and 1_000 draw iterations (7_000 + 7_000 draws total) took 241 seconds.

The rhat statistic is larger than 1.01 for some parameters. This indicates problems during sampling. See https://arxiv.org/abs/1903.08008 for detailsLOG: --> Took 246.952836 seconds!

LOG: Sampling T_T_HT_10_5_10...

LOG: Started at 23:31:10Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (7 chains in 7 jobs)

NUTS: [mu, sigma, grw_sigma, eps]

Sampling 7 chains for 1_000 tune and 1_000 draw iterations (7_000 + 7_000 draws total) took 120 seconds.

There were 6995 divergences after tuning. Increase `target_accept` or reparameterize.

The rhat statistic is larger than 1.01 for some parameters. This indicates problems during sampling. See https://arxiv.org/abs/1903.08008 for details

The effective sample size per chain is smaller than 100 for some parameters. A higher number is needed for reliable rhat and ess computation. See https://arxiv.org/abs/1903.08008 for detailsLOG: --> Took 129.991988 seconds!

LOG: Sampling T_T_HT_10_5_5...

LOG: Started at 23:33:20Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (7 chains in 7 jobs)

NUTS: [mu, sigma, grw_sigma, eps]

Sampling 7 chains for 1_000 tune and 1_000 draw iterations (7_000 + 7_000 draws total) took 81 seconds.

There were 7000 divergences after tuning. Increase `target_accept` or reparameterize.

The rhat statistic is larger than 1.01 for some parameters. This indicates problems during sampling. See https://arxiv.org/abs/1903.08008 for details

The effective sample size per chain is smaller than 100 for some parameters. A higher number is needed for reliable rhat and ess computation. See https://arxiv.org/abs/1903.08008 for detailsLOG: --> Took 91.027394 seconds!

LOG: Sampling T_N_HN_5...

LOG: Started at 23:34:51Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (7 chains in 7 jobs)

NUTS: [mu, sigma, grw_sigma, eps]

Sampling 7 chains for 1_000 tune and 1_000 draw iterations (7_000 + 7_000 draws total) took 362 seconds.LOG: --> Took 374.295864 seconds!

LOG: Sampling T_N_HT_5_10...

LOG: Started at 23:41:06Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (7 chains in 7 jobs)

NUTS: [mu, sigma, grw_sigma, eps]

Sampling 7 chains for 1_000 tune and 1_000 draw iterations (7_000 + 7_000 draws total) took 149 seconds.

There were 6938 divergences after tuning. Increase `target_accept` or reparameterize.

The rhat statistic is larger than 1.01 for some parameters. This indicates problems during sampling. See https://arxiv.org/abs/1903.08008 for details

The effective sample size per chain is smaller than 100 for some parameters. A higher number is needed for reliable rhat and ess computation. See https://arxiv.org/abs/1903.08008 for detailsLOG: --> Took 158.387342 seconds!

LOG: Sampling T_N_HT_5_5...

LOG: Started at 23:43:44Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (7 chains in 7 jobs)

NUTS: [mu, sigma, grw_sigma, eps]

Sampling 7 chains for 1_000 tune and 1_000 draw iterations (7_000 + 7_000 draws total) took 95 seconds.

There were 6993 divergences after tuning. Increase `target_accept` or reparameterize.

The rhat statistic is larger than 1.01 for some parameters. This indicates problems during sampling. See https://arxiv.org/abs/1903.08008 for details

The effective sample size per chain is smaller than 100 for some parameters. A higher number is needed for reliable rhat and ess computation. See https://arxiv.org/abs/1903.08008 for detailsLOG: --> Took 105.102596 seconds!

LOG: Sampling T_T_HN_5_10...

LOG: Started at 23:45:29Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (7 chains in 7 jobs)

NUTS: [mu, sigma, grw_sigma, eps]

Sampling 7 chains for 1_000 tune and 1_000 draw iterations (7_000 + 7_000 draws total) took 367 seconds.LOG: --> Took 372.963417 seconds!

LOG: Sampling T_T_HT_5_10_10...

LOG: Started at 23:51:42Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (7 chains in 7 jobs)

NUTS: [mu, sigma, grw_sigma, eps]

Sampling 7 chains for 1_000 tune and 1_000 draw iterations (7_000 + 7_000 draws total) took 110 seconds.

There were 6978 divergences after tuning. Increase `target_accept` or reparameterize.

The rhat statistic is larger than 1.01 for some parameters. This indicates problems during sampling. See https://arxiv.org/abs/1903.08008 for details

The effective sample size per chain is smaller than 100 for some parameters. A higher number is needed for reliable rhat and ess computation. See https://arxiv.org/abs/1903.08008 for detailsLOG: --> Took 120.264809 seconds!

LOG: Sampling T_T_HT_5_10_5...

LOG: Started at 23:53:42Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (7 chains in 7 jobs)

NUTS: [mu, sigma, grw_sigma, eps]

Sampling 7 chains for 1_000 tune and 1_000 draw iterations (7_000 + 7_000 draws total) took 92 seconds.

There were 7000 divergences after tuning. Increase `target_accept` or reparameterize.

The rhat statistic is larger than 1.01 for some parameters. This indicates problems during sampling. See https://arxiv.org/abs/1903.08008 for details

The effective sample size per chain is smaller than 100 for some parameters. A higher number is needed for reliable rhat and ess computation. See https://arxiv.org/abs/1903.08008 for detailsLOG: --> Took 102.415237 seconds!

LOG: Sampling T_T_HN_5_5...

LOG: Started at 23:55:25Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (7 chains in 7 jobs)

NUTS: [mu, sigma, grw_sigma, eps]

Sampling 7 chains for 1_000 tune and 1_000 draw iterations (7_000 + 7_000 draws total) took 229 seconds.

The rhat statistic is larger than 1.01 for some parameters. This indicates problems during sampling. See https://arxiv.org/abs/1903.08008 for details

The effective sample size per chain is smaller than 100 for some parameters. A higher number is needed for reliable rhat and ess computation. See https://arxiv.org/abs/1903.08008 for detailsLOG: --> Took 235.065423 seconds!

LOG: Sampling T_T_HT_5_5_10...

LOG: Started at 23:59:20Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (7 chains in 7 jobs)

NUTS: [mu, sigma, grw_sigma, eps]

Sampling 7 chains for 1_000 tune and 1_000 draw iterations (7_000 + 7_000 draws total) took 115 seconds.

There were 6980 divergences after tuning. Increase `target_accept` or reparameterize.

The rhat statistic is larger than 1.01 for some parameters. This indicates problems during sampling. See https://arxiv.org/abs/1903.08008 for details

The effective sample size per chain is smaller than 100 for some parameters. A higher number is needed for reliable rhat and ess computation. See https://arxiv.org/abs/1903.08008 for detailsLOG: --> Took 125.419846 seconds!

LOG: Sampling T_T_HT_5_5_5...

LOG: Started at 00:01:25Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (7 chains in 7 jobs)

NUTS: [mu, sigma, grw_sigma, eps]

Sampling 7 chains for 1_000 tune and 1_000 draw iterations (7_000 + 7_000 draws total) took 148 seconds.

There were 6826 divergences after tuning. Increase `target_accept` or reparameterize.

Chain 4 reached the maximum tree depth. Increase `max_treedepth`, increase `target_accept` or reparameterize.

The rhat statistic is larger than 1.01 for some parameters. This indicates problems during sampling. See https://arxiv.org/abs/1903.08008 for details

The effective sample size per chain is smaller than 100 for some parameters. A higher number is needed for reliable rhat and ess computation. See https://arxiv.org/abs/1903.08008 for detailsLOG: --> Took 158.14628 seconds!

model_traces.items()dict_items([('N_N_HN', {'model': <pymc.model.core.Model object at 0x149177a5b4d0>, 'trace': Inference data with groups:

> posterior

> sample_stats

> observed_data, 'scores': None}), ('N_N_HT_10', {'model': <pymc.model.core.Model object at 0x14919e1710f0>, 'trace': Inference data with groups:

> posterior

> sample_stats

> observed_data, 'scores': None}), ('N_N_HT_5', {'model': <pymc.model.core.Model object at 0x1491912d3490>, 'trace': Inference data with groups:

> posterior

> sample_stats

> observed_data, 'scores': None}), ('N_T_HN_10', {'model': <pymc.model.core.Model object at 0x1491912d2190>, 'trace': Inference data with groups:

> posterior

> sample_stats

> observed_data, 'scores': None}), ('N_T_HT_10_10', {'model': <pymc.model.core.Model object at 0x1492013468b0>, 'trace': Inference data with groups:

> posterior

> sample_stats

> observed_data, 'scores': None}), ('N_T_HT_10_5', {'model': <pymc.model.core.Model object at 0x14917608fce0>, 'trace': Inference data with groups:

> posterior

> sample_stats

> observed_data, 'scores': None}), ('N_T_HN_5', {'model': <pymc.model.core.Model object at 0x14917608df30>, 'trace': Inference data with groups:

> posterior

> sample_stats

> observed_data, 'scores': None}), ('N_T_HT_5_10', {'model': <pymc.model.core.Model object at 0x14917608de00>, 'trace': Inference data with groups:

> posterior

> sample_stats

> observed_data, 'scores': None}), ('N_T_HT_5_5', {'model': <pymc.model.core.Model object at 0x14917608d6e0>, 'trace': Inference data with groups:

> posterior

> sample_stats

> observed_data, 'scores': None}), ('T_N_HN_10', {'model': <pymc.model.core.Model object at 0x14917608e520>, 'trace': Inference data with groups:

> posterior

> sample_stats

> observed_data, 'scores': None}), ('T_N_HT_10_10', {'model': <pymc.model.core.Model object at 0x14917608e2c0>, 'trace': Inference data with groups:

> posterior

> sample_stats

> observed_data, 'scores': None}), ('T_N_HT_10_5', {'model': <pymc.model.core.Model object at 0x14917608e3f0>, 'trace': Inference data with groups:

> posterior

> sample_stats

> observed_data, 'scores': None}), ('T_T_HN_10_10', {'model': <pymc.model.core.Model object at 0x14917608d940>, 'trace': Inference data with groups:

> posterior

> sample_stats

> observed_data, 'scores': None}), ('T_T_HT_10_10_10', {'model': <pymc.model.core.Model object at 0x14917608e780>, 'trace': Inference data with groups:

> posterior

> sample_stats

> observed_data, 'scores': None}), ('T_T_HT_10_10_5', {'model': <pymc.model.core.Model object at 0x14917608fbb0>, 'trace': Inference data with groups:

> posterior

> sample_stats

> observed_data, 'scores': None}), ('T_T_HN_10_5', {'model': <pymc.model.core.Model object at 0x14917608dba0>, 'trace': Inference data with groups:

> posterior

> sample_stats

> observed_data, 'scores': None}), ('T_T_HT_10_5_10', {'model': <pymc.model.core.Model object at 0x14917608e190>, 'trace': Inference data with groups:

> posterior

> sample_stats

> observed_data, 'scores': None}), ('T_T_HT_10_5_5', {'model': <pymc.model.core.Model object at 0x14917608f230>, 'trace': Inference data with groups:

> posterior

> sample_stats

> observed_data, 'scores': None}), ('T_N_HN_5', {'model': <pymc.model.core.Model object at 0x14917608d810>, 'trace': Inference data with groups:

> posterior

> sample_stats

> observed_data, 'scores': None}), ('T_N_HT_5_10', {'model': <pymc.model.core.Model object at 0x14917608dcd0>, 'trace': Inference data with groups:

> posterior

> sample_stats

> observed_data, 'scores': None}), ('T_N_HT_5_5', {'model': <pymc.model.core.Model object at 0x1491676a48a0>, 'trace': Inference data with groups:

> posterior

> sample_stats

> observed_data, 'scores': None}), ('T_T_HN_5_10', {'model': <pymc.model.core.Model object at 0x14917608f5c0>, 'trace': Inference data with groups:

> posterior

> sample_stats

> observed_data, 'scores': None}), ('T_T_HT_5_10_10', {'model': <pymc.model.core.Model object at 0x14917608efd0>, 'trace': Inference data with groups:

> posterior

> sample_stats

> observed_data, 'scores': None}), ('T_T_HT_5_10_5', {'model': <pymc.model.core.Model object at 0x14917608fa80>, 'trace': Inference data with groups:

> posterior

> sample_stats

> observed_data, 'scores': None}), ('T_T_HN_5_5', {'model': <pymc.model.core.Model object at 0x14917608e650>, 'trace': Inference data with groups:

> posterior

> sample_stats

> observed_data, 'scores': None}), ('T_T_HT_5_5_10', {'model': <pymc.model.core.Model object at 0x1491676a56e0>, 'trace': Inference data with groups:

> posterior

> sample_stats

> observed_data, 'scores': None}), ('T_T_HT_5_5_5', {'model': <pymc.model.core.Model object at 0x1491676a5ba0>, 'trace': Inference data with groups:

> posterior

> sample_stats

> observed_data, 'scores': None})])Log-lik, WAIC and PPC

for name, model_dict in model_traces.items():

# model_dict.keys(): {'model', 'trace', 'scores'}

try:

print(name)

pm.compute_log_likelihood(

model_dict['trace'],

model=model_dict['model'],

progressbar=False)

except Exception as e:

print(f"{name} failed computing log_likelihood: {e}")

try:

prior_predictive = pm.sample_prior_predictive(

1000,

model=model_dict['model'])

model_dict['trace'].extend(prior_predictive)

except Exception as e:

print(f"{name} failed sampling prior predictive: {e}")

try:

posterior_predictive = pm.sample_posterior_predictive(

model_dict['trace'],

model=model_dict['model'],

progressbar=False)

model_dict['trace'].extend(posterior_predictive)

except Exception as e:

print(f"{name} failed sampling posterior predictive: {e}")

try:

loo = az.loo(model_dict['trace'])

waic = az.waic(model_dict['trace'])

model_dict['scores'] = {

"loo": loo,

"waic": waic

}

except Exception as e:

print(f"{name} failed during loo/waic: {e}")N_N_HNSampling: [eps, grw_sigma, mu, sigma, y_hat]

Sampling: [y_hat]

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1655: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(N_N_HT_10Sampling: [eps, grw_sigma, mu, sigma, y_hat]

Sampling: [y_hat]

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1045: RuntimeWarning: overflow encountered in exp

weights = 1 / np.exp(len_scale - len_scale[:, None]).sum(axis=1)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/numpy/_core/_methods.py:52: RuntimeWarning: overflow encountered in reduce

return umr_sum(a, axis, dtype, out, keepdims, initial, where)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:797: UserWarning: Estimated shape parameter of Pareto distribution is greater than 0.70 for one or more samples. You should consider using a more robust model, this is because importance sampling is less likely to work well if the marginal posterior and LOO posterior are very different. This is more likely to happen with a non-robust model and highly influential observations.

warnings.warn(

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1655: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(N_N_HT_5Sampling: [eps, grw_sigma, mu, sigma, y_hat]

Sampling: [y_hat]

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1045: RuntimeWarning: overflow encountered in exp

weights = 1 / np.exp(len_scale - len_scale[:, None]).sum(axis=1)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/numpy/_core/_methods.py:52: RuntimeWarning: overflow encountered in reduce

return umr_sum(a, axis, dtype, out, keepdims, initial, where)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:797: UserWarning: Estimated shape parameter of Pareto distribution is greater than 0.70 for one or more samples. You should consider using a more robust model, this is because importance sampling is less likely to work well if the marginal posterior and LOO posterior are very different. This is more likely to happen with a non-robust model and highly influential observations.

warnings.warn(

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1655: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(N_T_HN_10Sampling: [eps, grw_sigma, mu, sigma, y_hat]

Sampling: [y_hat]

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1655: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(N_T_HT_10_10Sampling: [eps, grw_sigma, mu, sigma, y_hat]

Sampling: [y_hat]

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1045: RuntimeWarning: overflow encountered in exp

weights = 1 / np.exp(len_scale - len_scale[:, None]).sum(axis=1)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/numpy/_core/_methods.py:52: RuntimeWarning: overflow encountered in reduce

return umr_sum(a, axis, dtype, out, keepdims, initial, where)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:797: UserWarning: Estimated shape parameter of Pareto distribution is greater than 0.70 for one or more samples. You should consider using a more robust model, this is because importance sampling is less likely to work well if the marginal posterior and LOO posterior are very different. This is more likely to happen with a non-robust model and highly influential observations.

warnings.warn(

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1655: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(N_T_HT_10_5Sampling: [eps, grw_sigma, mu, sigma, y_hat]

Sampling: [y_hat]

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1045: RuntimeWarning: overflow encountered in exp

weights = 1 / np.exp(len_scale - len_scale[:, None]).sum(axis=1)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/numpy/_core/_methods.py:52: RuntimeWarning: overflow encountered in reduce

return umr_sum(a, axis, dtype, out, keepdims, initial, where)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:797: UserWarning: Estimated shape parameter of Pareto distribution is greater than 0.70 for one or more samples. You should consider using a more robust model, this is because importance sampling is less likely to work well if the marginal posterior and LOO posterior are very different. This is more likely to happen with a non-robust model and highly influential observations.

warnings.warn(

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1655: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(N_T_HN_5Sampling: [eps, grw_sigma, mu, sigma, y_hat]

Sampling: [y_hat]

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1655: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(N_T_HT_5_10Sampling: [eps, grw_sigma, mu, sigma, y_hat]

Sampling: [y_hat]

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1045: RuntimeWarning: overflow encountered in exp

weights = 1 / np.exp(len_scale - len_scale[:, None]).sum(axis=1)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/numpy/_core/_methods.py:52: RuntimeWarning: overflow encountered in reduce

return umr_sum(a, axis, dtype, out, keepdims, initial, where)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:797: UserWarning: Estimated shape parameter of Pareto distribution is greater than 0.70 for one or more samples. You should consider using a more robust model, this is because importance sampling is less likely to work well if the marginal posterior and LOO posterior are very different. This is more likely to happen with a non-robust model and highly influential observations.

warnings.warn(

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1655: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(N_T_HT_5_5Sampling: [eps, grw_sigma, mu, sigma, y_hat]

Sampling: [y_hat]

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1045: RuntimeWarning: overflow encountered in exp

weights = 1 / np.exp(len_scale - len_scale[:, None]).sum(axis=1)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/numpy/_core/_methods.py:52: RuntimeWarning: overflow encountered in reduce

return umr_sum(a, axis, dtype, out, keepdims, initial, where)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:797: UserWarning: Estimated shape parameter of Pareto distribution is greater than 0.70 for one or more samples. You should consider using a more robust model, this is because importance sampling is less likely to work well if the marginal posterior and LOO posterior are very different. This is more likely to happen with a non-robust model and highly influential observations.

warnings.warn(

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1655: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(T_N_HN_10Sampling: [eps, grw_sigma, mu, sigma, y_hat]

Sampling: [y_hat]

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1655: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(T_N_HT_10_10Sampling: [eps, grw_sigma, mu, sigma, y_hat]

Sampling: [y_hat]

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1045: RuntimeWarning: overflow encountered in exp

weights = 1 / np.exp(len_scale - len_scale[:, None]).sum(axis=1)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/numpy/_core/_methods.py:52: RuntimeWarning: overflow encountered in reduce

return umr_sum(a, axis, dtype, out, keepdims, initial, where)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:797: UserWarning: Estimated shape parameter of Pareto distribution is greater than 0.70 for one or more samples. You should consider using a more robust model, this is because importance sampling is less likely to work well if the marginal posterior and LOO posterior are very different. This is more likely to happen with a non-robust model and highly influential observations.

warnings.warn(

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1655: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(T_N_HT_10_5Sampling: [eps, grw_sigma, mu, sigma, y_hat]

Sampling: [y_hat]

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1045: RuntimeWarning: overflow encountered in exp

weights = 1 / np.exp(len_scale - len_scale[:, None]).sum(axis=1)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/numpy/_core/_methods.py:52: RuntimeWarning: overflow encountered in reduce

return umr_sum(a, axis, dtype, out, keepdims, initial, where)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:797: UserWarning: Estimated shape parameter of Pareto distribution is greater than 0.70 for one or more samples. You should consider using a more robust model, this is because importance sampling is less likely to work well if the marginal posterior and LOO posterior are very different. This is more likely to happen with a non-robust model and highly influential observations.

warnings.warn(

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1655: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(T_T_HN_10_10Sampling: [eps, grw_sigma, mu, sigma, y_hat]

Sampling: [y_hat]

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1655: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(T_T_HT_10_10_10Sampling: [eps, grw_sigma, mu, sigma, y_hat]

Sampling: [y_hat]

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1045: RuntimeWarning: overflow encountered in exp

weights = 1 / np.exp(len_scale - len_scale[:, None]).sum(axis=1)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/numpy/_core/_methods.py:52: RuntimeWarning: overflow encountered in reduce

return umr_sum(a, axis, dtype, out, keepdims, initial, where)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:797: UserWarning: Estimated shape parameter of Pareto distribution is greater than 0.70 for one or more samples. You should consider using a more robust model, this is because importance sampling is less likely to work well if the marginal posterior and LOO posterior are very different. This is more likely to happen with a non-robust model and highly influential observations.

warnings.warn(

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1655: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(T_T_HT_10_10_5Sampling: [eps, grw_sigma, mu, sigma, y_hat]

Sampling: [y_hat]

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1045: RuntimeWarning: overflow encountered in exp

weights = 1 / np.exp(len_scale - len_scale[:, None]).sum(axis=1)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/numpy/_core/_methods.py:52: RuntimeWarning: overflow encountered in reduce

return umr_sum(a, axis, dtype, out, keepdims, initial, where)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:797: UserWarning: Estimated shape parameter of Pareto distribution is greater than 0.70 for one or more samples. You should consider using a more robust model, this is because importance sampling is less likely to work well if the marginal posterior and LOO posterior are very different. This is more likely to happen with a non-robust model and highly influential observations.

warnings.warn(

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1655: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(T_T_HN_10_5Sampling: [eps, grw_sigma, mu, sigma, y_hat]

Sampling: [y_hat]

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:797: UserWarning: Estimated shape parameter of Pareto distribution is greater than 0.70 for one or more samples. You should consider using a more robust model, this is because importance sampling is less likely to work well if the marginal posterior and LOO posterior are very different. This is more likely to happen with a non-robust model and highly influential observations.

warnings.warn(

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1655: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(T_T_HT_10_5_10Sampling: [eps, grw_sigma, mu, sigma, y_hat]

Sampling: [y_hat]

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1045: RuntimeWarning: overflow encountered in exp

weights = 1 / np.exp(len_scale - len_scale[:, None]).sum(axis=1)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/numpy/_core/_methods.py:52: RuntimeWarning: overflow encountered in reduce

return umr_sum(a, axis, dtype, out, keepdims, initial, where)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:797: UserWarning: Estimated shape parameter of Pareto distribution is greater than 0.70 for one or more samples. You should consider using a more robust model, this is because importance sampling is less likely to work well if the marginal posterior and LOO posterior are very different. This is more likely to happen with a non-robust model and highly influential observations.

warnings.warn(

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1655: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(T_T_HT_10_5_5Sampling: [eps, grw_sigma, mu, sigma, y_hat]

Sampling: [y_hat]

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1045: RuntimeWarning: overflow encountered in exp

weights = 1 / np.exp(len_scale - len_scale[:, None]).sum(axis=1)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/numpy/_core/_methods.py:52: RuntimeWarning: overflow encountered in reduce

return umr_sum(a, axis, dtype, out, keepdims, initial, where)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:797: UserWarning: Estimated shape parameter of Pareto distribution is greater than 0.70 for one or more samples. You should consider using a more robust model, this is because importance sampling is less likely to work well if the marginal posterior and LOO posterior are very different. This is more likely to happen with a non-robust model and highly influential observations.

warnings.warn(

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1655: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(T_N_HN_5Sampling: [eps, grw_sigma, mu, sigma, y_hat]

Sampling: [y_hat]

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1655: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(T_N_HT_5_10Sampling: [eps, grw_sigma, mu, sigma, y_hat]

Sampling: [y_hat]

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1045: RuntimeWarning: overflow encountered in exp

weights = 1 / np.exp(len_scale - len_scale[:, None]).sum(axis=1)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/numpy/_core/_methods.py:52: RuntimeWarning: overflow encountered in reduce

return umr_sum(a, axis, dtype, out, keepdims, initial, where)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:797: UserWarning: Estimated shape parameter of Pareto distribution is greater than 0.70 for one or more samples. You should consider using a more robust model, this is because importance sampling is less likely to work well if the marginal posterior and LOO posterior are very different. This is more likely to happen with a non-robust model and highly influential observations.

warnings.warn(

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1655: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(T_N_HT_5_5Sampling: [eps, grw_sigma, mu, sigma, y_hat]

Sampling: [y_hat]

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1045: RuntimeWarning: overflow encountered in exp

weights = 1 / np.exp(len_scale - len_scale[:, None]).sum(axis=1)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/numpy/_core/_methods.py:52: RuntimeWarning: overflow encountered in reduce

return umr_sum(a, axis, dtype, out, keepdims, initial, where)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:797: UserWarning: Estimated shape parameter of Pareto distribution is greater than 0.70 for one or more samples. You should consider using a more robust model, this is because importance sampling is less likely to work well if the marginal posterior and LOO posterior are very different. This is more likely to happen with a non-robust model and highly influential observations.

warnings.warn(

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1655: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(T_T_HN_5_10Sampling: [eps, grw_sigma, mu, sigma, y_hat]

Sampling: [y_hat]

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1655: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(T_T_HT_5_10_10Sampling: [eps, grw_sigma, mu, sigma, y_hat]

Sampling: [y_hat]

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1045: RuntimeWarning: overflow encountered in exp

weights = 1 / np.exp(len_scale - len_scale[:, None]).sum(axis=1)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/numpy/_core/_methods.py:52: RuntimeWarning: overflow encountered in reduce

return umr_sum(a, axis, dtype, out, keepdims, initial, where)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:797: UserWarning: Estimated shape parameter of Pareto distribution is greater than 0.70 for one or more samples. You should consider using a more robust model, this is because importance sampling is less likely to work well if the marginal posterior and LOO posterior are very different. This is more likely to happen with a non-robust model and highly influential observations.

warnings.warn(

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1655: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(T_T_HT_5_10_5Sampling: [eps, grw_sigma, mu, sigma, y_hat]

Sampling: [y_hat]

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1045: RuntimeWarning: overflow encountered in exp

weights = 1 / np.exp(len_scale - len_scale[:, None]).sum(axis=1)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/numpy/_core/_methods.py:52: RuntimeWarning: overflow encountered in reduce

return umr_sum(a, axis, dtype, out, keepdims, initial, where)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:797: UserWarning: Estimated shape parameter of Pareto distribution is greater than 0.70 for one or more samples. You should consider using a more robust model, this is because importance sampling is less likely to work well if the marginal posterior and LOO posterior are very different. This is more likely to happen with a non-robust model and highly influential observations.

warnings.warn(

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1655: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(T_T_HN_5_5Sampling: [eps, grw_sigma, mu, sigma, y_hat]

Sampling: [y_hat]T_T_HT_5_5_10Sampling: [eps, grw_sigma, mu, sigma, y_hat]

Sampling: [y_hat]

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1045: RuntimeWarning: overflow encountered in exp

weights = 1 / np.exp(len_scale - len_scale[:, None]).sum(axis=1)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/numpy/_core/_methods.py:52: RuntimeWarning: overflow encountered in reduce

return umr_sum(a, axis, dtype, out, keepdims, initial, where)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:797: UserWarning: Estimated shape parameter of Pareto distribution is greater than 0.70 for one or more samples. You should consider using a more robust model, this is because importance sampling is less likely to work well if the marginal posterior and LOO posterior are very different. This is more likely to happen with a non-robust model and highly influential observations.

warnings.warn(

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1655: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(T_T_HT_5_5_5Sampling: [eps, grw_sigma, mu, sigma, y_hat]

Sampling: [y_hat]

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1045: RuntimeWarning: overflow encountered in exp

weights = 1 / np.exp(len_scale - len_scale[:, None]).sum(axis=1)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/numpy/_core/_methods.py:52: RuntimeWarning: overflow encountered in reduce

return umr_sum(a, axis, dtype, out, keepdims, initial, where)

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:797: UserWarning: Estimated shape parameter of Pareto distribution is greater than 0.70 for one or more samples. You should consider using a more robust model, this is because importance sampling is less likely to work well if the marginal posterior and LOO posterior are very different. This is more likely to happen with a non-robust model and highly influential observations.

warnings.warn(

/home/sojern/miniconda3/envs/pymc/lib/python3.13/site-packages/arviz/stats/stats.py:1655: UserWarning: For one or more samples the posterior variance of the log predictive densities exceeds 0.4. This could be indication of WAIC starting to fail.

See http://arxiv.org/abs/1507.04544 for details

warnings.warn(Save the results

import os.path as op

import os

import cloudpickle

# Save the traces to file (netcdf)

dir = "../results"

for name, d in model_traces.items():

# Save the model.Model as a cloudpickle.dumps

os.makedirs(op.join(dir, "models"), exist_ok=True)

model_path = op.join(dir, "models", f"{name}_model.pkl")

# print(model_path)

with open(model_path, "wb") as f:

cloudpickle.dump(d['model'], f)

# Save the trace as a netcdf file

trace_path = op.join(dir, "traces", f"{name}_trace.nc")

os.makedirs(op.dirname(trace_path), exist_ok=True)

az.to_netcdf(d['trace'], trace_path)

# Load model

with open("../results/models/N_N_HN_model.pkl", "rb") as f:

test_model = cloudpickle.load(f)

# Load trace

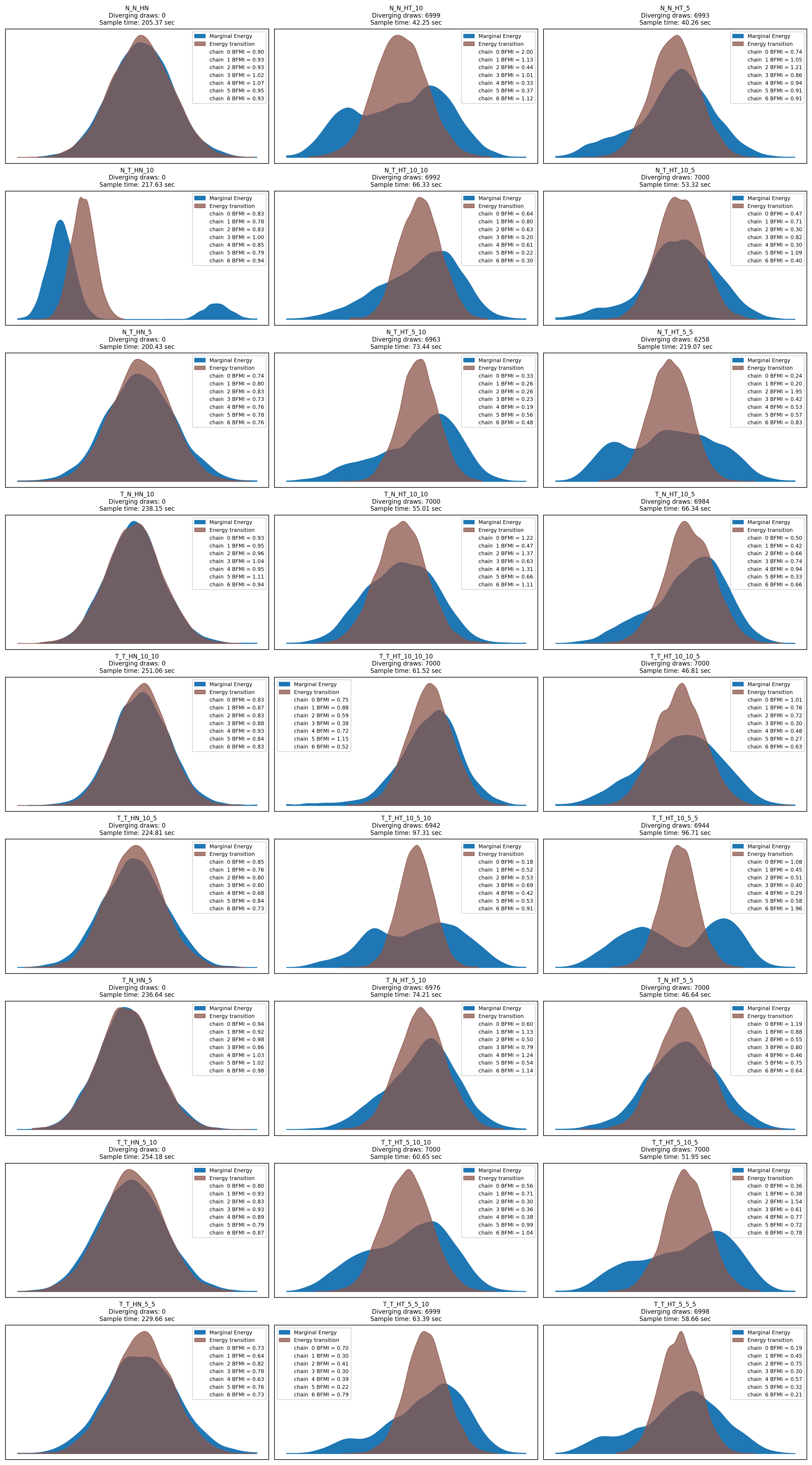

test_trace = az.from_netcdf("../results/traces/N_N_HN_trace.nc")from math import ceil

# Plot the energy for all traces

ncol = 3

nrows = ceil(len(model_traces) / ncol)

f, axs = plt.subplots(nrows=nrows, ncols=ncol, figsize=(15, 3*nrows))

axs = axs.flatten()

for i, (name, d) in enumerate(model_traces.items()):

ax = axs[i]

az.plot_energy(d['trace'], ax=ax)

ax.set_title(name + "\n" +

f"Diverging draws: {d['trace'].sample_stats.diverging.sum().values}\n" +

f"Sample time: {d['trace'].sample_stats.sampling_time:.2f} sec"

)

# Remove empty subplots

[f.delaxes(ax) for ax in axs if not ax.has_data()]

plt.tight_layout()

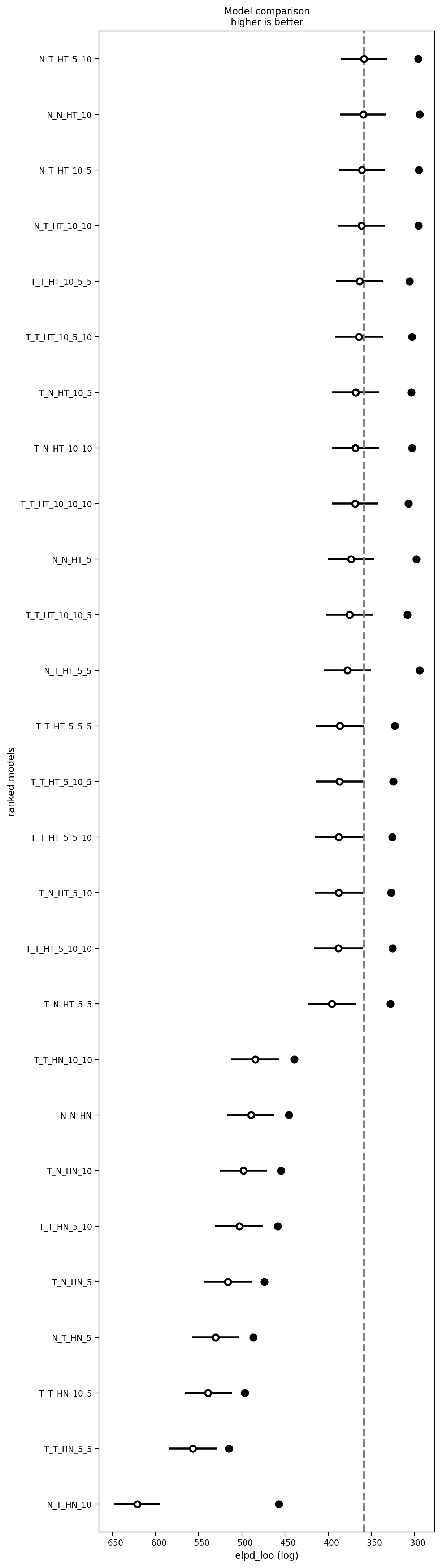

# az.plot_energy(test_trace);# Combine into InferenceData comparison object

loo_dict = {name: model_traces[name]['scores']['loo'] for name in model_traces.keys()}

cmp_df = az.compare(loo_dict, method="stacking", ic="loo")

# Save the comparison DataFrame

cmp_df.to_csv("../results/model_comparison.csv", )

# Print the comparison DataFrame

print("\nModel Comparison Results:")

cmp_df

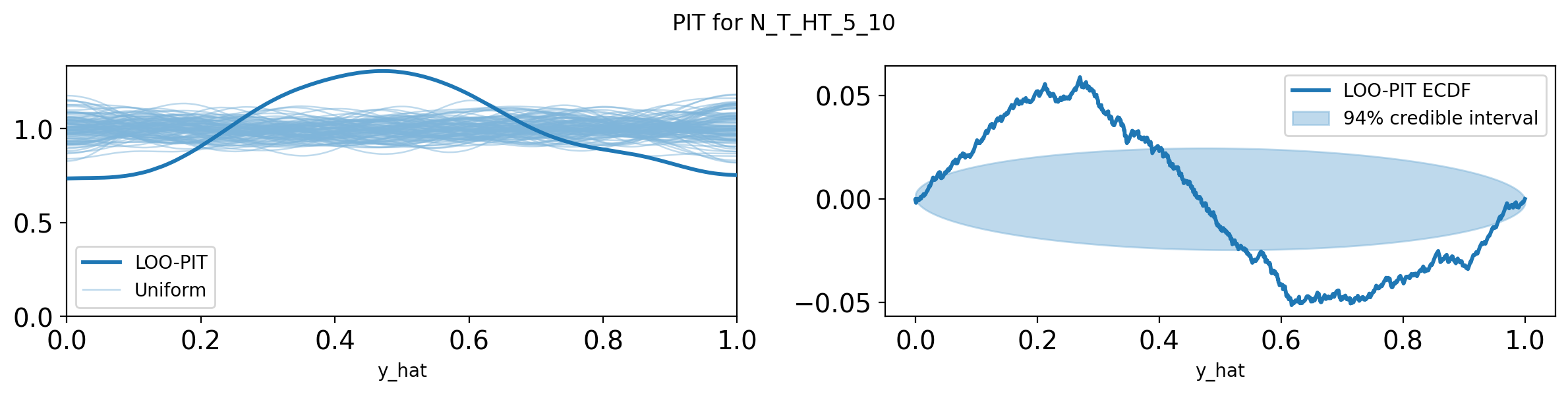

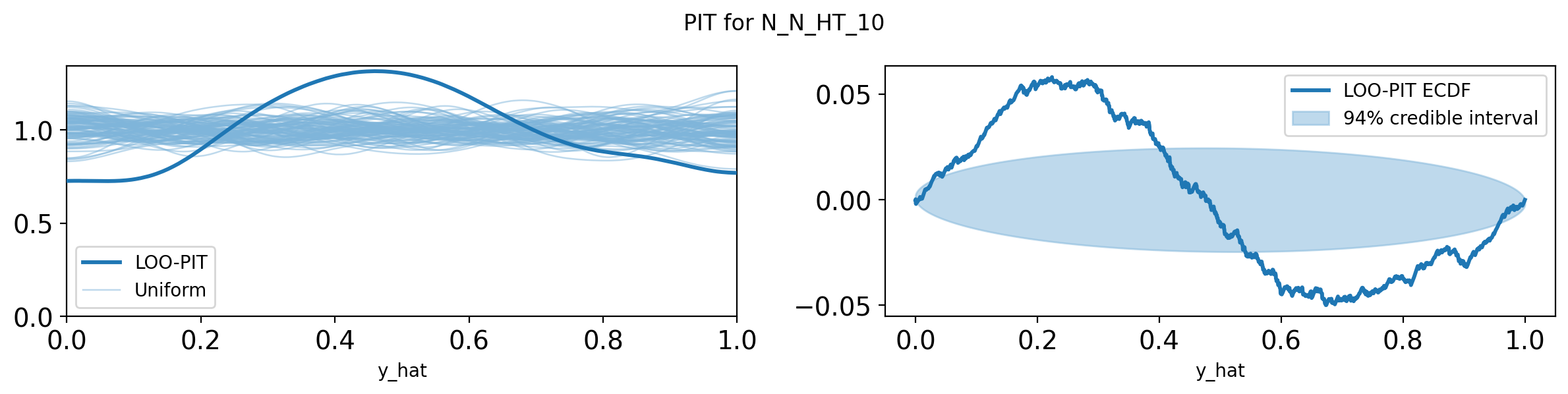

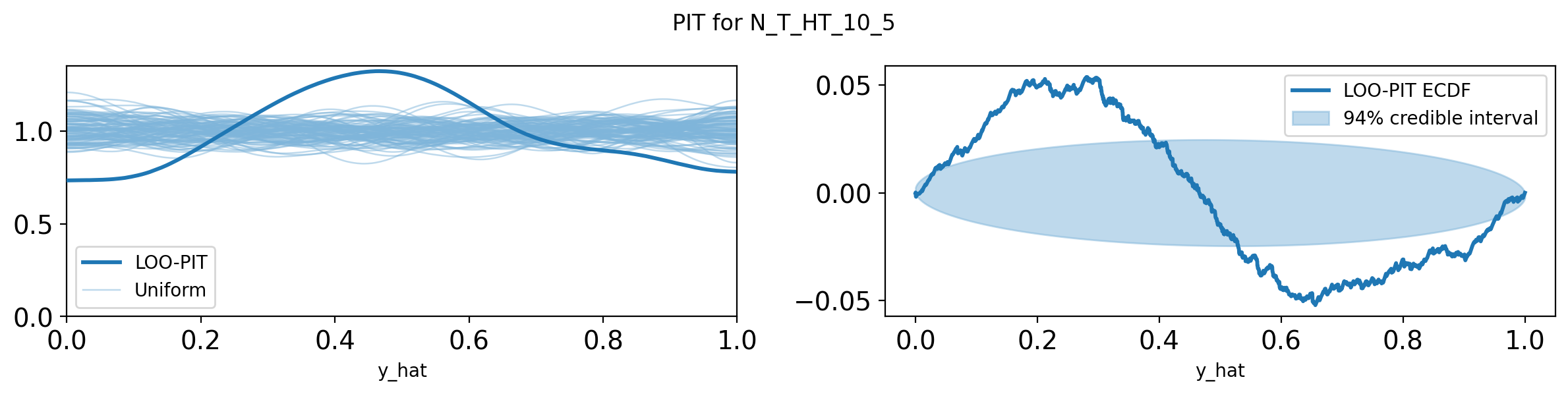

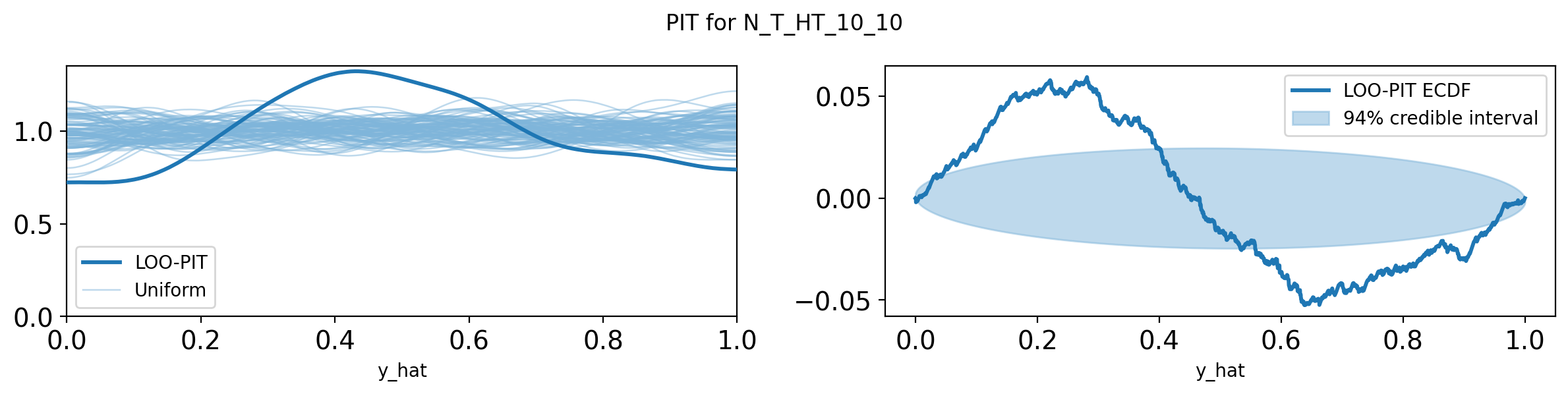

Model Comparison Results:| rank | elpd_loo | p_loo | elpd_diff | weight | se | dse | warning | scale | |

|---|---|---|---|---|---|---|---|---|---|

| N_T_HT_5_10 | 0 | -13.942824 | 112.407689 | 0.000000 | 0.501709 | 26.172102 | 0.000000 | True | log |

| N_T_HT_10_10 | 1 | -14.232323 | 115.634082 | 0.289498 | 0.232978 | 25.852563 | 4.944408 | True | log |

| N_T_HT_10_5 | 2 | -15.196429 | 113.624439 | 1.253604 | 0.265313 | 25.562472 | 5.420416 | True | log |

| T_N_HT_10_5 | 3 | -29.299767 | 103.590498 | 15.356943 | 0.000000 | 26.574965 | 5.712471 | True | log |

| T_T_HT_10_10_10 | 4 | -30.656224 | 110.373495 | 16.713400 | 0.000000 | 26.435805 | 6.042163 | True | log |

| N_T_HT_5_5 | 5 | -33.954243 | 134.999306 | 20.011419 | 0.000000 | 26.360560 | 7.147779 | True | log |

| T_N_HT_10_10 | 6 | -39.808868 | 110.980355 | 25.866044 | 0.000000 | 26.492594 | 5.556646 | True | log |

| T_T_HT_10_5_5 | 7 | -46.613526 | 115.610006 | 32.670702 | 0.000000 | 26.806032 | 6.565634 | True | log |

| T_T_HT_10_10_5 | 8 | -48.198680 | 120.920450 | 34.255855 | 0.000000 | 26.793137 | 7.001829 | True | log |

| T_T_HT_5_10_5 | 9 | -59.106328 | 105.086513 | 45.163503 | 0.000000 | 27.142046 | 6.035451 | True | log |

| T_T_HT_5_5_5 | 10 | -59.685208 | 102.204978 | 45.742384 | 0.000000 | 27.823967 | 7.678840 | True | log |

| T_T_HT_5_10_10 | 11 | -60.728334 | 102.599512 | 46.785510 | 0.000000 | 27.133034 | 6.249436 | True | log |

| T_N_HT_5_5 | 12 | -67.525844 | 107.832978 | 53.583020 | 0.000000 | 27.624499 | 7.079179 | True | log |

| N_T_HN_10 | 13 | -173.060588 | 68.185244 | 159.117764 | 0.000000 | 25.601244 | 7.208652 | False | log |

| N_N_HT_10 | 14 | -174.314027 | 221.807800 | 160.371203 | 0.000000 | 25.234972 | 7.764120 | True | log |